9.3. Files with pandas#

Interactive page

This is an interactive book page. Press launch button at the top right side.

In the previous section, we used NumPy to work with a file containing numerical values. When our data contains different data types, which will often be the case, we resort to pandas package.

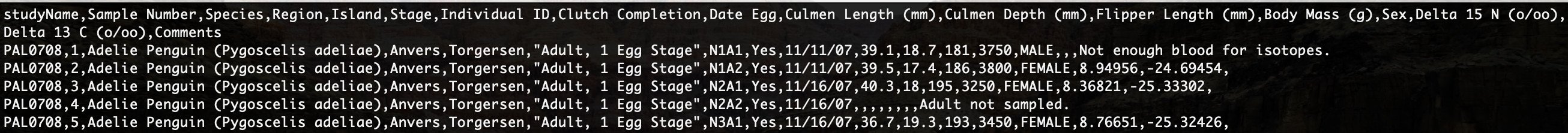

What does such data look like? Let’s use Palmer Archipelago (Antarctica) penguin data as an example dataset.

Fig. 9.3 The first few rows of Palmer Archipelago (Antarctica) penguin data. Note that the column values are not aligned with column names in this preview.#

The penguin dataset contains text (studyName, Island, etc.) and numeric data (Sample Number, Body mass (g), etc.), as well as some missing values (you can spot them in CSV by consecutive commas ,,,, like in the second to last row in the figure above).

To use the pandas library for importing such a data file, we first need to import pandas (as any Python library). It is commonly imported in this way:

import pandas as pd

allowing us to call functions from pandas using the prefix pd..

9.3.1. Loading a file#

To load data into Python using pandas, you can use its pd.read_* function, where wildcard * stands for file type. E.g., you can use pd.read_csv() for CSV files, pd.read_excel() for Excel’s xlsx files, etc.

Fig. 9.4 Reading and writing tabular data with pandas (image from pandas website, © 2024, pandas, image cannot be reused).#

If passed to a variable, these functions will store data from your file into a pandas DataFrame type. As a default, printing it displays the first and last five rows. Let’s try it with our CSV file:

import pandas as pd

penguins = pd.read_csv("penguins.csv")

print(penguins)

print(type(penguins))

If we had an Excel file with these data stored in a sheet called “penguin_analysis”, we could use pd.read_excel() for import:

penguins = pd.read_excel("penguins.xlsx", sheet_name="penguin_analysis")

Very conveniently, head() and tail() methods from pandas allow you to print the selected number of rows at the beginning or end of your DataFrame, respectively. Note that the display of the rows also looks quite neat.

penguins.head(2)

If we’re curious about the data types in the different columns of our penguins DataFrame, we can use the dtypes atttribute. In our DataFrame, we have integers (int64), floats (float64) and strings (object):

penguins.dtypes

Did something look strange to you in the head and dtypes code lines above?

Note that we didn’t use pd.head(penguins) or pd.dtypes(penguins), but instead penguins.head() and penguins.dtypes! Notice also that the difference between a method head and an attribute dtypes in the usage of round brackets. The details of the reason behind this go beyond the level of this book (we give you a glimpse into the reasons in the info box below, in case you’re curious). For now, it’s only important to remember that pandas works this way.

Functional programming vs. object-oriented programming

There are two programming paradigms: functional programming (FP) and object-oriented programming (OOP). FP is based on functions that take inputs and produce outputs. OOP is based on objects, which can contain both data (attributes) and behaviour (methods) that describe characteristics and actions. The details of FP vs. OOP are beyond this book, and we don’t delve into OOP.

The reason for penguins.head() is that pandas is designed using OOP principles, where head() is a method (not a function), so we call penguins.head() a “method call”. Similarly, dtypes is an attribute of a DataFrame, and it doesn’t require the usage of ().

pandas also has some functional interfaces where you use pd. (e.g., pd.read_csv() above), though it’s less commonly used and not as extensive as in NumPy.

Note that NumPy is also designed with OOP principles. E.g., for a NumPy array a = np.array([1, 2, 3]), you can get its mean either with np.mean(a) or with a.mean().

9.3.2. Saving a file#

As we’ve seen in Fig. 9.4, we can save pandas DataFrames into files using to_* where wildcard * again stands for different file types, e.g., to_csv() or to_excel(). Explore: what does index=False in the code below do?

penguins.to_excel("penguins_data.xlsx", sheet_name="penguins_analysis", index=False)

9.3.3. Manipulating data#

Being able to manipulate data is essential in science. Some estimates say that up to 80% of work in data analysis (e.g., in bioinformatics) is data manipulation, i.e., cleaning and preparing the data so that it’s in a form needed for analysis and plotting.

Here we offer a glimpse into the possibilities of data manipulation with pandas. To learn more, visit pandas tutorials, or simply use Google, ChatGPT, or check the cheat sheet when you come across a data manipulation action that you don’t know how to perform.

9.3.3.1. Subset#

9.3.3.1.1. Columns#

Fig. 9.5 Subsetting columns from a pandas DataFrame (image from pandas website, © 2024, pandas, image cannot be reused).#

To select a single column, use square brackets [] with the column name of the column of interest.

# Select column "Species"

penguin_region = penguins["Body Mass (g)"]

print(penguin_region.head(3))

# Print output variable type

print(type(penguin_region))

# Print output variable shape

print(penguin_region.shape)

As you can see, a column of a pandas DataFrame is a pandas Series with shape (344,), i.e., 344 rows and no columns. That tells you that Series is one-dimensional. From the usage penguin_region.shape you can also see that shape is an attribute, so no brackets are used.

If you’re interested in extracting several columns, you can do that in one go like this: dataframe["column1", "column2"]. Note that in this case your output is 2D, and therefore a pandas DataFrame.

9.3.3.1.2. Rows#

Fig. 9.6 Subsetting rows from a pandas DataFrame (image from pandas website, © 2024, pandas, image cannot be reused).#

You can also extract rows from a pandas DataFrame based on a condition. You write the condition between []. Let’s say you’re interested in penguins with body mass above 5 kg:

above_5kg = penguins[penguins["Body Mass (g)"] > 5000]

above_5kg.head(3)

Selection based on condition

The output of the conditional expression (in this case >) is a pandas Series of Boolean values (True or False) with the same number of rows as the original DataFrame. Such a Series of Boolean values can be used to filter the DataFrame by putting it in between the selection brackets []. Only rows for which the value is True will be selected.

Let’s check how many rows we actually end up with compared to the initial DataFrame:

print("Initial shape =", penguins.shape)

print("Above 5 kg =", above_5kg.shape)

You can also combine conditions using special operators: & meaning and and | meaning or. For example, to filter the DataFrame rows of male penguins that weigh below 4 kg, we can use the following:

male_below_4kg = penguins[(penguins["Body Mass (g)"] < 4000) & (penguins["Sex"] == "MALE")]

print("Male below 4 kg =", male_below_4kg.shape)

9.3.3.1.3. Rows and columns#

Fig. 9.7 Subsetting rows and column from a pandas DataFrame (image from pandas website, © 2024, pandas, image cannot be reused).#

To select both specific rows and columns in one go, [] are not sufficient. For this, we need to use operators in front of []:

locfor selecting based on column namesilocfor selecting based on position (row/column position or range)

species_above_5kg = penguins.loc[penguins["Body Mass (g)"] > 5000, "Species"]

print(species_above_5kg.shape)

subset = penguins.iloc[9:25, 2:5]

print(subset.shape)

9.3.3.2. Create columns#

Often in science we want to create new columns in our data for analysis or plotting purposes. For example, we may want to change the unit of data in a given column, or log-transform our data.

Let’s add a new column Body Mass (kg) to our DataFrame by dividing Body Mass (g) by 1000:

penguins["Body Mass (kg)"] = penguins["Body Mass (g)"] / 1000

penguins.head(2)

Note that the operations is done “element-wise”, i.e., on all values in the Body Mass (g) column at once. Therefore, you don’t need a loop to iterate over rows.

Using analogous syntax, you can also make new columns based on the existing ones. Say we have a column with mass and a column with volume, we can create a column with density like this:

dataframe["density"] = dataframe["mass"] / dataframe["volume"]

Other mathematical operators (+, -, *,…) and logical operators (<, >, ==, …) also work element-wise.

9.3.3.3. Combine data from multiple tables#

Very often we need to combine data from several tables. Let’s explore two scenarios for combining tables.

9.3.3.3.1. Concatenating#

Fig. 9.8 Concatenating two pandas DataFrames (image from pandas website, © 2024, pandas, image cannot be reused).#

Say you have performed two experiments and stored your data in two tables that contain the columns of the same names. To “paste” the rows of one table below the rows of the other, we perform concatenation.

Explore: what does axis=0 mean in pd.concat()?

# Load data frame of measurements for iris setosa flowers

setosa = pd.read_csv("Iris-setosa.csv", delimiter=";")

print(setosa.head(2))

# Load data frame of measurements for iris virgnica flowers

virginica = pd.read_csv("Iris-virginica.csv", delimiter=";")

print(virginica.head(2))

# Concatenate two tables of iris flower measurements

iris = pd.concat([setosa, virginica], axis=0)

print(setosa.shape)

print(virginica.shape)

print(iris.shape)

9.3.3.3.2. Join tables#

Fig. 9.9 Merging two pandas DataFrames based on a key (image from pandas website, © 2024, pandas, image cannot be reused).#

Let’s say that you have a large table from a proteomics experiment, containing measurements of all proteins in a yeast cell. Imagine that you want to learn more about these proteins by combining this data with information from databases (such as protein length, their function, etc.). This would require you to combine (join, merge) two DataFrames using a common identifier (key).

Let’s look again at the iris dataset as an example. This time, two data files contain information on the same plants, but have different measurements:

# Load data frame of sepal measurements

sepal = pd.read_csv("Iris-Sepal.csv", delimiter=";")

print(sepal.head(5))

print(sepal.tail(5))

# Load data frame of petal measurements

petal = pd.read_csv("Iris-Petal.csv", delimiter=";")

print(petal.head(5))

print(petal.tail(5))

What could be a useful identifier (key) in these two DataFrames?

If we were to use the iris Species column, that wouldn’t be unique, e.g., there a multiple rows with “Iris-setosa”. What does appear to be a unique identified is Id. Let’s use that column for merging the two tables:

all_measurements = pd.merge(sepal, petal, how="left", on="Id")

all_measurements.head(5)

Note that we could do the same thing even if the ID column was named differently in the two DataFrames - in that case, we would need to use left_on='key_column_name_first_df', right_on='key_column_name_second_df'.

Notice also the how argument. What does that mean, and what are the alternatives to “left”?

Explore this in pandas documentation or with ?.

import micropip

await micropip.install("jupyterquiz")

from jupyterquiz import display_quiz

import json

with open("questions7.json", "r") as file:

questions=json.load(file)

display_quiz(questions, border_radius=0)

---------------------------------------------------------------------------

ModuleNotFoundError Traceback (most recent call last)

Cell In[14], line 1

----> 1 import micropip

2 await micropip.install("jupyterquiz")

3 from jupyterquiz import display_quiz

ModuleNotFoundError: No module named 'micropip'