
6.2. AI Prompting


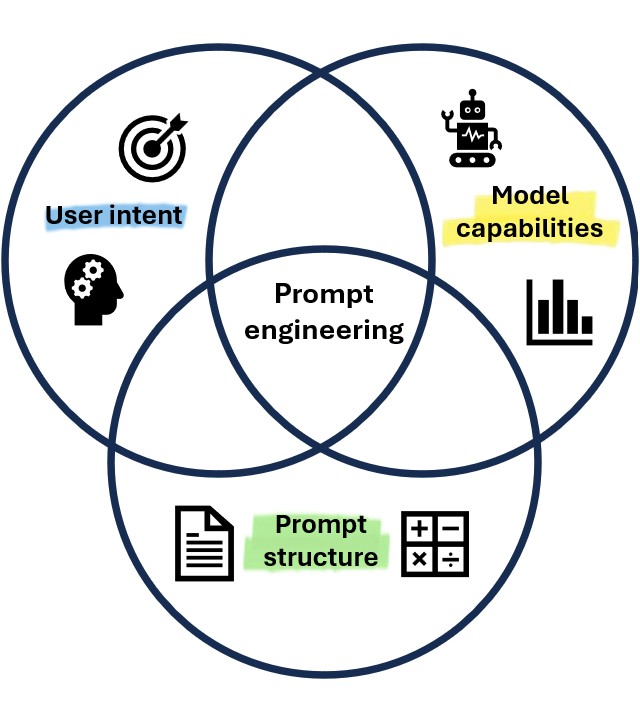

When using LLMs, users interact with the AI by providing input for it to process. This input is known as the prompt, and understanding how to structure a prompt effectively can lead to more accurate and useful replies. Prompt engineering is the process of optimising the prompt in order to obtain the desired output from an LLM. This process usually involves a lot of trial and error, but keeping in mind how LLMs work, along with some general best practices, can greatly reduce the time needed to reach a satisfactory outcome.

Fig. 6.4 Simple representation showing the different aspects that play into prompt engineering.

In this section we present an overview of the different factors that play a role in prompt engineering, exploring strategies and tools with which you can improve the quality of the results obtained from AI models.

6.2.1. Types of Interfaces

There are many types of interfaces through which users can communicate with AI models. The two most widespread are chatbots and APIs. While these are not the only ways to interact with AI models, they are the ones you are most likely to encounter during your studies:

Chatbots: Their name comes from their similarity to a normal conversation; the user starts by sending a first prompt containing an instruction or question, and the model returns an answer to that input. ChatGPT and Microsoft Copilot are examples of this type.

Fig. 6.5 Screenshot taken from ChatGPT’s web interface as a chatbot example.

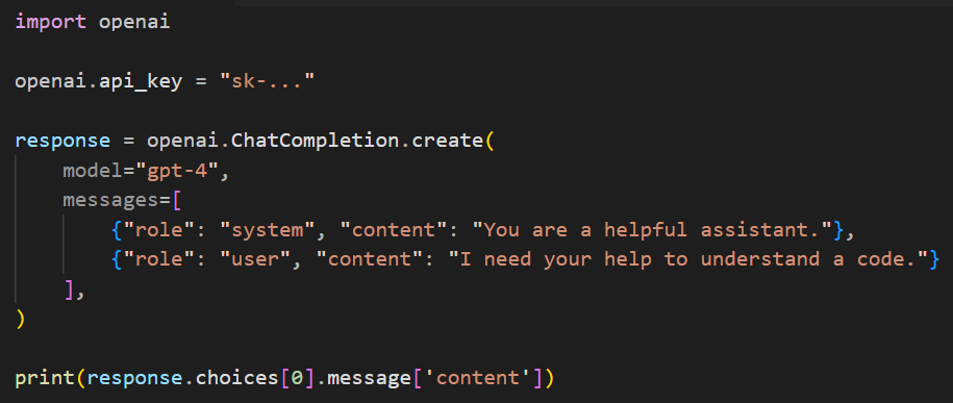

APIs (Application Programming Interfaces): This type of interface enables the integration of LLMs into programs, applications and custom workflows. Unlike chatbots, which provide an interactive conversational experience, APIs require users to structure their requests within a programming language, such as Python or JavaScript, and often require specific parameters to be set in order to generate the expected response. These requests are then passed to the LLM of choice, and the response can then be used in the program.

Fig. 6.6 Screenshot taken from code using OpenAI’s API that lets you “talk to” ChatGPT through code.
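To make the contrast with a chatbot concrete, the sketch below assembles the kind of structured request that an API call typically carries. It does not contact any service. The helper name `build_chat_request`, the model name `"example-model"` and the `temperature` value are placeholders chosen for illustration, and the field names follow the message-list shape commonly used by chat-style APIs, which may differ between providers.

```python
import json


def build_chat_request(prompt, model="example-model", temperature=0.2):
    """Assemble a chat-style request body as a plain dictionary.

    Hypothetical helper: the model name and parameter values are placeholders,
    and real providers may expect different or additional fields.
    """
    return {
        "model": model,
        "temperature": temperature,
        "messages": [{"role": "user", "content": prompt}],
    }


request = build_chat_request("Explain the concept of recursion in three sentences.")

# Serialise the request the way it would be sent over the network.
print(json.dumps(request, indent=2))
```

In a real program, this dictionary would be handed to the provider's client library or sent as an HTTP request, and the reply would come back as structured data that the rest of the program can use.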

6.2.2. Types of processing

When interacting with an LLM, its response is influenced not just by the training it has received, but also by our previous interactions with it. We can distinguish two approaches to input processing that the model can take:

Stateless processing: every prompt needs to be self-contained because each input is processed independently

Stateful processing: the model retains previous information given by the user, allowing for follow-up questions and contextual understanding
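The difference between the two approaches can be sketched in a few lines. Everything here is hypothetical: `fake_model` is a stand-in that only reports how many user messages it was given, and the sketch assumes that state is kept by resending the conversation history with each prompt, which is one common way of doing it but not the only one.

```python
def fake_model(messages):
    """Stand-in for an LLM: reports how many user messages it can see."""
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"I can see {len(user_turns)} user message(s); the latest is: {user_turns[-1]!r}"


def ask_stateless(prompt):
    # Each call sends only the current prompt, so nothing carries over.
    return fake_model([{"role": "user", "content": prompt}])


class StatefulChat:
    """Keeps the conversation history and resends it with every prompt."""

    def __init__(self):
        self.history = []

    def ask(self, prompt):
        self.history.append({"role": "user", "content": prompt})
        reply = fake_model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


print(ask_stateless("My name is Ada."))
print(ask_stateless("What is my name?"))  # sees 1 message: the context is lost

chat = StatefulChat()
print(chat.ask("My name is Ada."))
print(chat.ask("What is my name?"))  # sees 2 messages: the context is kept
```

With the stateless function, the second question arrives without the first message, which is why such prompts must be self-contained.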

6.2.3. Prompt Strategies

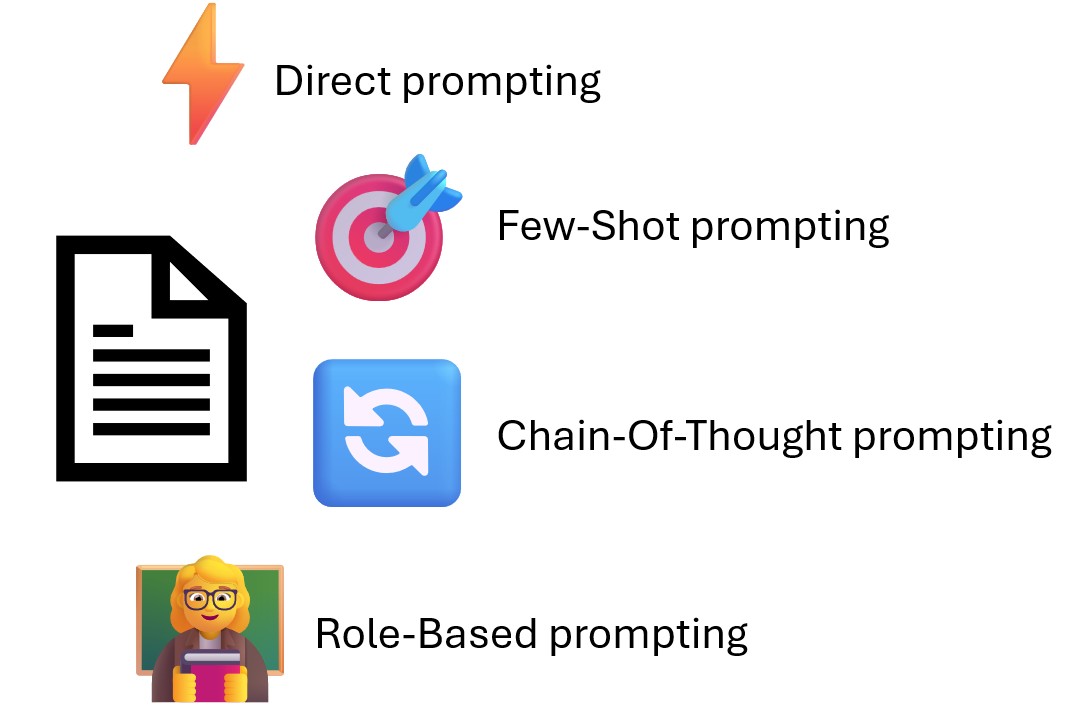

Although it is good practice to include them, not every element of a prompt (see Section 6.2.4) needs to be present every time. The different combinations of prompt components lead to different prompting strategies that we can use to interact with LLMs. We are going to touch upon some of the more common strategies. We encourage you to open your chatbot of choice and try the examples below.

Fig. 6.7 Four most common prompt strategies used.

Direct or zero-shot prompting

This means asking the LLM to complete a task directly, without examples or additional guidance. It is the simplest strategy, so it will not always give the best results.

Translate this text to Spanish: “The words of wisdom”.

Explain the concept of recursion in three sentences.

One or few-shot prompting

This strategy provides one or more examples within the prompt to guide the model’s response. The model can then infer the context, the instructions, and the desired pattern from the example(s).

‘Convert the sentence to a formal tone as in the two given examples:

What’s up? Are you free later? → I hope this message finds you well. Would you be available later today?

Hey, can you send me that report soon? → Could you please send me the report at your earliest convenience?

Can you help me with this task? → ?’
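When the same pattern is reused with different examples, the prompt can be assembled in code. The sketch below is one possible way to do this; the helper name `build_few_shot_prompt` is hypothetical, and the example sentences are the ones from the prompt above.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Join an instruction, worked examples, and the new input into one prompt."""
    lines = [instruction, ""]
    for informal, formal in examples:
        lines.append(f"{informal} → {formal}")
    # Leave the last output empty so the model completes the pattern.
    lines.append(f"{query} →")
    return "\n".join(lines)


examples = [
    ("What's up? Are you free later?",
     "I hope this message finds you well. Would you be available later today?"),
    ("Hey, can you send me that report soon?",
     "Could you please send me the report at your earliest convenience?"),
]

prompt = build_few_shot_prompt(
    "Convert the sentence to a formal tone as in the two given examples:",
    examples,
    "Can you help me with this task?",
)
print(prompt)
```

Adding or removing tuples in `examples` switches between one-shot and few-shot prompting without changing the rest of the code.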

Chain-of-thought prompting

This strategy encourages step-by-step reasoning when solving complex tasks. The prompt asks the model to perform and show the necessary intermediate calculations or logic, which often leads to more accurate final results. It is well suited to logical reasoning, mathematical problems, and breaking down complex processes.

‘Solve this mathematical problem step-by-step. The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have?’

‘Explain step-by-step how you would build a recommendation system.’
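For the apple problem, it helps to know which intermediate steps a good answer should show, so that you can check the model's reasoning as well as its final number. The sketch below wraps a question in a step-by-step instruction and then works through the arithmetic; the helper name `add_step_by_step` and the exact wording of the instruction are illustrative assumptions.

```python
def add_step_by_step(question):
    """Prefix a question with an explicit request to show intermediate steps."""
    return "Solve this problem step-by-step, showing each intermediate result. " + question


question = (
    "The cafeteria had 23 apples. If they used 20 to make lunch "
    "and bought 6 more, how many apples do they have?"
)
print(add_step_by_step(question))

# The intermediate steps a good answer should contain:
after_lunch = 23 - 20          # apples left after making lunch
final_count = after_lunch + 6  # apples after buying more
print(after_lunch, final_count)
```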

Role-based prompting

Here we set a specific role or context for the model to follow, which tailors its tone, expertise and response style and can improve the quality of the response.

‘You are a 3rd grade English teacher. Explain the difference between past simple and past perfect.’

‘You are a very experienced doctor. Explain the symptoms of flu.’
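In a chatbot you simply type the role as the first sentence of the prompt. Through an API, the role is often placed in a separate message. The sketch below assumes the `system`/`user` message roles commonly used by chat-style APIs; the helper name `with_role` is hypothetical and individual providers may structure this differently.

```python
def with_role(role_description, task):
    """Return a message list in which a system message sets the persona."""
    return [
        {"role": "system", "content": f"You are {role_description}."},
        {"role": "user", "content": task},
    ]


messages = with_role(
    "a 3rd grade English teacher",
    "Explain the difference between past simple and past perfect.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the role separate from the task makes it easy to reuse the same task with different personas and compare the responses.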

6.2.4. Prompt Structure Elements

While LLM prompting is not a deterministic process, and answers to the same prompt may vary, there are established practices for organising a prompt that help you obtain relevant results more efficiently.

We have seen that the way a prompt is organised has an impact on the output produced. While you may be able to throw all the information together and still obtain a usable result, following a clear structure helps the AI model process your request. Prompt engineering offers many specific ways to structure prompts, but they all involve breaking your request down into the following five components:

Goal: Clearly defines the task

This is the core instruction that tells the AI what to do. It should be specific and unambiguous in order to improve the quality of the response.

Tell me about '1984'. → (Too vague; unclear whether you want a summary of the book, its themes, or its historical context) → Summarize the main arguments of the book '1984' in 200 words.

Framing: Provides details needed for context

This section provides extra background or constraints that help the AI tailor its response.

Explain quantum mechanics. → (No clarity on assumed knowledge level or intended audience) → Provide an overview of quantum mechanics, assuming the reader has a high school-level understanding of physics.

Examples/Reference: Provides context from existing materials

This part includes external references, sources or specific examples that the AI should consider when generating its response.

Write a product description for a smartwatch. → (No reference for tone or detail level) → Write a product description for a smartwatch, similar in style to this example: 'The UltraFit Pro is a sleek, waterproof fitness tracker with a 10-day battery life and heart-rate monitoring capabilities.'

Persona: Assigns the AI a role

By instructing the AI to adopt a specific perspective or persona, such as a professor, journalist, or software engineer, the response can become more relevant and appropriate to your needs.

Explain cognitive dissonance. → (No assigned role, leading to a generic response) → Act as a university professor in psychology and explain the effects of cognitive dissonance with references to academic studies.

Format: Defines output style

This section specifies how the response should be structured or styled, directing the AI to generate content in a specific manner rather than as a generic response.

Tell me how to bake a chocolate cake. → (No clarity on structured steps) → Provide a step-by-step guide on how to bake a chocolate cake, formatted as a numbered list.
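The five components can be kept as separate fields and joined only when the prompt is sent, which makes it easy to see which ones a given prompt is missing. The sketch below is one possible arrangement: the class name `PromptParts`, the order in which the components are joined, and the sample framing and persona sentences are all illustrative assumptions rather than a prescribed template.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """Holds the five prompt components; only the goal is required."""

    goal: str
    framing: str = ""
    examples: str = ""
    persona: str = ""
    output_format: str = ""

    def render(self):
        """Combine the non-empty components, persona first and format last."""
        ordered = [self.persona, self.goal, self.framing, self.examples, self.output_format]
        return "\n".join(part for part in ordered if part)


parts = PromptParts(
    goal="Provide a guide on how to bake a chocolate cake.",
    framing="Assume the reader has never baked before.",  # invented for illustration
    persona="Act as a pastry chef.",                       # invented for illustration
    output_format="Format the answer as a numbered list.",
)
print(parts.render())
```

Components left empty, such as `examples` here, are simply skipped, which reflects the point that not every element has to appear in every prompt.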

6.2.5. LLMs in coding

LLMs have become powerful tools for coding assistance, providing support for both beginners and experienced developers. However, to get the most out of them, it is important to know what these AI models are capable of.

This section explores three of the most useful ways in which LLMs can support coding: explaining code, learning new functions, and debugging. Used correctly, they can make your work more efficient.

Code explanation

One of the most common uses of LLMs in coding is explaining code. Whether you’re a beginner or an experienced programmer, trying to understand unfamiliar code can be challenging. By providing a prompt that contains a code snippet or a specific block of code, users can ask how it works, what it does, or for a step-by-step breakdown, and LLMs can provide an immediate and understandable explanation.

“What does this line of code do? Tell me its value.”

    squares = [x**2 for x in range(1, 6)]

Answer: “This list comprehension generates a list of squares of numbers from 1 to 5. The result is [1, 4, 9, 16, 25].”

“Explain step-by-step how this function calculates the Fibonacci sequence.”

    def fibonacci(n):
        if n <= 1:
            return n
        else:
            return fibonacci(n-1) + fibonacci(n-2)

Answer: “Step 1: Function call … Step 2: Base Case Check … Step 3: Recursive Case … Step 4: Recursive breakdown of fibonacci(n) … Step 5: Base Case Reached … Step 6: Calculating Fibonacci Values … Step 7: Final Result”
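An explanation from an LLM can be wrong, so it is worth running the snippet yourself and comparing the result with what you were told. The short check below runs the two examples above; the choice to print the first eight Fibonacci values is only for illustration.

```python
# The list comprehension from the first example.
squares = [x**2 for x in range(1, 6)]
print(squares)


def fibonacci(n):
    """Return the n-th Fibonacci number using plain recursion."""
    if n <= 1:
        return n  # base cases: fibonacci(0) = 0 and fibonacci(1) = 1
    return fibonacci(n - 1) + fibonacci(n - 2)


# Print the first eight values to compare with the step-by-step explanation.
print([fibonacci(i) for i in range(8)])
```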

Learning new functions

When working with programming libraries, especially large ones, it can be difficult to remember all the functions and how they work. Asking an LLM to explain a function from a library can help you understand its purpose, arguments, outputs, and how you might apply it in your code.

The advantage of LLMs here is that they are not limited to what the documentation of Python or of the library itself offers; they can also answer more specific questions about how a function is used.

"Explain the pandas.DataFrame.groupby() function. What are its arguments, what does it return, and when should I use it?"

"Compare the numpy.array() function and numpy.asarray(). When should I use one over the other?"

"What happens if I set the usecols parameter of pandas.read_csv() to a list of selected column names? How does it affect the columns read from the CSV file?"

You can also ask an LLM whether a function that performs a desired task exists within a specific package, helping you make the most of existing packages.

“Is there a function in pandas that can find the highest number in a dataframe?”

“I need to perform (task A), does numpy have a function for this?”

Furthermore, LLMs can assist you in choosing the best method or function for a given task, especially when multiple approaches are possible. This includes comparing across different libraries.

“I have a dataset composed of 1 million rows and 20 columns. What are the different ways to calculate the mean of a specific attribute using NumPy and pandas? Which will be faster?”

“I want to create a scatter plot and add a regression line. Should I use matplotlib or seaborn?”

Debugging

A significant aspect of programming is the process of debugging, and LLMs can be immensely helpful as a debugging tool. They can help not only by spotting issues in your code but also by providing insights into potential causes and solutions.

A great way to use LLMs for debugging is to get quick help when your code raises an error you have not encountered before. With a straightforward explanation of the issue, you are more likely to find the cause quickly the next time you encounter the same type of error.

"I’m getting this error when running my code: IndexError: list index out of range. What might be causing this?"

    numbers = [1, 2, 3]
    print(numbers[3])

"My function is not returning the correct value. It seems to be stuck in an infinite loop. How can I fix this?"

    def countdown(n):
        while n > 0:
            print(n)
            countdown(n)
        print("Done!")

    countdown(3)

"How do I efficiently debug a function with many if statements?"

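For reference, the sketch below shows one possible fix for each of the two code examples in the debugging prompts above, of the kind an LLM might suggest. These are not the only valid fixes: reading the last element with index `-1` and replacing the recursive call with `n -= 1` are assumptions about what the original code was meant to do.

```python
numbers = [1, 2, 3]

# Valid indices run from 0 to len(numbers) - 1, so index 3 does not exist.
try:
    print(numbers[3])
except IndexError as error:
    print(f"IndexError: {error}")

# One way to read the last element without going out of range.
print(numbers[-1])


def countdown(n):
    """Count down from n to 1, then report completion."""
    while n > 0:
        print(n)
        n -= 1  # decrease n so that the loop condition eventually fails
    print("Done!")


countdown(3)
```

In the original `countdown`, `n` never changes inside the loop and the function calls itself with the same value, so the condition `n > 0` stays true forever.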