Scalar Diffraction Optics#

Introduction#

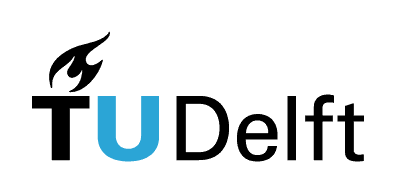

In this chapter we will study how light propagates as a wave. In the study of the double-slit experiment we concluded from the interference pattern observed on a screen that light is a wave. To demonstrate more convincingly that light is indeed a wave, we require a detailed quantitative model of the propagation of light, which gives experimentally verifiable predictions.

But a precise description of the propagation of light is not only important for fundamental science, it also has many practical applications. For example, if a sample must be analysed by illuminating it and measuring the scattered light, the fact that the detected light has not only been affected by the sample, but by both the sample and propagation has to be taken into account. Another example is lithography. If a pattern has to be printed onto a substrate using a mask that is illuminated and there is a certain distance between the mask and the photoresist, the light which reaches the resist does not have the exact shape of the mask due to propagation effects. Thus, the mask needs to be designed to compensate for these effects.

Fig. 85 A quantitative model of the propagation of light is required to predict the properties of propagation and to apply it in sample analyses and lithography.#

In section.scalvecwave we have derived that in homogeneous matter (i.e. the permittivity is constant), every component \(U\) of a time-harmonic electromagnetic field satisfies the scalar Helmholtz equation (45):

where \(k=\omega\sqrt{\epsilon \mu_0}\) is the wave number of the light in matter with permittivity \(\epsilon\) and refractive index \(n=\sqrt{\epsilon/\epsilon_0}\).

When the refractive index is not constant, Maxwell’s equations are no longer equivalent to the wave equation for the individual electromagnetic field components and there is then coupling between the components due to the curl operators in Maxwell’s equation. When the variation of the refractive index is slow on the scale of the wavelength, the scalar wave equation may still be a good approximation, but for structures that vary on the scale of the wavelength (i.e. on the scale of ten microns or less), the scalar wave equation is not sufficiently accurate.

Propagation of light through a homogenous medium#

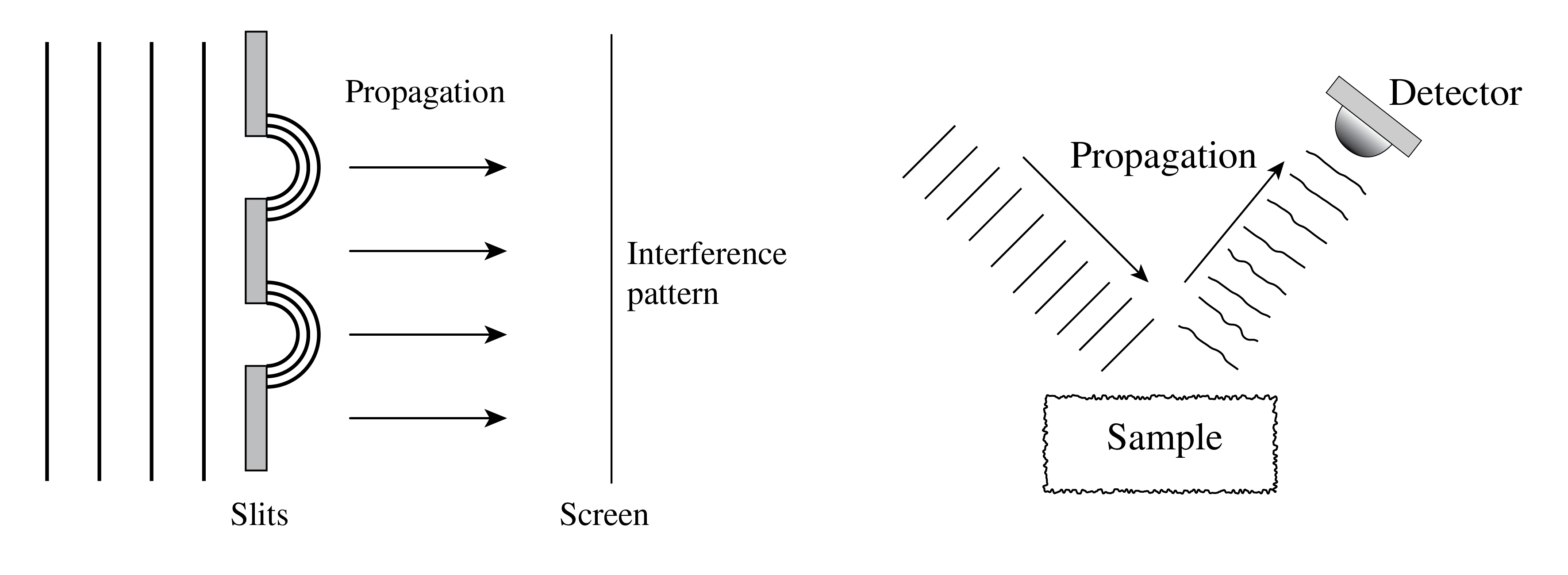

We will describe two equivalent methods to compute the propagation of the field through homogeneous matter, namely the angular spectrum method and the Rayleigh-Sommerfeld diffraction formula. Our goal is to derive the field in some point \((x,y,z)\) with \(z>0\), given the field in the plane \(z=0\), as is illustrated in Fig. 86. Although both methods in the end describe the same, they give physical insight into different aspects of propagation.

Angular Spectrum Method#

Fig. 86 Given the field \(U(x,y,0)\), we want to find \(U\) in a point \((x,y,z)\) with \(z>0\). It is assumed that the field propagates in the positive \(z\)-direction, which means that all sources are in the region \(z<0\).#

The inverse Fourier transform implies:

Important properties of the Fourier transform are listed in The Fourier Transform. By defining \(k_x=2\pi \xi\), \(k_y=2\pi \eta\), (372) can be written as

The variables in the Fourier plane: \((\xi,\eta)\) and \((k_x, k_y)\) are called spatial frequencies.

Equation (373) says that we can write \(U_0(x,y)=U(x,y,z=0)\) as an integral (a sum) of plane waves[1] with wave vector \(\mathbf{k}=(k_x,k_y, k_z)^T\), each with its own weight (i.e. complex amplitude) \({\cal F}(U_0)\left(\frac{k_x}{2\pi},\frac{k_y}{2\pi}\right)\). We know how each plane wave with complex amplitude \({\cal F}(U_0)\left(\frac{k_x}{2\pi},\frac{k_y}{2\pi}\right)\) and wave vector \(\mathbf{k}=(k_x,k_y,k_z)^T\) propagates over a distance \(z>0\)

Therefore, the field \(U(x,y,z)\) in the plane \(z\) (for some \(z>0\)) is given by

where

with \(\lambda\) the wavelength of the light as measured in the material (hence, \(\lambda=\lambda_0/n\), with \(\lambda_0\) the wavelength in vacuum). The sign in front of the square root in (375) could in principle be chosen negative: one would then also obtain a solution of the Helmholtz equation. The choice of the sign of \(k_z\) is determined by the direction in which the light propagates, which in turn depends on the location of the sources and on the convention chosen for the time dependance. We have to choose here the + sign in front of the square root because the sources are in \(z<0\) and the time dependence of time-harmonic fields is (as always in this book) given by \(e^{-i\omega t}\) with \(\omega >0\).

Eq. (374) can be written alternatively as

where now \(k_z\) has to be considered as a function of \((\xi, \eta)\):

Note that one can interpret this as a diagonalisation of the propagation operator, as explained in Basis transformations.

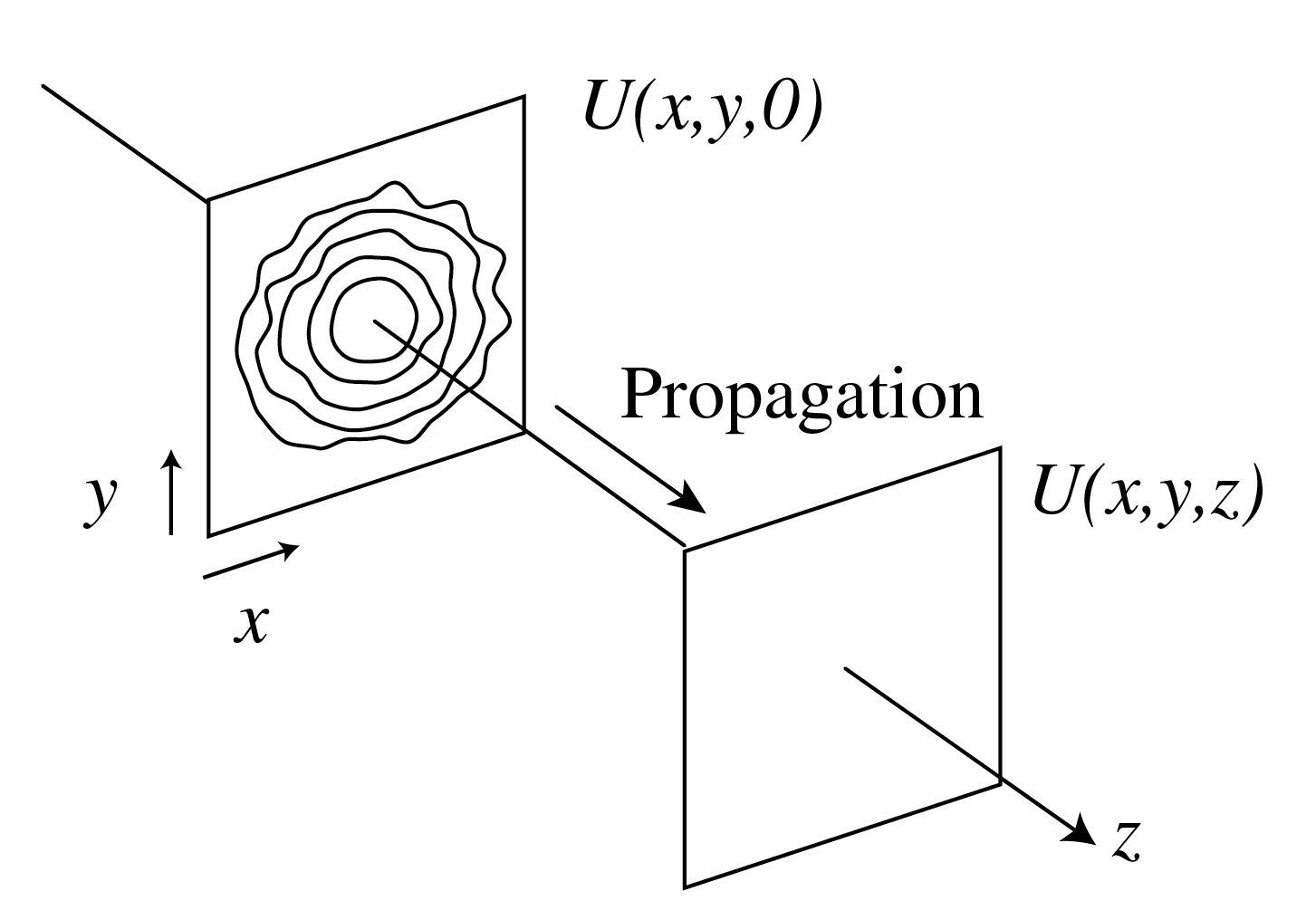

We can observe something interesting: if \(k_x^2+k_y^2 > \left(\frac{2\pi }{\lambda}\right)^2\), then \(k_z\) becomes imaginary, and \(\exp(i k_z z)\) decays exponentially for increasing \(z\):

These exponentially decaying waves are called evanescent in the positive \(z\)-direction. We have met evanescent waves already in the context of total internal reflection discussed in Total Internal Reflection and Evanescent Waves. The physical consequences of evanescent waves in the angular spectrum decomposition will be explained in Intuition for the Spatial Fourier Transform in Optics.

The waves for which \(k_z\) is real have constant amplitude: only their phase changes due to propagation. These waves therefore are called propagating waves.

Fig. 87 The spatial frequencies \(k_x\), \(k_y\) of the plane waves in the angular spectrum of a time-harmonic field which propagates in the \(z\)-direction. There are two types of waves: the propagating waves with spatial frequencies inside the circle \(\sqrt{k_x^2+k_y^2}<k=2\pi/\lambda \) and which have phase depending on the propagation distance \(z\) but constant amplitude, and the evanescent waves for which \(\sqrt{k_x^2+k_y^2}>k\) and of which the amplitude decreases exponentially during propagation.#

Remark. In homogeneous space, the scalar Helmholtz equation for every electric field component is equivalent to Maxwell’s equations and hence we may propagate each component \(E_x\), \(E_y\) and \(E_z\) individually using the angular spectrum method. If the data in the plane \(z=0\) of these field components are physically consistent, the electric field thus obtained will automatically satisfy the condition that the electric field is free of divergence, i.e.

everywhere in \(z>0\). This is equivalent to the statement that the electric vectors of the plane waves in the angular spectrum are perpendicular to their wave vectors. Alternatively, one can propagate only the \(E_x\)- and \(E_y\)-components and afterwards determine \(E_z\) from the condition that (378) must be satisfied.

Rayleigh-Sommerfeld Diffraction Integral#

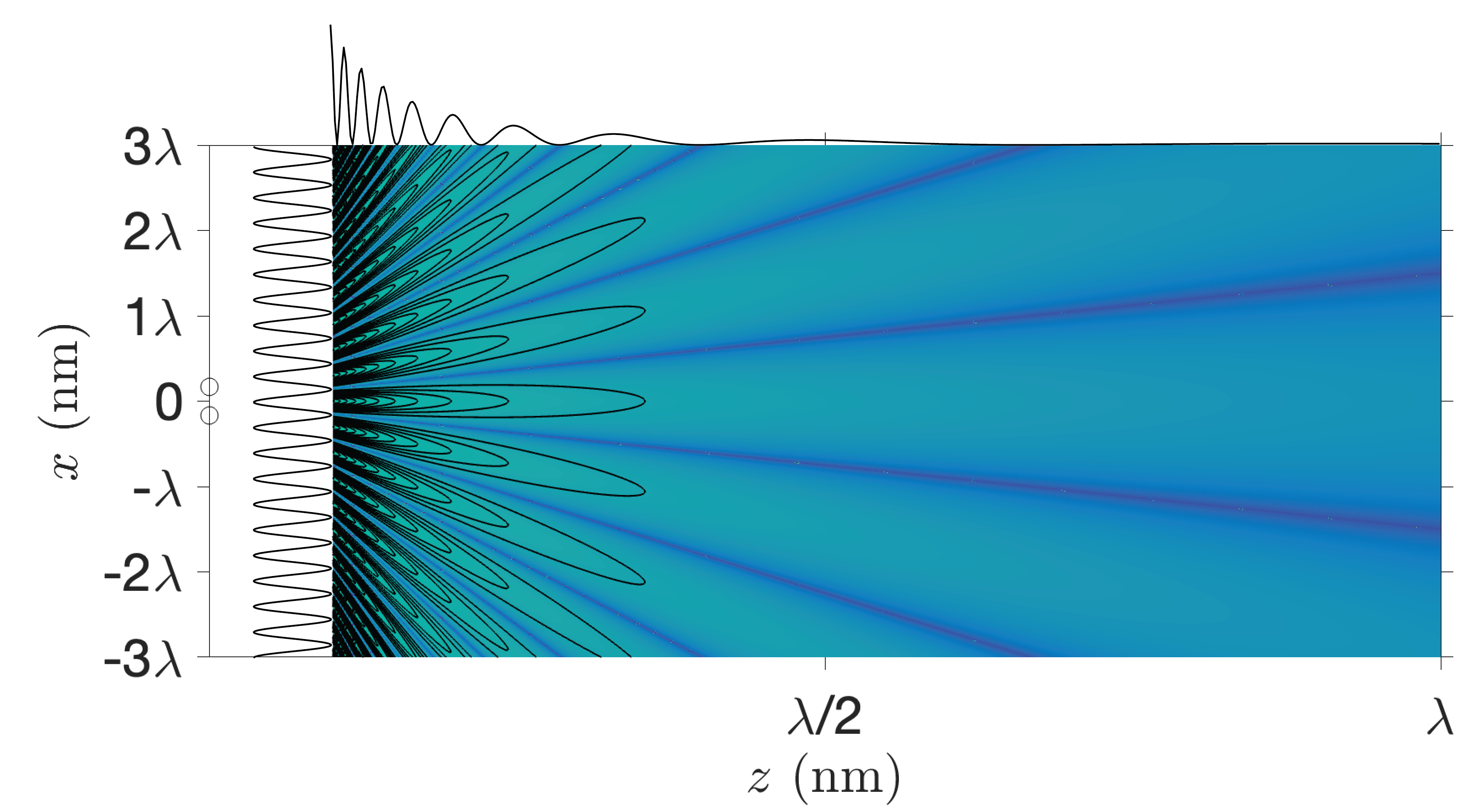

Another method to propagate a wave field is by using the Rayleigh-Sommerfeld integral. A very good approximation of this integral states that each point in the plane \(z=0\) emits spherical waves with amplitude proportional to the field in the plane \(z=0\). To find the field in a point \((x, y,z)\), we have to add the contributions from all these point sources together. This corresponds to the Huygens-Fresnel principle postulated earlier in Spatial Coherence and Young’s Experiment. Because a more rigorous derivation starting from the Helmholtz equation[2] would be rather lengthy, we will just give the final result:

Note

where we wrote again \(U_0(x,y)=U(x,y,0)\) and where

Remarks.

The formula (379) is not completely rigorous because a term that is a factor \(1/(kr)\) smaller (and which in practice therefore is very much smaller) has been omitted.

In (379) there is an additional factor \(z/r\) that has been omitted in the time-harmonic spherical wave as given in (52) and at the right-hand side of (321). This factor means that the amplitudes of the spherical waves in the Rayleigh-Sommerfeld diffraction integral depend on direction (although their wave fronts are spherical), the amplitudes being largest in the forward direction.

The angular spectrum method amounts to a multiplication by \(\exp(i z k_z)\) in Fourier space, while the Rayleigh-Sommerfeld integral is a convolution with respect to \((x,y)\). It is a property of the Fourier transform that a multiplication in Fourier space corresponds to a convolution in real space and vice versa. Indeed a mathematical result called Weyl’s identity implies that the rigorous version of (379) and the plane wave expansion (i.e. the angular spectrum method) give identical results.

Intuition for the Spatial Fourier Transform in Optics#

Since spatial Fourier transformations play an important role in our discussion of the propagation of light, it is important to understand them not just mathematically, but also intuitively.

What happens when an object is illuminated and the reflected or transmitted light is detected at some distance from the object? Let us look at transmission for example. When the object is much larger than the wavelength, a transmission function \(\tau(x,y)\) is often defined and the field transmitted by the object is then assumed to be simply the product of the incident field and the function \(\tau(x,y)\). For example, for a hole in a metallic screen with diameter large compared to the wavelength, the transmission function would be 1 inside the hole and 0 outside. However, if the object has features of the size of the order of the wavelength, this simple model of multiplying by a transmission function breaks down and the transmitted field must instead be determined by solving Maxwell’s equations. This is not easy, but some software packages can do it.

Now suppose that the transmitted electric field has been obtained in a plane \(z=0\) very close to the object (a distance within a fraction of a wavelength). This field is called the transmitted near field and it may have been obtained by simply multiplying the incident field with a transmission function \(\tau(x,y)\) or by solving Maxwell’s equations. This transmitted near field is a kind of footprint of the object. But it should be clear that, although it is quite common in optics to speak in terms of “imaging an object”, strictly speaking we do not image an object as such, but we image the transmitted or reflected near fields which are a kind of copy of the object. After the transmitted near field has been obtained, we apply the angular spectrum method to propagate the individual plane waves through homogeneous matter (e.g. air) from the object to the detector plane or to an optical element like a lens.

Let \(U_0(x,y)=U(x,y,0)\) be a component of the transmitted near field. The first step is to Fourier transform it, by which the field component is decomposed in plane waves. To each plane wave, characterised by the wave numbers \(k_x\) and \(k_y\), the Fourier transform assigns a complex amplitude \(\mathcal{F}(U_0)\left(\frac{k_x}{2\pi},\frac{k_y}{2\pi}\right)\), the magnitude of which indicates how important the role is which this particular wave plays in the formation of the near field. So what can be said about the object field \(U_0(x,y)\), by looking at the magnitude of its spatial Fourier transform \(|\mathcal{F}(U_0)\left(\frac{k_x}{2\pi},\frac{k_y}{2\pi}\right)|\)?

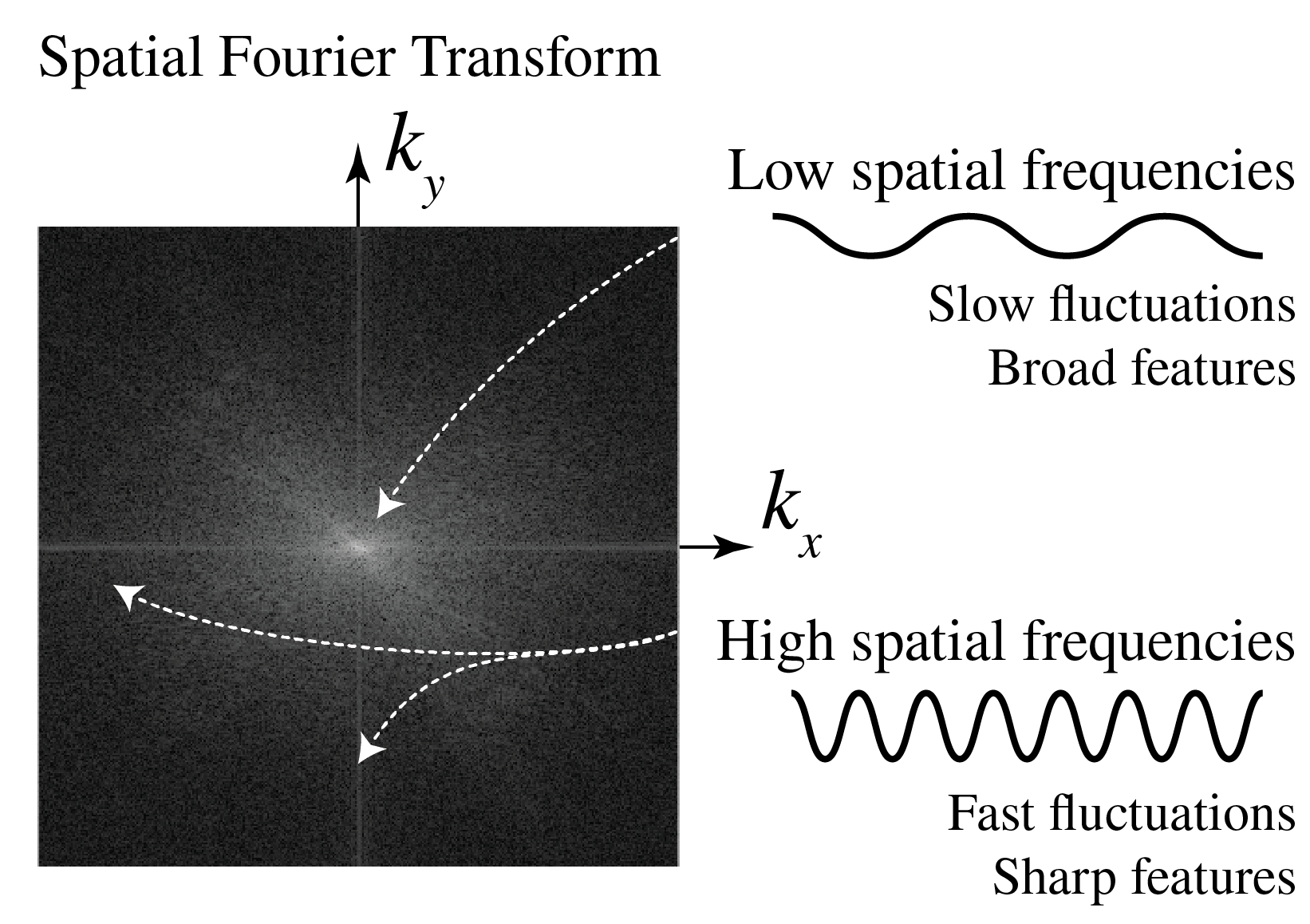

Suppose \(U_0(x,y)\) has sharp features, i.e. there are regions where \(U_0(x,y)\) varies rapidly as a function of \(x\) and \(y\). To describe these features as a combination of plane waves, these waves must also vary rapidly as a function of \(x\) and \(y\), which means that the length of their wave vectors \(\sqrt{k_x^2+k_y^2}\) must be large. Thus, the sharper the features that \(U_0(x,y)\) has, the larger we can expect \(|\mathcal{F}(U_0)\left(\frac{k_x}{2\pi},\frac{k_y}{2\pi}\right)|\) to be for large \(\sqrt{k_x^2+k_y^2}\), i.e. high spatial frequencies can be expected to have large amplitude. Similarly, the slowly varying, broad features of \(U_0(x,y)\) are described by slowly fluctuating waves, i.e. by \(\mathcal{F}(U_0)\left(\frac{k_x}{2\pi},\frac{k_y}{2\pi}\right)\) for small \(\sqrt{k_x^2+k_y^2}\), i.e. for low spatial frequencies. This is illustrated in Fig. 88.

To investigate these concepts further we choose a certain field, take its Fourier transform, remove the higher spatial frequencies and then invert the Fourier transform. We then expect that the resulting field has lost its sharp features and only retains its broad features, i.e. the image is blurred. Conversely, if we remove the lower spatial frequencies but retain the higher, then the result will only show its sharp features, i.e. its contours. These effects are shown in Fig. 90. Recall that when \(k_x^2+k_y^2 > \left(\frac{2\pi}{\lambda}\right)^2\), the plane wave decays exponentially as the field propagates. Because by propagation through homogeneous space, the information contained in the high spatial frequencies corresponding to evanescent waves is lost (only exponentially small amplitudes of the evanescent waves remain), perfect imaging is impossible, no matter how well-designed an optical system is.

Note

Propagation of light leads to irrecoverable loss of resolution.

It is this fact that motivates near-field microscopy, which tries to detect these evanescent waves by scanning close to the sample, thus obtaining subwavelength resolution.

So we have seen how we can guess properties of some object field \(U_0(x,y)\) given the amplitude of its spatial Fourier transform \(|\mathcal{F}(U_0)\left(\frac{k_x}{2\pi},\frac{k_y}{2\pi}\right)|\). But what about the phase of \(\mathcal{F}(U_0)\left(\frac{k_x}{2\pi},\frac{k_y}{2\pi}\right)\)? Although one cannot really guess properties of \(U_0(x,y)\) by looking at the phase of \(\mathcal{F}(U_0)\left(\frac{k_x}{2\pi},\frac{k_y}{2\pi}\right)\) the same way as we can by looking at its amplitude, it is in fact the phase that plays a larger role in defining \(U_0(x,y)\). This is illustrated in Fig. 92: if the amplitude information of \(\mathcal{F}(U_0)\left(\frac{k_x}{2\pi},\frac{k_y}{2\pi}\right)\) is removed, features of the original \(U_0(x,y)\) may still be retrieved. However, if we only know the amplitude \(|\mathcal{F}(U_0)(k_x,k_y)|\) but not the phase, then the original object is completely lost. Thus, the phase of a field \(\mathcal{F}(U_0)\) is very important, arguably often more important than its amplitude. However, we cannot measure the phase of a field directly, only its intensity \(I=|\mathcal{F}(U_0)|^2\) from which we can calculate the amplitude \(|\mathcal{F}(U_0)|\). It is this fact that makes phase retrieval an entire field of study on its own: how can we find the phase of a field, given that we can only perform intensity measurements? This question is related to a new field of optics called “lensless imaging”, where amplitudes and phases are retrieved from intensity measurements and the image is reconstructed computationally. Interesting as this topic may be, we will not treat it in these notes and refer instead to master courses in optics [3].

Remark. The importance of the phase for the field can also be seen by looking at the plane wave expansion (374). We have seen that the field in a plane \(z=\text{constant}\) can be obtained by propagating the plane waves by multiplying their amplitudes by the phase factors \(\exp(i z k_z)\), which depends on the propagation distance \(z\). If one leaves the evanescent waves out of consideration (since after some distance they hardly contribute to the field anyway), it follows that only the phases of the plane waves change upon propagation, while their amplitudes (the moduli of their complex amplitudes) do not change. Yet, depending on the propagation distance \(z\), widely differing light patterns are obtained (see e.g. Fig. 96).

Fig. 88 A qualitative interpretation of spatial Fourier transforms. The low spatial frequencies (i.e. small \(\sqrt{k_x^2+k_y^2}\)) represent slow fluctuations, and therefore contribute to the broad features of the real-space object. The high spatial frequencies (i.e. large \(\sqrt{k_x^2+k_y^2}\)) fluctuate rapidly, and can therefore form sharp features in the real-space object.#

Fig. 89 (a) Removing the high spatial frequencies#

Fig. 90 (b) Removing the low spatial frequencies#

Demonstration of the roles of different spatial frequencies. By removing the high spatial frequencies, only the broad features of the image remain and resolution s lost. If the low spatial frequencies are removed, only the sharp features (i.e. the contours) remain.

Fig. 91 (a) Removing the amplitude information by setting the amplitude of propagating and evanescent waves to 1 and 0, respectively.#

Fig. 92 (b) Removing the phase information by setting the phase equal to 0.#

Demonstration of the role of the phase of the spatial Fourier transform. If the amplitude information is removed, but phase information is kept, some features of the original image are still recognizable. However, if the phase information is removed but amplitude information is kept, the original image is completely lost.

Another aspect of the Fourier transform is the uncertainty principle. It states that many waves of different frequencies have to be added to get a function that is confined to a small space[4]. Stated differently, if \(U(x,y)\) is confined to a very small region, then \(\mathcal{F}(U)(k_x,k_y)\) must be very spread out. This can also be illustrated by the scaling property of the Fourier transform:

which simply states that the more \(h(x)\) is squeezed by increasing \(a\), the more its Fourier transform \(\mathcal{F}(h)\) spreads out. This principle is illustrated in Fig. 93. The uncertainty principle is familiar from quantum physics where it is stated that a particle cannot have both a definite momentum and a definite position. In fact, this is just one particular manifestation of the uncertainty principle just described. A quantum state \(\ket{\psi}\) can be described in the position basis \(\psi_{x}(x)\) as well as in the momentum basis \(\psi_p(p)\). The basis transformation that links these two expressions is the Fourier transform

Hence, the two are obviously subject to the uncertainty principle! In fact, any two quantum observables which are related by a Fourier transform (also called conjugate variables), such as position and momentum, obey this uncertainty relation. The uncertainty relation states:

Note

If a function \(f(x)\) has width \(\Delta x\), its Fourier transform has a width \(\Delta k_x \approx 2\pi/ \Delta x\).

Since after propagation over a distance \(z\), the evanescent waves do not contribute to the Fourier transform of the field, it follows that this Fourier transform has maximum width \(\Delta k_x = k\). By the uncertainty principle it follows that after propagation, the minimum width of the field is \(\Delta x, \Delta y \approx 2\pi/k=\lambda\).

Note

The minimum feature size of a field after propagation is of the order of the wavelength.

This poses a fundamental limit to resolution given by the wavelength of the light.

Fig. 93 Demonstration of the uncertainty principle. The more confined \(U(x,y)\) is, the larger the spread of \(\mathcal{F}(U)\left(\frac{k_x}{2\pi},\frac{k_y}{2\pi}\right)\).#

Fresnel and Fraunhofer Approximations#

The Fresnel and Fraunhofer approximation are two approximations of the Rayleigh-Sommerfeld integral (379). The approximations are accurate provided the propagation distance \(z\) is sufficiently large. In the Fraunhofer approximation, \(z\) has to be quite large, i.e. larger than for the Fresnel approximation, which is already accurate for typical distances occuring in optical systems. Putting it differently: in order of most accurate to least accurate (i.e. only valid for large propagation distances), the diffraction integrals would rank as:

Note

[Most accurate] Rayleigh-Sommerfeld \(\rightarrow\) Fresnel \(\rightarrow\) Fraunhofer [Least accurate].

Fresnel Approximation#

We assume that \(z\) in Eq. (379) is so large that in the denominator we can approximate \(r\) by \(z\):

The reason why we can not apply the same approximation for \(r\) in the exponent, is that in the exponent \(r\) is multiplied by \(k= 2\pi /\lambda\), which is a very large number at optical frequencies, so any error introduced by approximating \(r\) would be drastically magnified by multiplying by \(k\) which can easily lead to a completely different value of \(\exp(ikr)=\cos(kr)+i\sin(kr)\). To approximate \(r\) in \(\exp(ikr)\) we must be more careful and apply a Taylor expansion. Recall that

We know that the Taylor expansion around \(s=0\) implies:

Since we assume that \(z\) is large, \(\frac{(x-x')^2+(y-y')^2}{z^2}\) is small, so we have

With this approximation, we arrive at the Fresnel diffraction integral, which can be written in the following equivalent forms:

Especially the last expression is interesting, because it shows that

Note

The Fresnel approximation is proportional to the Fourier transform of the field \(U_0(x',y')\) multiplied by the Fresnel propagator \(\exp\left(\frac{ik(x'^2+y'^2)}{2z}\right)\).

Note that this propagator depends on the distance of propagation \(z\).

Remark. By Fourier transforming (385), one can get the plane wave amplitudes of the Fresnel approximation. It turns out that these amplitudes are equal to \({\cal F}(U_0)\) multiplied by a phase factor which is obtained from a paraxial approximation of the exact phase factor \(\exp(i z k_z)\), i.e. \(k_z\) is approximated by a quadratic function of \(k_x, k_y\). Therefore the Fresnel approximation is also called the paraxial approximation. In fact, it can be shown that the Fresnel diffraction integral is a solution of the paraxial wave equation and conversely, that every solution of the paraxial wave equation can be written as a Fresnel diffraction integral[5].

Fraunhofer Approximation#

To obtain the Fraunhofer approximation, we will make one further approximation to \(r\) in \(\exp(ikr)\) in addition to the Fresnel approximation:

Hence we have omitted the quadratic terms \(x'^2+y'^2\), and in comparison with the Fresnel diffraction integral, we omit the factor \( \exp\left[ik(x'^2+y'^2)/(2z)\right]\) to obtain the Fraunhofer diffraction integral:

This leads to the following important observation:

Note

The Fraunhofer far field of \(U_0(x',y')\) is proportional to the Fourier transform of \(U_0\).

Note that to obtain the Fraunhofer field in \((x,y,z)\), the Fourier transform has to be evaluated at spatial frequencies \(x/(\lambda z)\) and \(y/(\lambda z)\). These spatial frequencies scale with \(1/z\) and the overall field \(U(x,y,z)\) is proportional to \(1/z\). This implies that when \(z\) is increased the field pattern spreads out without changing its shape while its amplitude goes down so that the total energy is preserved. Stated differently, apart from the factor \(1/z\) in front of the integral, the Fraunhofer field depends on the angles \(x/z\) and \(y/z\). Therefore for large propagation distances \(z\) for which the Fraunhofer approximation is accurate, the field becomes broader (it diverges) when the propagation distance increases.

Note

Eventually, for sufficiently large propagation distances, i.e. in the Fraunhofer limit, light always spreads out without change of shape of the light distribution.

Remarks.

The Fresnel approximation is, like the Fraunhofer approximation, a Fourier transform of the field in the starting plane (\(z=0\)), evaluated in spatial frequencies which depend on the point of observation:

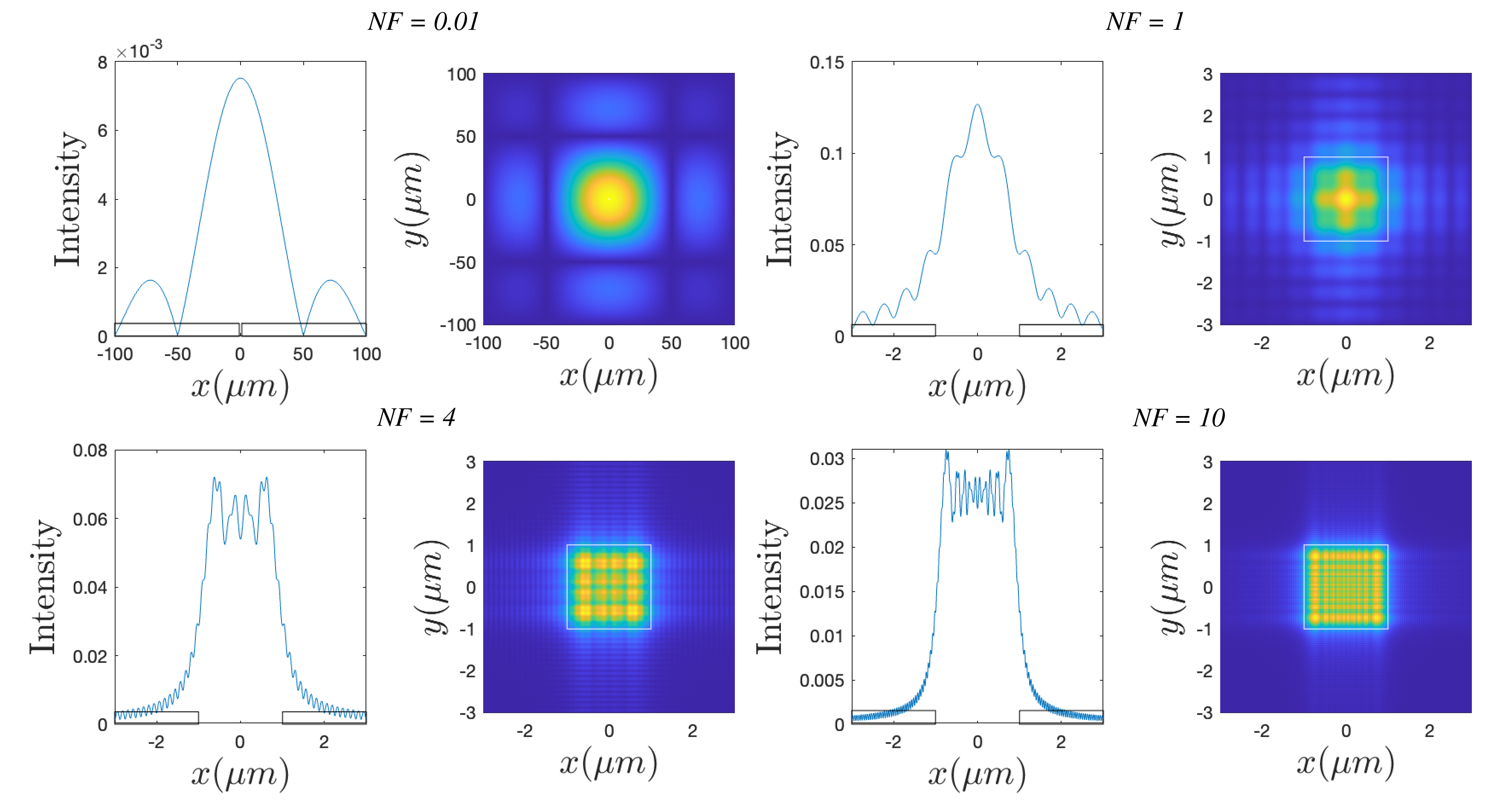

However, in contrast to the Fraunhofer approximation, the Fresnel approximation depends additionally in a different way on the propagation distance \(z\), namely through the exponent of the Fresnel propagator in the integrand. Therefore the Fresnel approximation gives quite diverse patterns depending on the propagation distance \(z\), as is shown in Fig. 96.

In the Rayleigh-Sommerfeld diffraction integral, the field is written as a superposition of spherical waves. In the Fresnel approximation the spherical waves are approximated by parabolic waves. Finally, in the Fraunhofer approximation the propagation distance is so large that the parabolic waves can be approximated by plane waves.

Let \(f_{a,b}(x,y)=f(x-a,y-b)\) be the function obtained from \(f\) by translation. From the general property of the Fourier transform:

it follows that when the field \(U_0\) is translated, the intensity in the Fraunhofer far field is not changed. In contrast, due to the additional quadratic phase factor in the integrand of the Fresnel integral, the intensity of the Fresnel field in general changes when \(U_0\) is translated.

Suppose that \(U_0\) is the field immediately behind an aperture \({\cal A}\) with diameter \(D\) in an opaque screen. It can then be shown that points \((x,y,z)\) of observation, for which the Fresnel and Fraunhofer diffraction integrals are sufficiently accurate, satisfy:

The Fresnel number is defined by

When \(N_F < 0.1\) the Fraunhofer approximation is accurate, while for \(N_F>0.1\) it is better to use the Fresnel approximation (see Fig. 96). Suppose that \(D= 1\text{ mm}\) and the wavelength is that of green light: \(\lambda=550 \text{ nm}\), then Fraunhofer’s approximation is accurate if \(z> 10 \text{ m}\).

The points of observation where the Fraunhofer approximation can be used must in any case satisfy:

When \(x/z>1\), the spatial frequency \(k_x = \frac{2 \pi x}{z \lambda} > k\) associated with this point corresponds to an evanescent wave. An evanescent wave obviously cannot contribute to the Fraunhofer far field because it exponentially decreases with distance \(z\). In practice the Fresnel and Fraunhofer approximations are used only when \(x/z\) and \(y/z\) are smaller than 0.3.

In any expression for an optical field, one may always omit factors of constant phase, i.e. an overall phase which does not depend on position. If one is only interested in the field in certain planes \(z=\text{constant}\), then a factor like \(\exp(ikz)\) may also be omitted. Further, in some cases also a position dependent phase factor in front of the Fresnel and Fraunhofer diffraction integrals is omitted, namely when only the intensity is of interest. In exercises it is usually mentioned that this factor may be omitted: if this is not stated, it should be retained in the formulae.

Examples of Fresnel and Fraunhofer approximations#

Fresnel approximation of the field of two point sources.

Consider two mutual coherent time-harmonic point sources in \(\mathbf{r}_s^+=(a/2,0,0)\) and \(\mathbf{r}_s^-=(-a/2,0,0)\). The fields in \(\mathbf{r}=(x,y,z)\) emitted are according to (321) proportional to

We apply the Fresnel approximation for large \(z\):

Hence,

where in the denominator we replaced \(|\mathbf{r}-\mathbf{r}_s^\pm|\) by \(z\). Note that the Fraunhofer approximation amounts to \(e^{ i k a^2/(8z)} \approx 1\) while the phase factor \(e^{i k \frac{x^2+y^2}{2z} } \) remains. The intensity on a screen \(z=\text{constant}\) of the sum of the two fields for the case that the sources have equal strength and emit in phase is:

It is seen that the intensity results from the interference of two plane waves: \(\exp[\pm i k ax/(\lambda z)]\) and is given by a cosine function (see Fig. 94). Note that for two point sources, the intensity pattern is the same in the Fresnel and the Fraunhofer approximation. However, this is special for two point sources: when more than two point sources are considered, the Fresnel and Fraunhofer patterns are different. The intensity pattern is independent of \(y\), and vanishes on lines

and has maxima on lines

for integer \(m\).

Fig. 94 Intensity pattern of two mutually coherent point sources of equal strength and emitting in phase at the wavelength \(\lambda =600\) nm from Eq. (397). The distance between the point source is 200 nm. On the top the cross-section along along the \(z\)-axis is shown.#

Fraunhofer approximation of a rectangular aperture in a screen.

Let the screen be \(z=0\) and the aperture be given by \(-a/2 < x < a/2\), \(-b/2 < y < b/2\). The transmission function \(\tau(x,y)\) is:

where

and similarly for \(1_{[-b/2, b/2]}(y)\). Let the aperture be illuminated by a perpendicular incident plane wave with unit amplitude. Then the field immediately behind the screen is:

We have

where \(\text{ sinc}(u)=\sin(u)/u\). Hence,

The Fraunhofer far field at large distance \(z\) from a rectangular aperture in mask is obtained by substituting (404) into (388).

Remarks.

The first zero along the \(x\)-direction from the centre \(x=0\) occurs for

The distance between the first two zeros along the \(x\)-axis is \(2\lambda z/a\) and is thus larger when the width along the \(x\)-direction of the aperture is smaller.

The inequalities (393) imply that when \( a< \lambda\), the far field pattern does not have any zeros as function of \(x\). When \(a\) is further decreased it becomes more and more difficult to deduce the width \(a\) from the Fraunhofer intensity. This is an illustration of the fact that information about features that are than the wavelength cannot propagate to the far field.

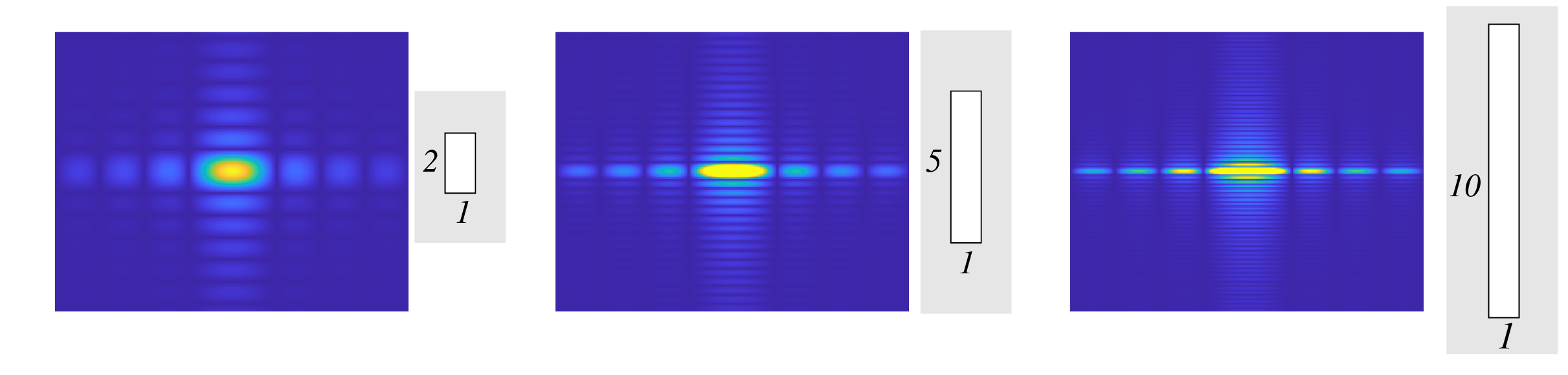

As illustrated in Fig. 95, the Fraunhofer diffraction pattern as function of diffraction angle is narrowest in the direction in which the aperture is widest.

Fig. 95 Fraunhofer diffraction pattern of a rectangular aperture in an opaque screen.Left: the width of the aperture in the \(y\)-direction is twice that in the \(x\)-direction; middle: the width in the \(y\)-direction is 5 times that in the \(x\)-direction; right: the width in the \(y\)-direction is 10 times that in the \(x\)-direction.#

Fresnel approximation of a rectangular aperture in a mask

The integral in the Fresnel approximation for the field of a rectangular aperture in a mask can be computed analytically and leads to functions that are actually called “Fresnel integrals” which can be studied using the Cornu spirals. We will not go deeper in this matter but simply show the results of the simulations in Fig. 96. The distance to the mask increases (\(N_F\) decreases), from very close to the mask at the bottom right, to further from the mask at the bottom left, to rather far from the mask in the upper right, to Fraunhofer distance in the upper left figures. Note the change in scale along the axis in the figures and the decrease of intensity with propagation distance. It is seen that the pattern changes and broadens drastically with distance from what is more or less a copy of the aperture, to a patterns that is equal to the Fourier transform of the aperture. Once the Fraunhofer approximation is accurate, a further increase of distance only results in a widening of the pattern and a decrease of overall amplitude without change of shape. In contrast, in the region where the Fresnel approximation is accurate, the shape of the pattern is seen to change a lot with distance to the mask.

Fig. 96 Diffraction patterns of a square opening in a mask with corresponding cross-sections along the \(x\)-axis, showing the transition from Fresnel to Fraunhofer approximations. The distance to the mask increases with the Fresnel number \(N_F\) from the near field pattern close to the mask in the right bottom figures to the Fraunhofer diffraction pattern in the upper left. Note the different scales along the axis in the figures.#

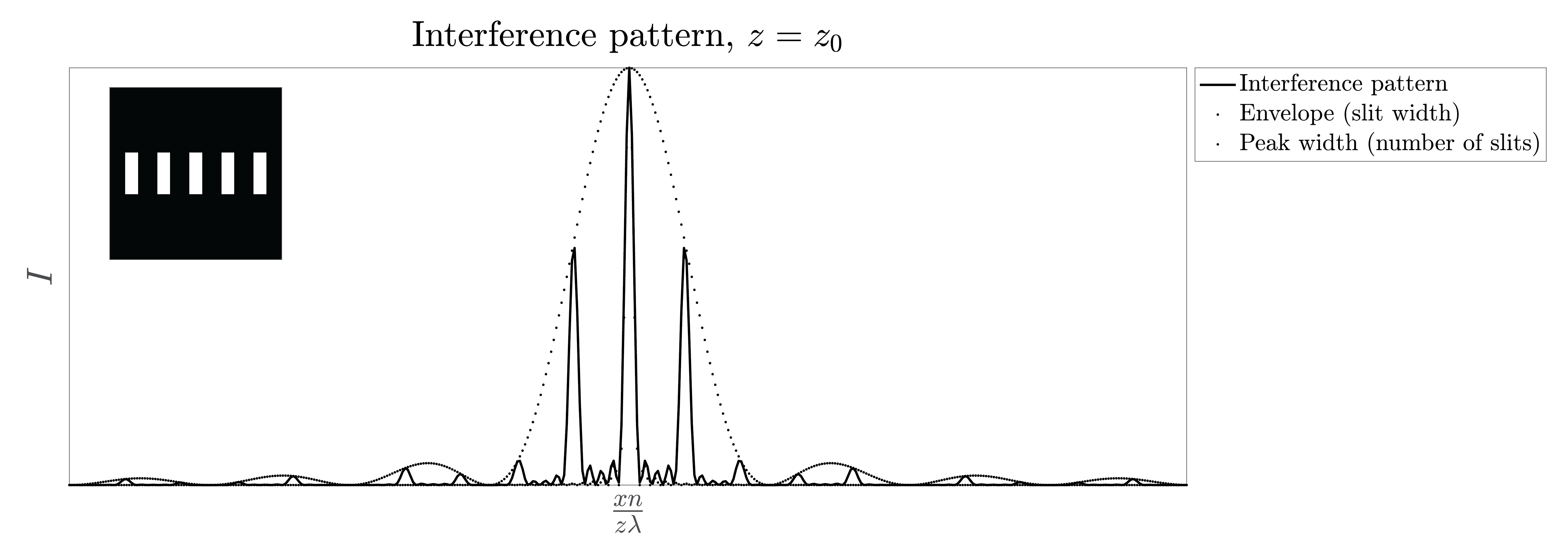

Fraunhofer approximation of a periodic array of slits

We can now predict what is the diffraction pattern of an array of slits of finite width. It follows from the Fraunhofer pattern of a single rectangular aperture that, if the sides parallel to the \(y\)-direction are very long, the Fraunhofer diffraction pattern as function of angle in the \(y\)-direction is very narrow. In Fig. 95b the Fraunhofer diffraction pattern of a rectangular aperture is shown, of which the width in the \(y\)-direction is 10 times that in the \(x\)-direction. The diffraction pattern is then strongly concentrated along the \(x\)-axis. If we only consider the Fraunhofer pattern for \(y/z=0\) while still considering it as a function of \(x/z\), it suffices to compute the Fourier transform only with respect to \(x\). The problem then becomes a diffraction problem for a one-dimensional slit.

We consider now an array of such slits of which the long sides are all parallel to the \(y\)-axis and we neglect from now on the \(y\)-variable. Suppose \(W_{\text{slit}}(x)\) is the function that describes the transmission of a single slit. Then the transmission function of \(M\) equidistant slits is

where \(p\) is the distance of neighbouring slits, i.e. \(p\) is the period of the row. If the illumination is by a perpendicular incident plane wave with unit amplitude, the transmitted near field \(U_0(x)\) is simply \(\tau(x)\). Then

where for a slit with width \(a\) we have according to (403):

There must obviously hold \(a< p\). Using

we get

The intensity of the Fraunhofer far ield is:

where \(\theta=x/z\) is the diffraction angle. The factor

is, due to the factor \(M\) under the sinus in the numerator, a fast oscillating function of \(\theta\) while \(|{\cal F}(W_{slit})(\theta/\lambda)|^2\) is a slowly varying envelope. This is a manifestation of the property of the Fourier transform that small details of a structure (e.g. the size of a single slit) cause large scale features of the far field pattern, whereas large scale properties such as the length \(M p\) of the total structure, cause quickly changing features. This is illustrated in Fig. 97.

The diffraction amplitude is maximum for angles where both the denominator and numerator of (410) vanish:

These directions are called diffraction orders and since

which follows by applying l’H^{o}pital’s rule, the intensity of the m\(^{th}\) order is

hence it is proportional to the square of the number of periods and to the squared modulus of the envelope in \(\theta_m\).

Between two adjacent diffraction orders, (410) has \(M-1\) zeros and \(M-2\) secondary maxima (see Fig. 97). The angular width of a diffraction order is half the angular distance to the nearest zeros next to the order, i.e.

If there are more slits the intensity peaks into which the energy is diffracted are narrower and the peaks are higher.

Fig. 97 An illustration of a diffraction pattern of a series of five slits.#

As explained above, there holds in the Fraunhofer far field: \(\theta= x/z<1\). This sets a limit to the number of diffracted orders:

Hence, the larger the ratio of the period and the wavelength, the more diffraction orders.

The property (411) that the diffraction orders \( m\neq 0\) depend on wavelength is used to separate wavelengths. Grating spectrometers use periodic structures such as an array of slits to very accurately separate and measure wavelengths. The \(m\)th diffraction order of two wavelengths \(\lambda_1\) and \(\lambda_2>\lambda_1\) are just separated if

which implies with \(\Delta \lambda=\lambda_2-\lambda_1\) and \(\lambda=\lambda_1\) that

It follows that the resolution is higher when there are more slits and for larger diffraction order. However, the disadvantage of using higer diffraction orders is that often their intensity is less. For a grating with 1000 periods one can obtain a resolution of \(\Delta \lambda/\lambda =10^{-3}\) in the first order.

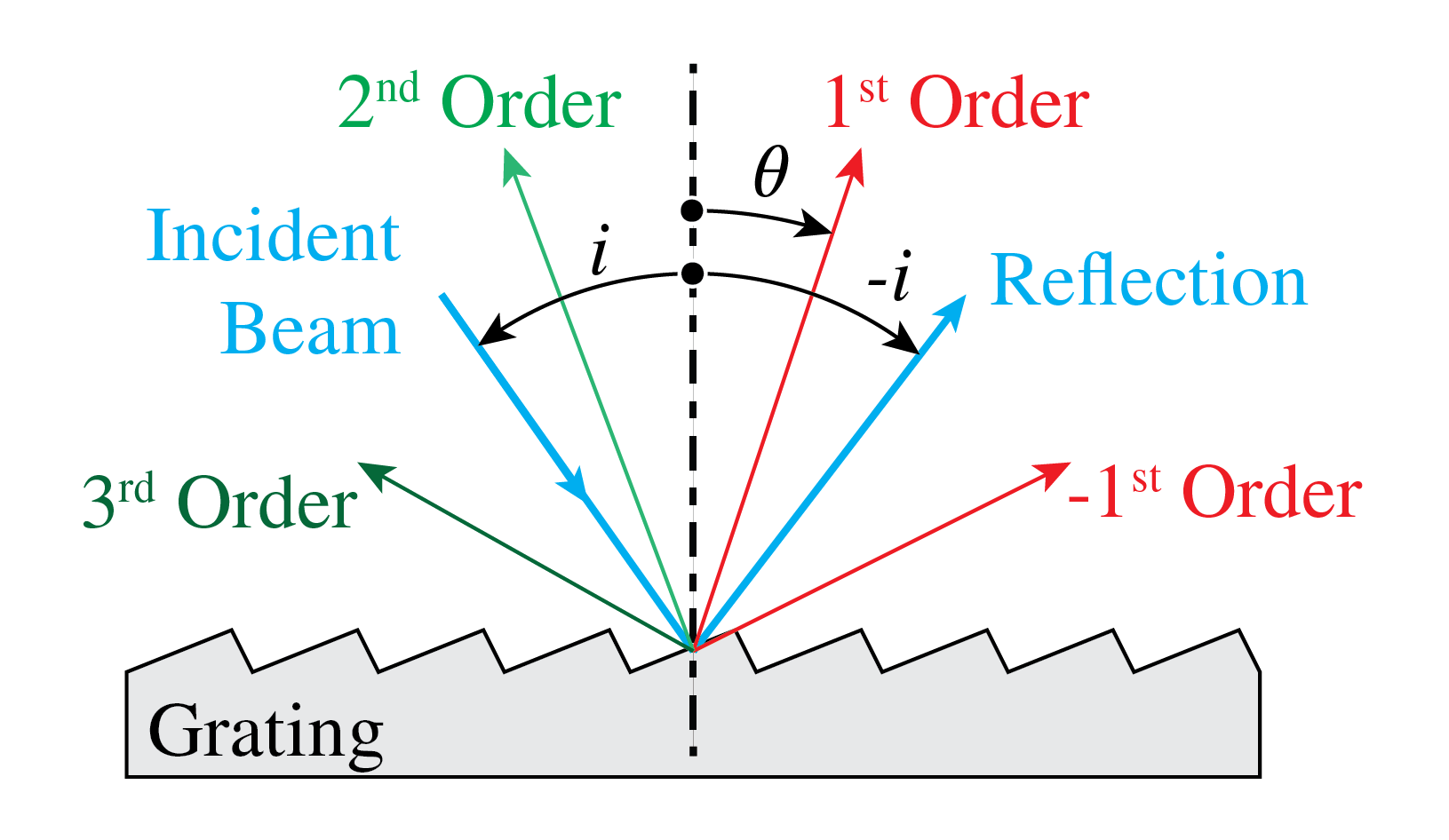

It should be remarked that a grating is obtained for any periodic variation of the refractive index. If the proper transmission function for the unit cell of the grating is substituted for \(W_{slit}\), the formulae above also give the Fraunhofer far field of such more general diffraction gratings. By changing the unit cell, the envelope of the diffraction pattern can be changed and a certain order can be given more intensity. In Fig. 98 a so-called blazed grating is shown which is used in reflection and which has a strong first diffracted order for a certain angle of incidence.

Fig. 98 Diffraction grating used in reflection with a so-called blazed unit cell.#

Remark. A periodic row of slits is an example of a diffraction grating. A grating is a periodic structure, i.e. the refractive index is a periodic function of position. Structures can be periodic in one, two and three directions. A crystal acts as a three-dimensional grating whose period is the period of the crystal, which typically is a few Angstrom. Electromagnetic waves with wavelength less than one Angstrom are called x-rays. When a beam of x-rays illuminates a crystal, a detector in the far field measures the Fraunhofer diffraction pattern given by the intensity of the Fourier transform of the refracted near field. These diffraction orders of crystals for x-rays where discovered by Von Laue and are used to study the atomic structure of crystals.

Fraunhofer Diffraction Revisited#

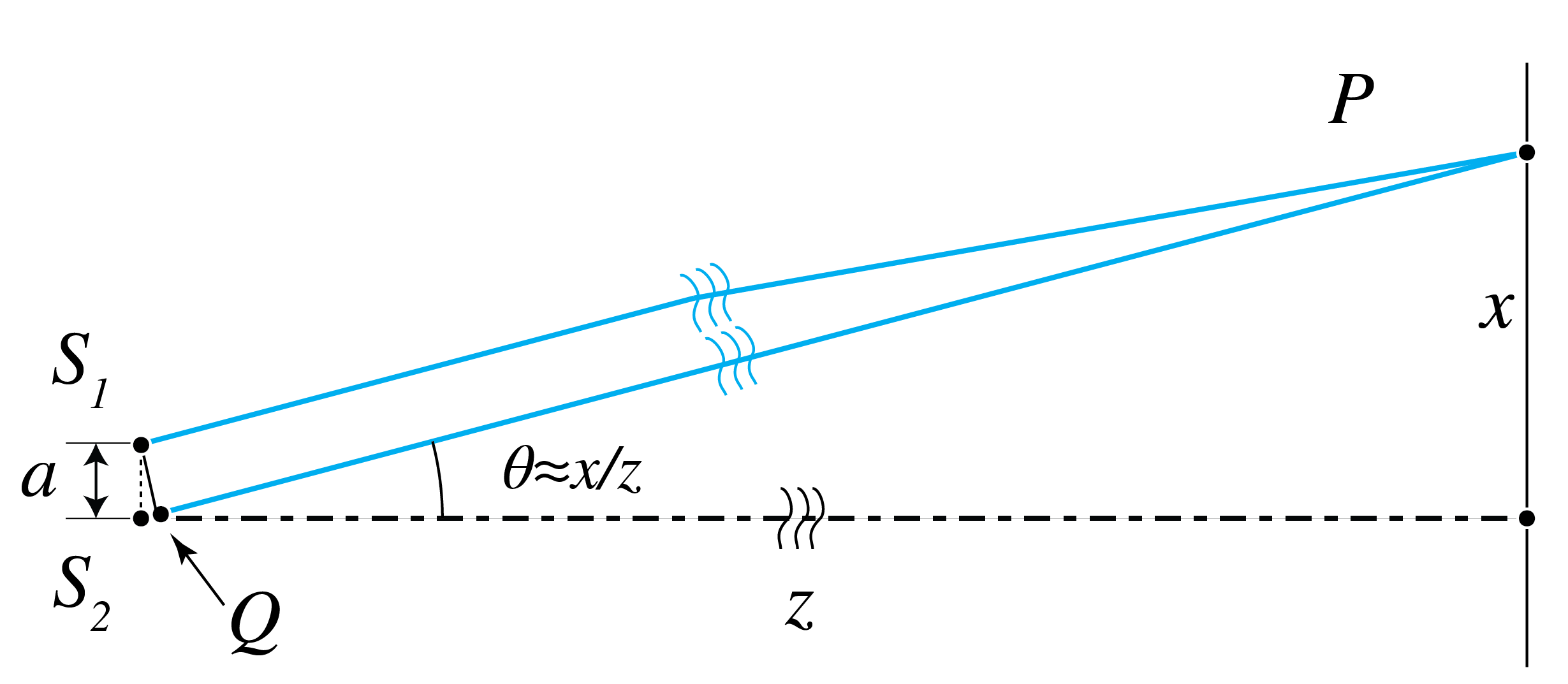

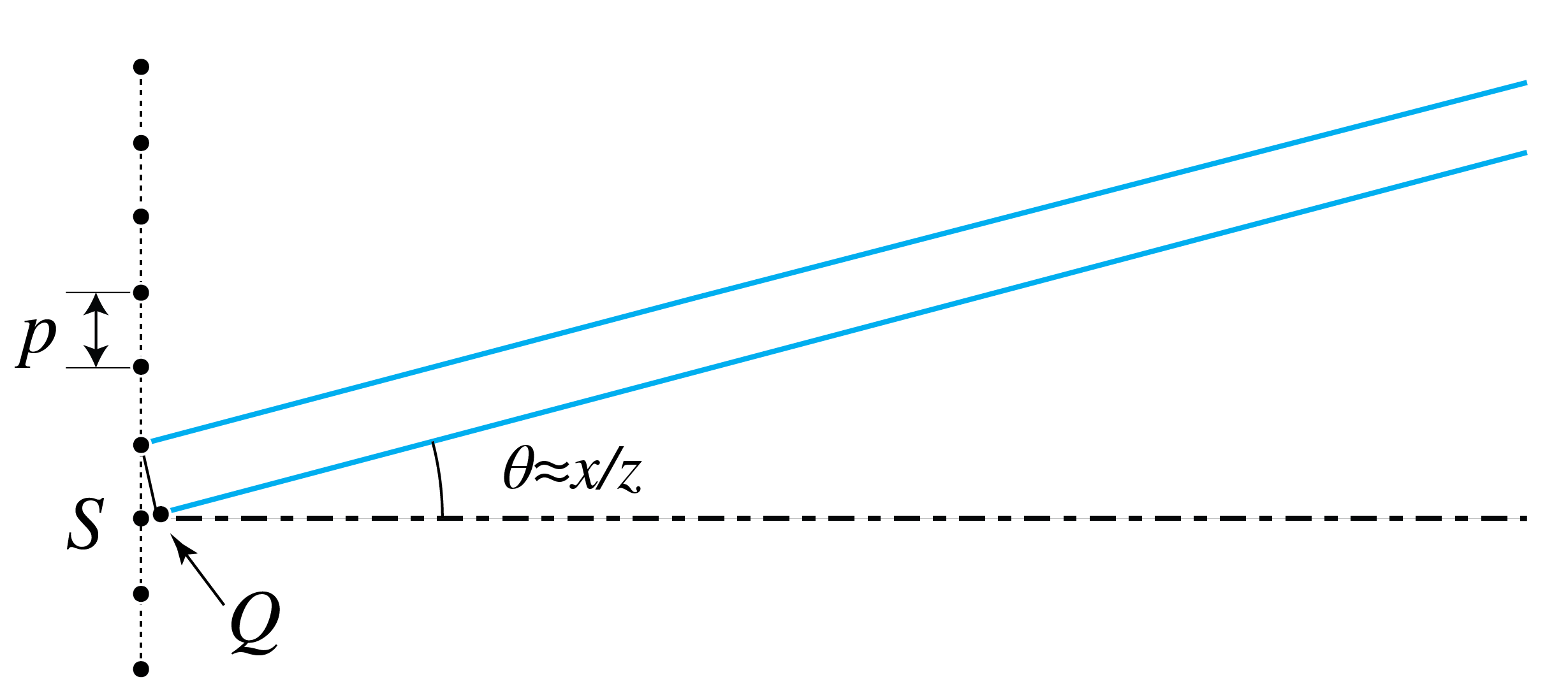

Fraunhofer diffraction patterns can qualitatively be explained by considering directions in which destructive and constructive interferences occur. Consider two mutually coherent point sources \(S_1\), \(S_2\) on the \(x\)-axis as shown in Fig. 99. We assume that these point sources are in phase. On a screen at large distance \(z\) an interference pattern is observed. If the distance \(z\) of the screen is very large, the spherical wave fronts emitted by the point sources are almost plane at the screen and the field is the Fraunhofer far field of the two point sources. In point \(P\) on the screen at a distance \(x\) above the \(z\)-axis the optical path differences of the waves emitted by the two sources is approximately given by \(S_2Q=a \theta\), where \(\theta=x/z\) is assumed small. Hence constructive interference occurs for angles \(\theta\) such that \(S_2Q=m\lambda\) for some integer \(m\), i.e. when

Destructive interference occurs when the path length difference satisfies \(S_2Q=\lambda/2 + m \lambda\) for some integer \(m\), hence for angles

If the point sources have the same strength, their fields perfectly cancel for these angles.

Fig. 99 Interference of to mutually coherent point sources. For \(z\) very large points \(P\) where constructive and destructive interference occurs are such that for some integer \(m\): \(S_2Q=m \lambda\) and \(S_2Q=(1/2 + m) \lambda\), respectively.#

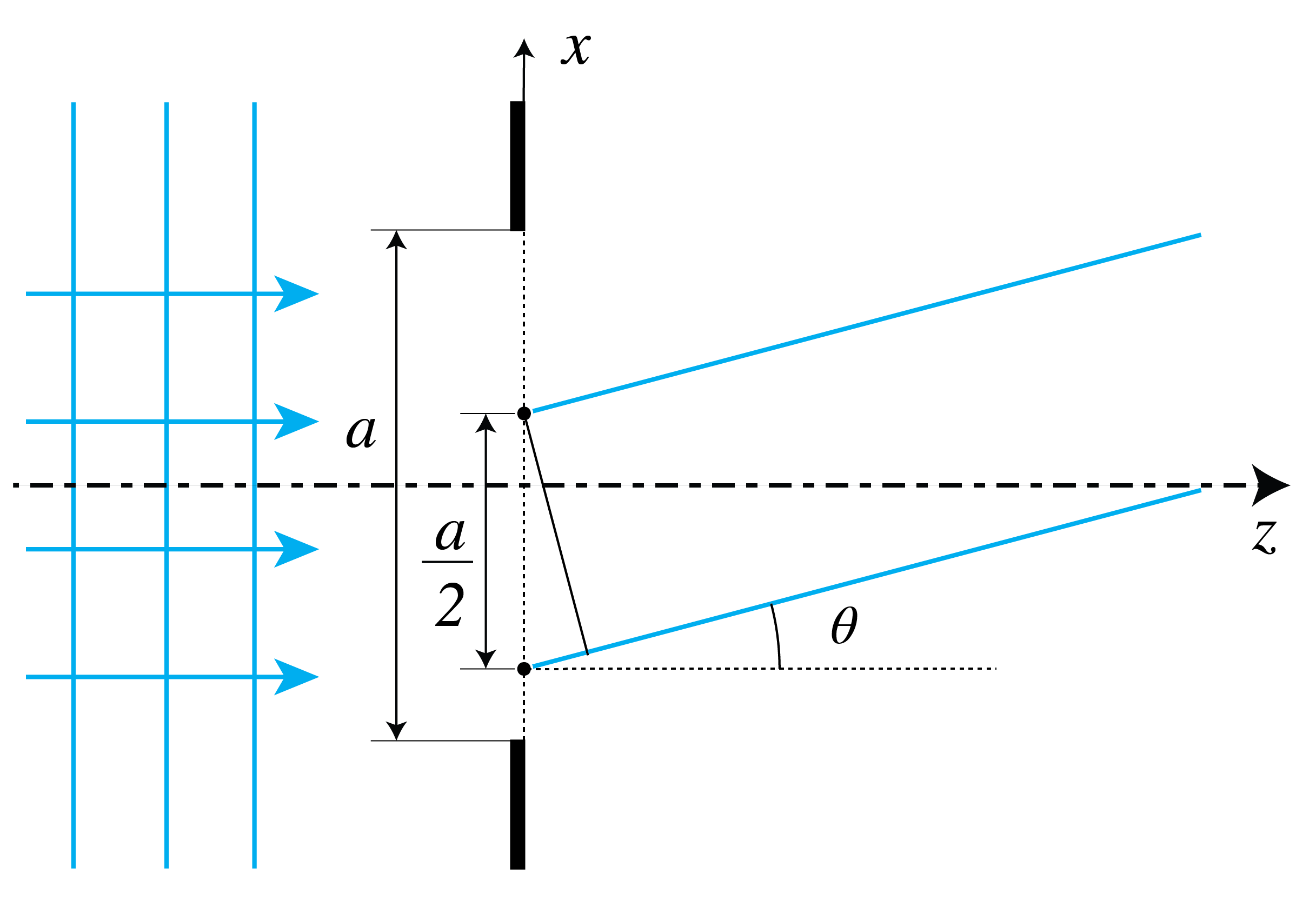

Now consider a slit as shown in Fig. 100 which is illuminated by a perpendicular incident plane wave. By the Huygens-Fresnel principle, the field at a screen far from the slit is the sum of the fields of point sources in the aperture, with strengths proportional to the field in the slit at the position of the point sources. When the slit is illuminated by a plane wave at perpendicular incidence, all point sources are in phase and have equal strength. Divide the slit in two equal halves as shown in Fig. 100. The point sources in the slit can be arranged into pairs, of which one point source is in the upper half of the slit and the other is at the equivalent position (at distance \(a/2\) from the other point source) in the lower half of the slit. Let \(\theta\) be an angle for which the two point sources of a pair cancel each other i.e.

since the distance between the point sources is \(a/2\). By translating the pair of point sources through the slits, it follows that both half slits perfectly cancel each other for these angles. In this way we have found the angles \(\theta = m\lambda/a\) with \(m\) odd for which destructive interference occurs. Destructive interference for \(m\) even can be derived by further subdivisions of the aperture.

Fig. 100 By dividing the slit into two slits of size \(a/2\) each and considering pairs of point sources of which one is in the upper half of the slit and the other is at the corresponding position in the lower half, angles where destructive interference occurs between these point sources lead to minima in the diffraction pattern. Note that the point sources have corresponding positions in the two parts of the slit if their distance is \(a/2\).#

In general it is easier to find the angles for which the far field vanishes than to find (local) maxima of the field. An exception is the case of a diffraction grating. It follows from Fig. 101 that there will be constructive interference between adjacent periods, and hence for all periods, for angles for which the distance \(SQ\) in Fig. 101 is a multiple of the wavelength, i.e. for

which corresponds to the direction of the diffraction orders. For other angles the phases of the fields of the different periods differ widely and therefore the fields almost cancel at these angles when there are many periods. This explains that for a diffraction grating of many periods, the far field intensity is highly concentrated in particular directions given by the orders (419) which depend only on the ratio of the wavelength and the period.

Fig. 101 If the angle \(\theta\) is such that \(SQ\) is a multiple of the wavelength, two adjacent periods, and hence all periods of the grating, constructively interfere. These angles correspond to the diffraction orders.#

Fourier Optics#

In this section we apply diffraction theory to a lens. We consider in particular the focusing of a parallel beam and the imaging of an object.

Focusing of a Parallel Beam#

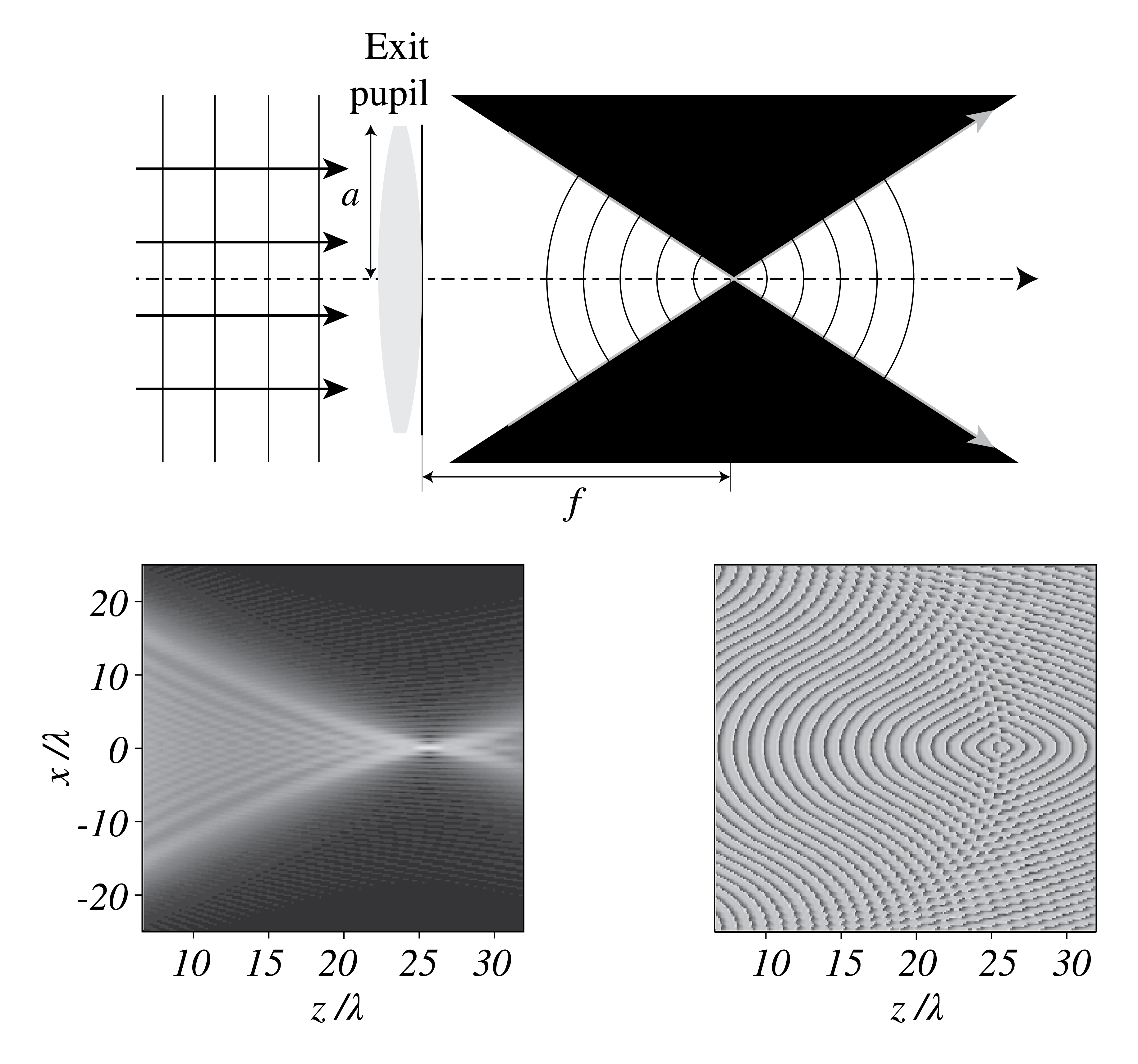

A lens induces a local phase change to an incident field in proportion to the local thickness of the lens. Let a plane wave which propagates parallel to the optical axis be incident on a positive lens. In Gaussian geometrical optics the incident rays are all focused into the image focal point. According to the Principle of Fermat, all rays have travelled the same optical distance when they intersect in the image focal point where they constructively interfere and cause an intensity maximum. The wavefronts are in the focal region pats of spheres with centre the focal point and cut off by the cone with the focal point as top and opening angle \(2 a/f\), as shown in Fig. 102. Behind the focal point, there is a second cone with again spherical wavefronts, but there the light is propagating away from the focal point. According to Gaussian geometrical optics it is in image space completely dark outside of the two cones in Fig. 102. However, as we will show, in diffraction optics this is not true.

We assume that the lens is thin and choose as origin of the coordinate system the centre of the thin lens with the positive \(z\)-axis along the optical axis. Let \(f_i\) be the \(z\)-coordinate of the image focal point according to Gaussian geometrical optics. Then \((0,0,f_i)\) is the image focal point. Let \((x,y,z)\) be a point between the lens and this focal point. According to geometrical optics the field in \((x,y,z)\) is proportional to

where we have included the time-dependence. Indeed the surfaces of constant phase:

are spheres with centre the focal point. For increasing time, these spheres converge to the focal point, while the amplitude increases as the distance to the focal point decreases, so that energy is preserved.

Remark. For a point \((x,y,z)\) to the right of the focal point, i.e. for \(z>f_i\), the spherical wave fronts propagate away from the focal point and therefore there \(-ik\) should be replaced by \(+ik\) in the exponent in (420).

The exit pupil of the lens is in the plane \(z=0\) where, according to (420), the field is

where the time dependence has been omitted and

i.e. \(1_{\bigodot_a}(x,y)=1\) for \((x,y)\) in the exit pupil of the lens and \(=0\) otherwise. If \(a/f_i\) is sufficiently small, we may replace the distance \(\sqrt{x^2 +y^2 + f_i^2}\) between a point in the exit pupil and the image focal point in the denominator of (421) by \(f_i\). This is not allowed in the exponent, however, because of the multiplication by the large wave number \(k\). In the exponent we therefore use instead the first two terms of the Taylor series (383):

which is valid for \(a/f_i\) sufficiently small. Then (421) becomes:

where we dropped the constant factors \(e^{i k f_i}\) and \(1/f_i\). For a general field \(U_0(x,y)\) incident on the lens, i.e. in the entrance pupil, the lens applies a transformation such that the field in the exit plane becomes:\

The function that multiplies \(U_0(x,y)\) is the transmission function of the lens:

This result makes sense: in the centre \((x,y)=0\) the lens is thickest, so the phase is shifted the most (but we can define this phase shift to be zero because only phase differences matter, not absolute phase). As is indicated by the minus-sign in the exponent, the further you move away from the centre of the lens, the less the phase is shifted. For shorter \(f_i\), the lens focuses more strongly, so the phase shift changes more rapidly as a function of the radial coordinate. Note that transmission function (425) has modulus 1 so that energy is conserved.

The field at the right of (424) is used in diffraction optics as the field in the exit pupil. But instead of using ray tracing, the field in the focal region is computed using diffraction integrals. We substitute the field in the exit pupil in the Fresnel diffraction integral (385) and obtain:

The intensity \(I=|U|^2\) is shown at the bottom left of Fig. 102. It is seen that the intensity does not monotonically increase for decreasing distance to the focal point. Instead, secondary maxima occur along the optical axis. Also the boundary of the light cone is not sharp, as predicted by geometrical optics, but diffuse. The bottom right of Fig. 102 shows the phase in the focal region. The wave fronts are close to but not exactly spherical inside the cones.

Fig. 102 Top: wavefronts of the incident plane wave and the focused field according to Gaussian geometrical optics. There is no light outside of the two cones. Bottom left: amplitude as predicted by diffraction optics. The boundary of the cones is diffuse and it is not absolutely dark outside of the cones. Furthermore, the intensity does not increase monotonically with decreasing distance to the focal point, as predicted by geometrical optics. Bottom right: phase of the focused field as predicted by diffraction optics.#

For points in the image focal plane of the lens, i.e. \(z=f_i\), we have

which is the same as the Fraunhofer integral for propagation over the distance \(f_i\)!

Note

The field in the entrance pupil of the lens and the field in the focal plane are related by a Fourier transform (apart from a quadratic phase factor in front of the integral).

It can be shown that the fields in the front focal plane \(U(x,y,-f_i)\) and the back focal plane \(U(x,y,f_i)\) are related exactly by a Fourier transform, i.e. without the quadratic phase factor[6]. So a lens performs a Fourier transform. Let us see if that agrees with some of the facts we know from geometrical optics.

We know from Gaussian geometrical optics that if we illuminate a positive lens with rays parallel to the optical axis, these rays all intersect in the image focal point. This corresponds with the fact that for \(U_0(x,y)=1\) (i.e. plane wave illumination, neglecting the finite aperture of the lens, i.e. neglecting diffraction due to the finite size of the pupil), the Fourier transform of the pupil field is a delta peak:

which represents the perfect focused spot (without diffraction).

If in Gaussian geometrical optics we illuminate a lens with tilted parallel rays of light (a plane wave propagating in an oblique direction), then the point in the back focal plane where the rays intersect is laterally displaced. A tilted plane wave is described by \(U_0(\mathbf{r})=\exp(i\mathbf{k}_0\cdot\mathbf{r})\), and its Fourier transform with respect to \((x,y)\) is given by

which is indeed a shifted delta peak (i.e. a shifted focal spot).

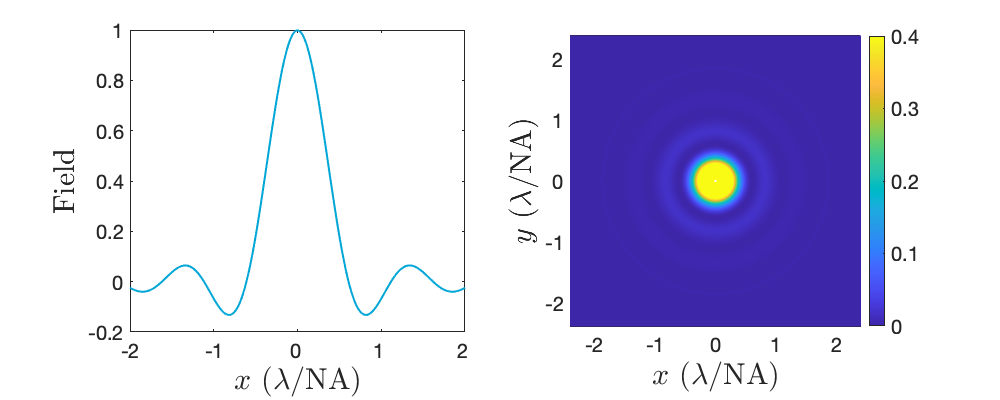

It seems that the diffraction model of light confirms what we know from geometrical optics. But in the previous two examples we discarded the influence of the finite size of the pupil, i.e. we have left out of consideration the function \(1_{\bigodot_a}\) in calculating the Fourier transform. If \(U_0(x,y)=1\) in the entrance pupil and we take the finite size of the pupil properly into account, the \(\delta\)-peaks become blurred: the focused field is then given by the Fourier transform of the circular disc with radius \(a\), evaluated at spatial frequencies \(\xi = \frac{x}{\lambda f_i}\), \(\eta =\frac{ y}{\lambda f_i}\). This field is called the Airy spot and is given by (See (514)):

where \(J_1\) is the Bessel function of the first kind and where the phase factors in front of the Fourier transform have been omitted. The pattern is shown in Fig. 103. It is circular symmetric and consists of a central maximum surrounded by concentric rings of alternating zeros and secondary maxima with decreasing amplitudes. In cross-section, as function of \(r=\sqrt{x^2+y^2}\), the Airy pattern is similar (but not identical) to the \(\text{ sinc}\)-function. From the uncertainty principle illustrated in Fig. 93 it follows that the size of the focal spot decreases as \(a\) increases, and from (428) we see that the Airy function is a function of the dimensionless variable \(a r/(\lambda f_i)\). Hence the focal spot becomes narrower as \(a/(\lambda f_i)\) increases. The Numerical Aperture (\(\textit{NA}\)) is defined by

Since the first zero of the Airy pattern occurs for \(a r/(\lambda f_i)= 0.61\), the width of the focal spot can be estimated by

Fig. 103 Left: cross section of the field of the Airy pattern. Right: intensity of the Airy pattern.#

Remark. In the simple case of a single thin lens, the entrance and exit pupils coincide with the lens itself. As has been explained in Stops, the exit pupil in systems consisting of several lenses is the real or virtual image of the aperture stop under all optical lenses to the right of the stop. To model the effect of diffraction in such more complicated systems, diffraction is assumed to take place entirely in the exit pupil. The field in the exit pupil is first determined by non-paraxial ray tracing and is then integrated over the exit pupil using the Fresnel diffraction integral.

Imaging by a lens#

It follows from the derivations in the previous section that the Airy pattern is the image of a point source infinitely far in front of a lens. In this section we study the imaging of a general object at finite distance to the lens. Consider first a real point object on the optical axis with coordinate \(z=s_o<f_o\) to the left of the object focal point of a positive lens with image focal coordinate \(f_i>0\). This lens will form a real image with positive coordinate \(s_i>0\).

The field in image space is derived using the Fresnel diffraction integral, similar to the focused field in the previous section. We postulate that the lens transforms the field radiated by the point object into a spherical wave in the exit pupil, which converges to the ideal image point of Gaussian geometrical optics. We substitute this spherical pupil field in the Fresnel diffraction integral to compute the field in image space. Then for an object point on the optical axis we find the same Airy pattern as in Fig. 103, except that the variable \(a r/(\lambda f_i)\) is replaced by \(a r/(\lambda s_i)\), where \(s_i\) is the image coordinate as given by the Lensmaker’s Formula. This field is called the Point Spread Function (PSF):

For object points that are not on the optical axis, the PSF is translated such that it remains centred on the ideal Gaussian image point.

A general object field \(U_o(x,y) \) can be considered a superposition of point objects and the images of these points are given by translated PSFs:

where \((x_i, y_i)\) are the transverse coordinates of the image point according to Gaussian geometrical optics. The total image field is obtained by summing (integrating) over these PSFs, weighted by the field at the object points:

where \(x_o=x_i/M\), \(y_o=y_i/M\) is the image point and \(M\) is the magnification. The integral can be made into a convolution by using the coordinates \(x_i, y_i\) as integration variables.

It is clear from (430) that larger radius \(a\) of the lens and smaller wavelength \(\lambda\) imply a narrower PSF. This in turn implies that the kernel in the convolution is more sharply peaked and hence that the resolution of the image is higher [7].

Remark.

If laser light is used to illuminate the object, the object field may be considered perfectly coherent. This implies that a detector in the image plane would measure the squared modulus of the complex field (431):

In this case the system is called a coherent imaging system.

If the object is a spatially incoherent extended source, the fields emitted by the point sources of which extended source consists cannot interfere in the image plane. Therefore, in this case the intensity in the image plane is given by the incoherent sum:

where \(I_o=|U_o|^2\) is the intensity distribution of the extended source. Hence the image intensity is expressed in the intensity of the source by a convolution with the intensity of the PSF. This system is called a incoherent imaging system.

An object is often illuminated by a spatially incoherent extended light source and then imaged. According to the discussion in More on Spatial Coherence, the field that illuminates the object is then partially coherent. It is more coherent when the angle that the source extends at the object is smaller. The intensity in the image plane can be computed by splitting the spatially incoherent source into sufficiently many mutually incoherent point sources and computing the intensities in the image plane due to the illuminaton of the object by each individual point source. The total intensity in the image plane is then the sum of these intensities.

We have considered only scalar diffraction theory. However, for a lens with numerical aperture larger than 0.6, the change of polarisation can be important. Then a more general diffraction theory is needed [8].

Spatial Light Modulators and Optical Fourier Filtering#

SLM. The field in the entrance pupil of a lens, in particular its phase can be changed spatially by a so-called spatial light modulator (SLM). A SLM has thousands of pixels by which very general focused fields can be made. An example is a focused fields with only a longitudinal component in the focal point (i.e. only the \(E_z\)-component is nonzero at the focal point) [9].

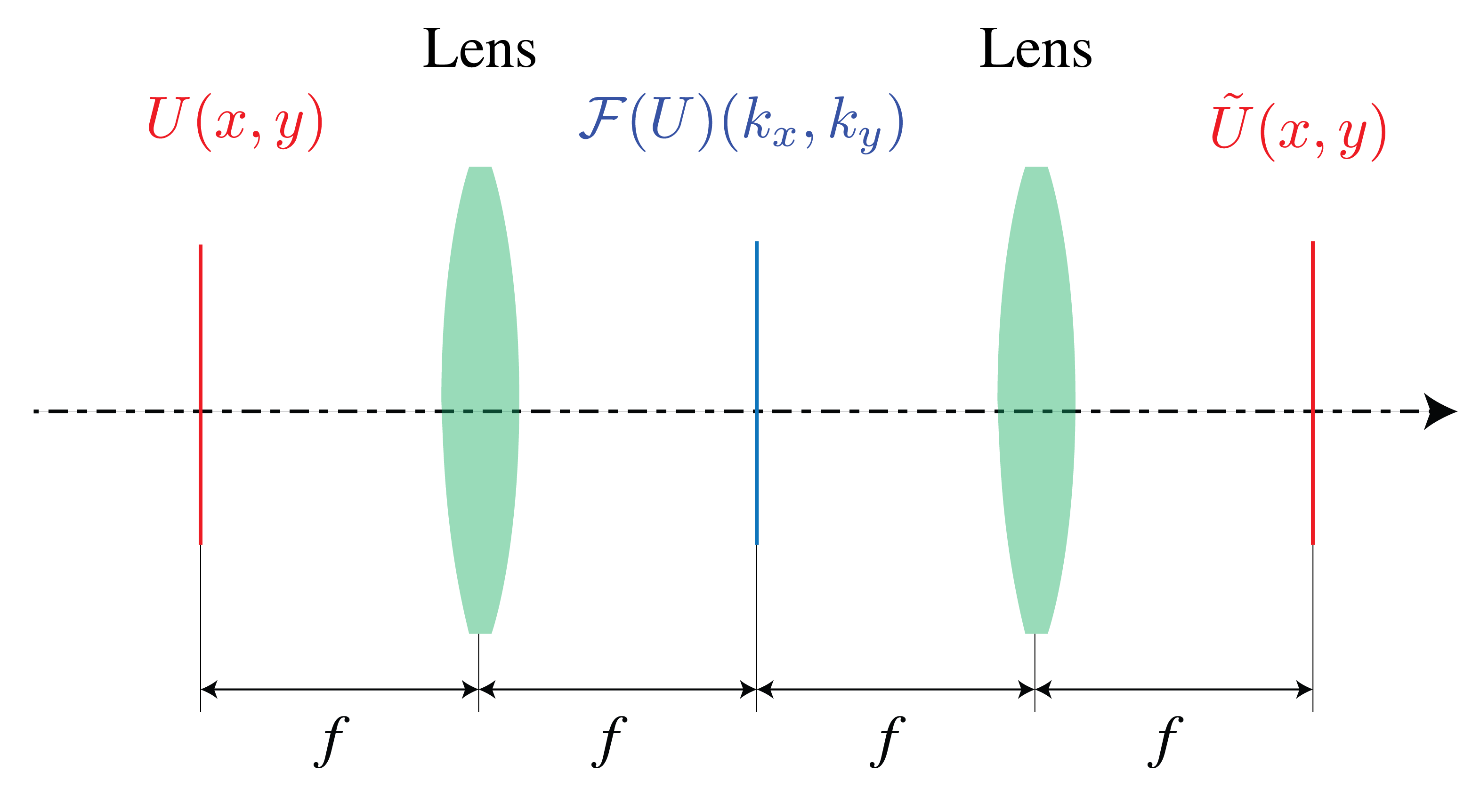

Fourier filtering. Suppose we have the setup as shown in Fig. 104. With one lens we can create the Fourier transform of some field \(U(x,y)\). Let a mask be put in the focal plane and a second lens be used to refocus the light. This implies that the amplitude and/pf phase of the plane waves in the angular spectrum of the field are manipulated. The procedure is called Fourier filtering using lenses. An application of this idea is the phase contrast microscope.

Fig. 104 Set-up for Fourier filtering. The first lens creates a Fourier transform of \(U(x,y)\), to which we can apply some operation (e.g. applying different phase shifts to different parts of the field). The second lens then applies another Fourier transform (which is the same as the inverse Fourier transform and a mirror transformation).#

Super-resolution#

We have emphasised that evanescent waves set the ultimate limit to resolution in optics. In Geometrical Optics it was explained that, although within geometrical optics one can image a single point perfectly using conical surfaces, several points, let alone an extended object, cannot be imaged perfectly. It was furthermore explained that when only paraxial rays are considered, i.e. within Gaussian geometrical optics, perfect imaging of extended objects is possible. However, rays of which the angle with the optical axis is large cause aberrations. But even when perfect imaging would be possible in geometrical optics, a real image can never be perfect due to the fact that information contained in the amplitudes and phase of the evanescent waves cannot propagate. The resolution that can be obtained with an optical system consisting of lenses is less than follows from considering the loss of information due to evanescent waves, because propagating waves with spatial frequencies that are too large to be captured by the optical system (i.e. waves of which the angles with the optical axis are larger than the numerical aperture) cannot contribute to the image. Therefore the image of a point object has the size

where \(\textit{NA}_i=a/s_i\) is the numerical aperture in image space, i.e. it is the sinus of half the opening angle of the cone extended by the exit pupil at the Gaussian image point on the optical axis. This resolution limit is called the diffraction limit.

The size of the image of a point as given by the PSF in (430) is influenced by the magnification of the system. To characterise the resolution of a diffraction-limited system, it is therefore better to consider the numerical aperture on the object side: \(\textit{NA}_o = \textit{NA}_i |M| = a/s_o\). The value of \(\textit{NA}_o\) is the sinus of the half angle of the cone subtended by the entrance pupil of the system on the object point on the optical axis. This is the cone of wave vectors emitted by this object point that can contribute to the image (they are “accepted” by the optical system). The larger the half angle of this cone, the more spatial frequencies can contribute to the image and hence the larger the information about finer details of the object that can reach the image plane.

It should be clear by now that beating the diffraction limit is extremely difficult. Nevertheless, a lot of research in optics is directed towards realising this goal. Many attempts have been made, some successful, others not so, but, whether successful or not, most were based on very ingenious ideas. To close this chapter on diffraction theory, we will give examples of attempts to achieve what is called super-resolution.

Confocal microscopy. A focused spot is used to scan the object and the reflected field is imaged onto a small detector (‘‘point detector’’). The resolution is roughly a factor 1.5 better than for normal imaging with full field of view using the same objective. The higher resolution is achieved thanks to the illumination by oblique plane waves that are present in the spatial Fourier transform of the illuminating spot. By illumination with plane waves with large angles of incidence, higher spatial frequencies of the object which under normal incidence are not accepted by the objective, are now ‘‘folded back’’ into the cone of plane waves accepted by the objective. The higher resolution comes at the prize of longer imaging time because of scanning. The confocal microscope was invented by Marvin Minsky in 1957.

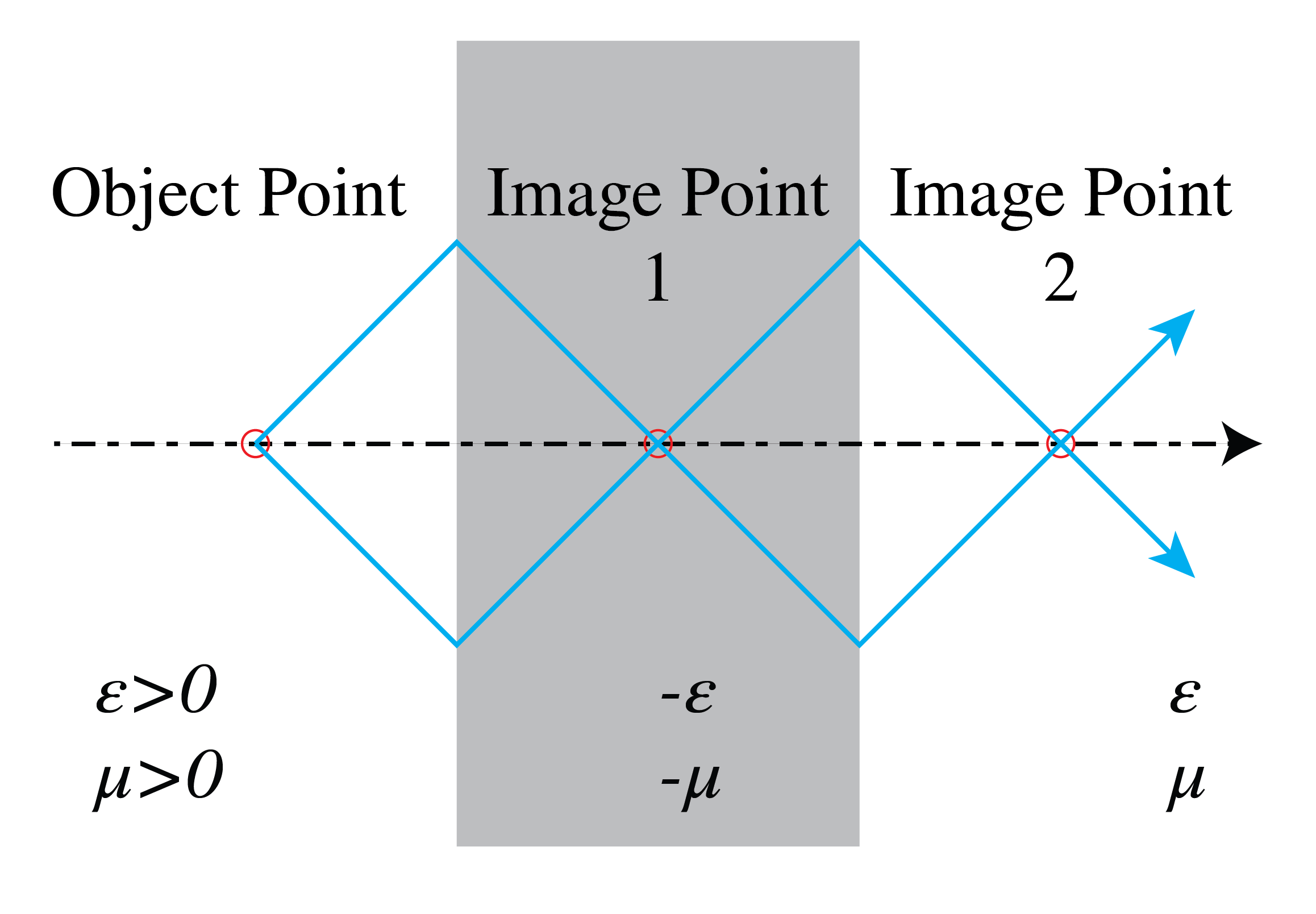

The Perfect Lens based on negative refraction. It can be shown that a slab of a material with negative permittivity and negative permeability, which are opposite to the permittivity and permeability of the surrounding medium, there is no reflection at the interfaces. Furthermore, the phase velocity in a material with negative permittivity and negative permeability is opposite to the direction of the flow of energy and plane waves are refracted at the interface as if the refractive index in Snell’s Law is negative. Therefore these media are called negative index media. Because the phase velocity is opposite to the energy velocity, it is as if time is reversed inside the slab. The change of phase of propagating waves of the field of point source due to propagating in the surrounding medium is reversed inside the slab and at some distance on the other side of the slab there is an image point where all propagating waves are in phase, as illustrated in Fig. 105. Furthermore, evanescent waves gain amplitude inside the slab and it turns out they have the same amplitude in the image point as in the source, hence the image point is perfect. Note that the increase of amplitude of an evanescent wave does not violate the conservation of energy, because an evanescent wave does not propagate energy in the direction in which it is evanescent.

Fig. 105 Pendry’s perfect lens consists of a slab of a material with negative permittivity and negative permeability such that its absolute values are equal to the positive permittivity and positive permeability of the surrounding medium. Points outside the slab are imaged perfectly in two planes: one inside the slab and the other on the opposite side of the slab.#

The simple slab geometry seen in Fig. 105 which acts as a perfect lens was proposed by John Pendry in 2000 [10]. Unfortunately, a material with negative permittivity and negative permeability has not been found in nature. Therefore, many researchers have attempted to mimic such a material by mixing metals and dielectrics on a sub-wavelength scale. It seems that a negative index without absorption violates causality. But when there is absorption, the image is not anymore perfect.

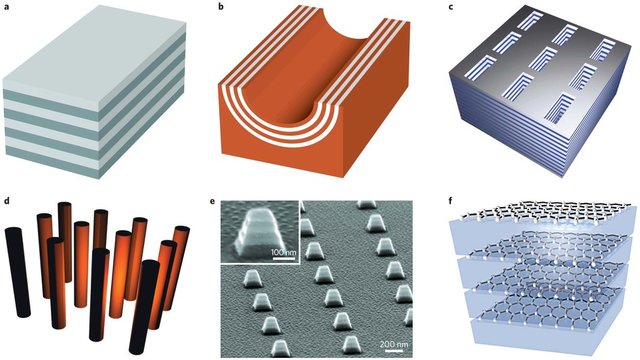

Hyperbolic materials. Hyperbolic materials are anisotropic, i.e. the phase velocity of a plane wave depends on the polarisation and on the direction of the wave vector. The permittivity of an anisotropic material is a tensor (loosely speaking a (3,3)-matrix). Normally the eigenvalues of the permittivity matrix are positive; however, in a hyperbolic material two eigenvalues are of equal sign and the third has opposite sign. In such a medium all waves with the so-called extraordinary state of polarisation propagate, no matter how high the spatial frequencies are. Hence, for the extraordinary state of polarisation evanescent waves do not exist and therefore super-resolution and perfect imaging should be possible in such a medium.

Fig. 106 Examples of composite materials consisting of thin (sub-wavelength) layers of metals and dielectrics. These artificial materials are called metamaterials. (A. Poddubny, I. Iorsh, P. Belov, & Y. Kivshar, Hyperbolic Metamaterials, {N}at. {P}hoton., 7(12), 948-957 (2013)).#

A few natural hyperbolic media exist for visible frequencies, but there are more in the mid-infrared. Researchers try to approximate hyperbolic media by metamaterials, made e.g. multilayers consisting of alternating thin metallic and dielectric layers, so that the effective permittivity has the desired hyperbolic property. Also metallic nanopilars embedded in a dielectric are used.

Nonlinear effects. When the refractive index of a material depends on the local electric field, the material is nonlinear. At optical frequencies nonlinear effects are in general very small, but with a strong laser they can become significant. One effect is self-focusing, where the refractive index is proportional to the local light intensity. The locally higher intensity causes an increase of the refractive index, leading to a waveguiding effect due to which the beam focuses even more strongly. Hence the focused beam becomes more and more narrow while propagating, until finally the material breaks down.

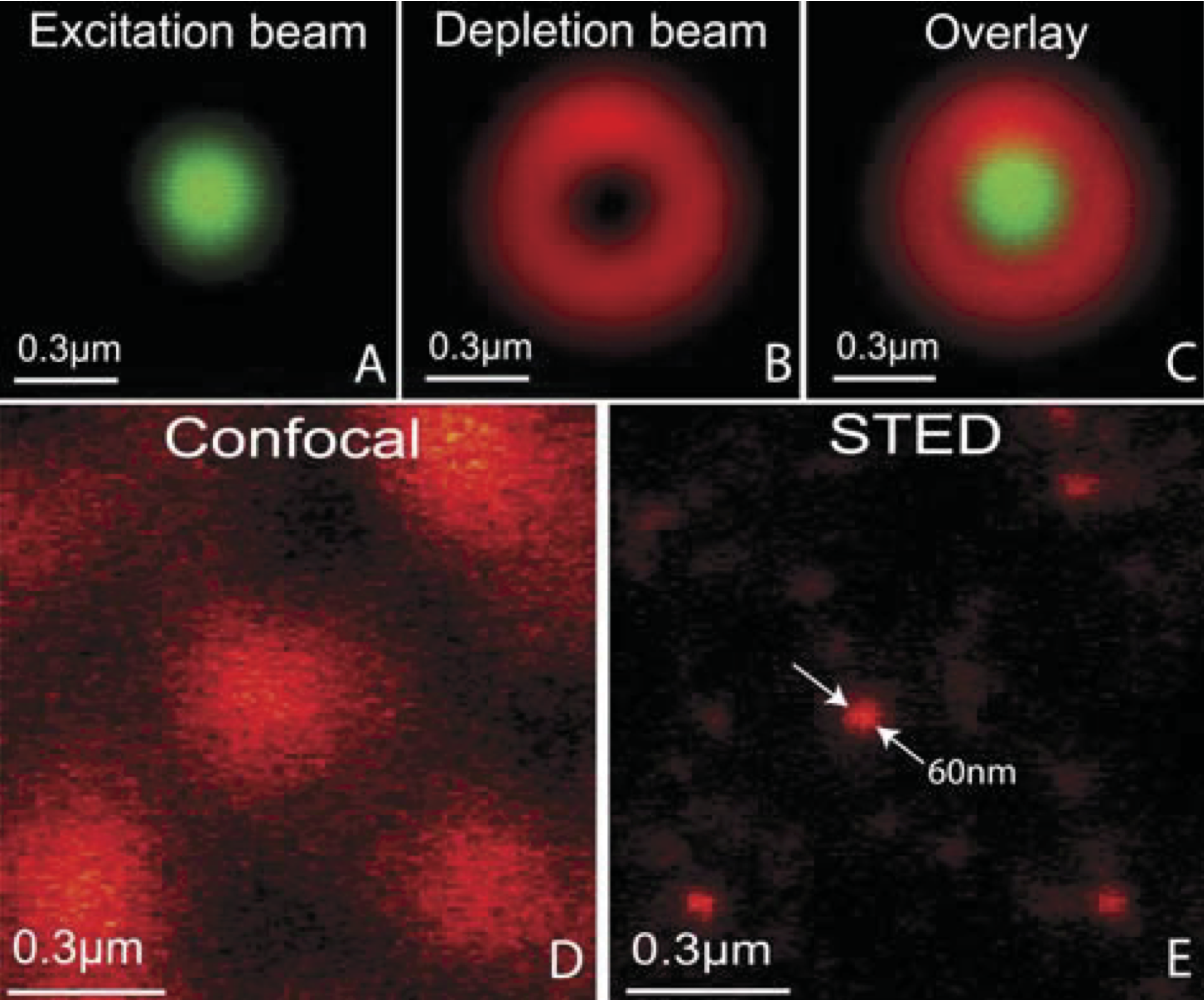

Stimulated Emission Depletion Microscopy (STED). This technique was invented by V. A. Okhonin in 1986 in the USSR and was further developed by Stefan Hell and his co-workers in the nineties. Hell received the Nobel Prize in chemistry for his work in 2014. STED is a non-linear technique with which super-resolution in fluorescence microscopy can be achieved. Images made with a fluorescence microscope are blurred when the fluorescent molecules are very close together. In the STED microscope a special trick is used to ensure that molecules which fluoresce at the same time are sufficiently distant from each other so that they can be imaged individually. To achieve this two focused spots are used: the first spot excites the molecules to a higher level. The second spot is slightly red-shifted and has a doughnut shape (see Fig. 107). It causes decay of the excited molecules to the lower level by stimulated emission (the excited state is depleted). Because of the doughnut shape of the second spot, the molecule in the centre of the spot is not affected and will still fluoresce. Crucial is that a doughnut spot has a central dark region which is very narrow, i.e. it can be much smaller than the Airy spot and this is the reason for the super-resolution.

Fig. 107 Spot used for excitation (top left) and for depletion (top middle). Fluorescence signal top right. In the lower figure the confocal image is compared to the STED image. (P.F. Rodriguez and al., Building a fast scanning stimulated emission depletion microscope, Materials Science (2012))#

External sources in recommended order

Every picture is made of waves - Sixty Symbols, 3:33 to 7:15: Basic explanation of Fourier transforms.

Heisenberg’s Microscope - Sixty Symbols, 0:20 to 2:38: Basic explanation of the uncertainty principle (though in the context of quantum physics).

E. Hecht, Optics, § 7.4.4, subsection ‘Fourier Analysis and Diffraction’.

J. Goodman, Introduction to Fourier Optics, §5.2.2: Several calculations on the Fourier transforming properties of lenses.

E. Hecht, Optics, § 10.2.6, subsection ‘Resolution of imaging systems’.