Basic Electromagnetic and Wave Optics

Maxwell’s equations provide a very complete description of light, which includes diffraction, interference and polarisation. Yet this description is, strictly speaking, not fully accurate, because it allows monochromatic electromagnetic waves to carry any amount of energy, whereas according to quantum optics the energy is quantised. According to quantum optics, light is a flow of massless particles, the photons, each of which carries an extremely small quantum of energy: \(\hbar\omega\), where \(\hbar = 6.63 \times 10^{-34}/(2\pi)\) Js and \(\omega\) is the angular frequency. For visible light the frequency \(\omega/(2\pi)\) is of the order \(5 \times 10^{14}\) Hz, hence \(\hbar\omega \approx 3.3\times {10^{-19}}\) J.
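The photon energy quoted above can be checked with a few lines of Python. This is a minimal sketch: the helper name `photon_energy` is hypothetical, the value \(5\times 10^{14}\) Hz is taken as the frequency \(\omega/(2\pi)\), and Planck's constant is the exact SI value rather than the rounded one in the text.

```python
import math

PLANCK_H = 6.62607015e-34          # Planck constant in J s (exact SI value)
HBAR = PLANCK_H / (2 * math.pi)    # reduced Planck constant in J s


def photon_energy(frequency_hz: float) -> float:
    """Return the energy hbar*omega of one photon, with omega = 2*pi*frequency."""
    omega = 2 * math.pi * frequency_hz
    return HBAR * omega


energy_visible = photon_energy(5e14)
print(f"photon energy at 5e14 Hz: {energy_visible:.2e} J")
```

The result is about \(3.3\times 10^{-19}\) J, in agreement with the estimate in the text.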

Quantum optics is only important in experiments involving a small number of photons, i.e. at very low light intensities and for specially prepared photon states (e.g. entangled states) for which there is no classical description. In almost all applications of optics the light sources emit so many photons that quantum effects are irrelevant (see Table 1).

| Light source | Number of photons/(s·m\(^2\)) |
|---|---|
| Laser beam (10 mW, He-Ne, focused to 20 \(\mu\)m) | \(10^{26}\) |
| Laser beam (1 mW, He-Ne) | \(10^{21}\) |
| Bright sunlight on earth | \(10^{18}\) |
| Indoor light level | \(10^{16}\) |
| Twilight | \(10^{14}\) |
| Moonlight on earth | \(10^{12}\) |
| Starlight on earth | \(10^{10}\) |
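The order of magnitude of the two laser entries can be reproduced numerically. The sketch below rests on assumptions that are not stated in the text: a He-Ne wavelength of 632.8 nm, a circular spot whose diameter is the quoted 20 \(\mu\)m, and a hypothetical beam diameter of 1 mm for the unfocused 1 mW beam.

```python
import math

PLANCK_H = 6.62607015e-34       # Planck constant in J s
LIGHT_SPEED = 299_792_458.0     # speed of light in vacuum in m/s


def photon_flux_density(power_w: float, wavelength_m: float, spot_diameter_m: float) -> float:
    """Photons per second per square metre for a uniform circular spot."""
    photon_energy = PLANCK_H * LIGHT_SPEED / wavelength_m   # energy per photon in J
    spot_area = math.pi * (spot_diameter_m / 2) ** 2        # spot area in m^2
    return power_w / photon_energy / spot_area


# Assumed parameters (not given in the text): 632.8 nm, 20 um and 1 mm diameters.
flux_focused = photon_flux_density(10e-3, 632.8e-9, 20e-6)
flux_unfocused = photon_flux_density(1e-3, 632.8e-9, 1e-3)
print(f"focused 10 mW beam:  {flux_focused:.1e} photons/(s m^2)")
print(f"unfocused 1 mW beam: {flux_unfocused:.1e} photons/(s m^2)")
```

Both values land within an order of magnitude of the tabulated \(10^{26}\) and \(10^{21}\); the exact numbers depend on the assumed spot sizes.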

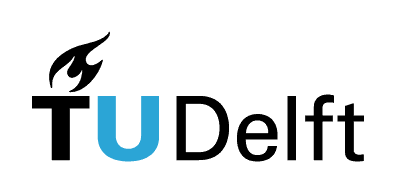

The visible part is only a small part of the overall electromagnetic spectrum (see Fig. 1). The results we will derive are however generally valid for electromagnetic waves of any frequency.

Fig. 1 The electromagnetic spectrum (from Wikimedia Commons by NASA / CC BY-SA).

The Maxwell Equations in Vacuum

In a vacuum, light is described by vector fields \(\mathbf{\mathcal{E}}(\mathbf{r},t)\) [Volt/m][1] and \(\mathbf{\mathcal{B}}(\mathbf{r},t)\) [Tesla=Weber/\(\text{m}^2\)=kg/(C.s)], which vary extremely rapidly with the position vector \(\mathbf{r}\) and time \(t\). These vector fields are traditionally called the electric field strength and the magnetic induction, respectively, and together they are referred to as “the electromagnetic field”. This terminology is explained by the fact that, because in optics these fields vary with time, the electric and magnetic fields always occur together, i.e. one does not exist without the other. Only when the fields are independent of time can there be an electric field without a magnetic field and conversely. The first case is called electrostatics, the second magnetostatics. Time-dependent electromagnetic fields are generated by moving electric charges, the so-called sources. Let the source have charge density \(\rho(\mathbf{r},t)\) [C/\(\text{m}^3\)] and current density \(\mathbf{\mathcal{J}}(\mathbf{r},t)\) [C/(s.\(\text{m}^2\))]. Since charge can be neither created nor destroyed, the rate of increase of charge inside a volume \(V\) must be equal to the flux of charges passing through its surface \(S\) from the outside to the inside of \(V\), i.e.:

where \(\hat{\mathbf{n}}\) is the outward-pointing unit normal on \(S\). Using the Gauss divergence theorem (485), the left-hand side of (1) can be converted to a volume integral from which follows the differential form of the law of conservation of charge:

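At every point in space and at every time, the field vectors satisfy the Maxwell equations[2], [3]:

\[ \mathbf{\nabla}\times\mathbf{\mathcal{E}} = -\frac{\partial \mathbf{\mathcal{B}}}{\partial t}, \tag{3} \]

\[ \mathbf{\nabla}\times\frac{\mathbf{\mathcal{B}}}{\mu_0} = \epsilon_0\frac{\partial \mathbf{\mathcal{E}}}{\partial t} + \mathbf{\mathcal{J}}, \tag{4} \]

\[ \mathbf{\nabla}\cdot(\epsilon_0\mathbf{\mathcal{E}}) = \rho, \tag{5} \]

\[ \mathbf{\nabla}\cdot\mathbf{\mathcal{B}} = 0, \tag{6} \]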

where \(\epsilon_0= 8.8542 \times 10^{-12}\) C\(^2\)N\(^{-1}\)m\(^{-2}\) is the dielectric permittivity and \(\mu_0 = 1.2566 \times 10^{-6}\) m kg C\(^{-2}\) is the magnetic permeability of vacuum. The quantity \(c=(1/\epsilon_0\mu_0)^{1/2}=2.99792458 \times 10^{8}\) m/s is the speed of light in vacuum (this value is exact, because it is used to define the metre) and \(Z=\sqrt{\mu_0/\epsilon_0}=377\,\Omega =377\) Vs/C is the impedance of vacuum.
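The relations \(c=(1/\epsilon_0\mu_0)^{1/2}\) and \(Z=\sqrt{\mu_0/\epsilon_0}\) are easy to verify numerically. The sketch below uses more digits for \(\epsilon_0\) and \(\mu_0\) than the text does; the constant names are illustrative.

```python
import math

EPSILON_0 = 8.8541878128e-12   # permittivity of vacuum in C^2 N^-1 m^-2
MU_0 = 1.25663706212e-6        # permeability of vacuum in m kg C^-2

# Speed of light and impedance of vacuum from the two constants.
light_speed = 1.0 / math.sqrt(EPSILON_0 * MU_0)
vacuum_impedance = math.sqrt(MU_0 / EPSILON_0)

print(f"c = {light_speed:.6e} m/s")
print(f"Z = {vacuum_impedance:.2f} Ohm")
```

The computed values agree with \(2.998\times 10^{8}\) m/s and \(377\,\Omega\) to the precision quoted in the text.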

Atoms are neutral and consist of a positively charged nucleus surrounded by a negatively charged electron cloud. In an electric field, the centres of charge of the positive and negative charges get displaced with respect to each other. Therefore, an atom in an electric field behaves like an electric dipole. In polar molecules, the centres of charge of the positive and negative charges are permanently separated, even without an electric field. But without an electric field, they are randomly orientated and therefore have no net effect, while in the presence of an electric field they line up parallel to the field. Whatever the precise mechanism, an electric field induces a certain net dipole moment density per unit volume \(\mathbf{\mathcal{P}}(\mathbf{r})\) [C/\(\text{m}^2\)] in matter which is proportional to the local electric field \(\mathbf{\mathcal{E}}(\mathbf{r})\):

\[ \mathbf{\mathcal{P}}(\mathbf{r}) = \epsilon_0\chi_e\,\mathbf{\mathcal{E}}(\mathbf{r}), \tag{7} \]

where \(\chi_e\) is a dimensionless quantity, the electric susceptibility of the material. A dipole moment which varies with time radiates an electromagnetic field. It is important to realize that in (7) \(\mathbf{\mathcal{E}}\) is the total local electric field at the position of the dipole, i.e. it contains the contribution of all other dipoles, which are also excited and radiate an electromagnetic field themselves. Only in the case of dilute gases can the influence of the other dipoles in matter be neglected, and the local electric field is then simply given by the field emitted by a source external to the matter under consideration.

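A dipole moment density that changes with time corresponds to a current density \(\mathbf{\mathcal{J}}_p\) [Ampere/\(\text{m}^2\)=C/(\(\text{m}^2\) s)] and a charge density \(\varrho_p\) [C/\(\text{m}^3\)] given by

\[ \mathbf{\mathcal{J}}_p = \frac{\partial \mathbf{\mathcal{P}}}{\partial t} = \epsilon_0\chi_e\frac{\partial \mathbf{\mathcal{E}}}{\partial t}, \tag{8} \]

\[ \varrho_p = -\mathbf{\nabla}\cdot\mathbf{\mathcal{P}} = -\mathbf{\nabla}\cdot(\epsilon_0\chi_e\mathbf{\mathcal{E}}). \tag{9} \]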

All materials conduct electrons to a certain extent, although the conductivity \(\sigma\) [Ampere/(Volt m)=C/(Volt m s)] differs greatly between dielectrics, semiconductors and metals (the conductivity of copper is \(10^7\) times that of a good conductor such as sea water and \(10^{19}\) times that of glass). The current density \(\mathbf{\mathcal{J}}_c\) and the charge density \(\varrho_c\) corresponding to the conduction electrons satisfy:

\[ \mathbf{\mathcal{J}}_c = \sigma\mathbf{\mathcal{E}}, \tag{10} \]

\[ \frac{\partial \varrho_c}{\partial t} + \mathbf{\nabla}\cdot\mathbf{\mathcal{J}}_c = 0, \tag{11} \]

where (10) is Ohm’s Law. The total current density on the right-hand side of Maxwell’s Law (4) is the sum of \(\mathbf{\mathcal{J}}_p\), \(\mathbf{\mathcal{J}}_c\) and an external current density \(\mathbf{\mathcal{J}}_{ext}\), which we assume to be known. Similarly, the total charge density at the right of (5) is the sum of \(\varrho_p\), \(\varrho_c\) and a given external charge density \(\varrho_{ext}\). The latter is linked to the external current density by the law of conservation of charge (2). Hence, (4) and (5) become

We define the permittivity \(\epsilon\) in matter by

\[ \epsilon = \epsilon_0(1+\chi_e). \tag{14} \]

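Then (12) and (13) can be written as

\[ \mathbf{\nabla}\times\frac{\mathbf{\mathcal{B}}}{\mu_0} = \epsilon\frac{\partial \mathbf{\mathcal{E}}}{\partial t} + \sigma\mathbf{\mathcal{E}} + \mathbf{\mathcal{J}}_{ext}, \tag{15} \]

\[ \mathbf{\nabla}\cdot(\epsilon\mathbf{\mathcal{E}}) = \varrho_c + \varrho_{ext}. \tag{16} \]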

It is verified in Problem 1 that in a conductor any accumulation of charge is extremely quickly reduced to zero. Therefore we may assume that

\[ \varrho_c = 0. \tag{17} \]

If the material is magnetic, the magnetic permeability is different from vacuum and is written as \(\mu=\mu_0(1+\chi_m)\), where \(\chi_m\) is the magnetic susceptibility. In the Maxwell equations, one should then replace \(\mu_0\) by \(\mu\). However, at optical frequencies magnetic effects are negligible (except in ferromagnetic materials, which are rare). We will therefore always assume that the magnetic permeability is that of vacuum: \(\mu=\mu_0\).

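It is customary to define the magnetic field by \(\mathbf{\mathcal{H}}=\mathbf{\mathcal{B}}/\mu_0\) [Ampere/m=C/(m s)]. By using the magnetic field \(\mathbf{\mathcal{H}}\) instead of the magnetic induction \(\mathbf{\mathcal{B}}\), Maxwell’s equations become more symmetric:

\[ \mathbf{\nabla}\times\mathbf{\mathcal{E}} = -\mu_0\frac{\partial \mathbf{\mathcal{H}}}{\partial t}, \tag{18} \]

\[ \mathbf{\nabla}\times\mathbf{\mathcal{H}} = \epsilon\frac{\partial \mathbf{\mathcal{E}}}{\partial t} + \sigma\mathbf{\mathcal{E}} + \mathbf{\mathcal{J}}_{ext}, \tag{19} \]

\[ \mathbf{\nabla}\cdot(\epsilon\mathbf{\mathcal{E}}) = \varrho_{ext}, \tag{20} \]

\[ \mathbf{\nabla}\cdot\mathbf{\mathcal{H}} = 0. \tag{21} \]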

This is the form in which we will be using the Maxwell equations in matter in this book. It is seen that the Maxwell equations in matter are identical to those in vacuum, with \(\epsilon\) substituted for \(\epsilon_0\).

We end this section by remarking that our derivations are valid for non-magnetic materials which are electrically isotropic. This means that the magnetic permeability is that of vacuum and that the permittivity \(\epsilon\) is a scalar. In an anisotropic dielectric the induced dipole vectors are in general not parallel to the local electric field. Then \(\chi_e\) and therefore also \(\epsilon\) become matrices. Throughout this book all matter is assumed to be non-magnetic and electrically isotropic.

We consider a homogeneous insulator (i.e. \(\epsilon\) is independent of position and \(\sigma\)=0) in which there are no external sources:

In optics the external source, e.g. a laser, is normally spatially separated from the objects of interest with which the light interacts. Therefore the assumption that the external source vanishes in the region of interest is often justified. Take the curl of (18) and the time derivative of (19) and add the equations obtained. This gives

Now for any vector field \(\mathbf{\mathcal{A}}\) there holds:

where \(\mathbf{\nabla}^2 \mathbf{\mathcal{A}}\) is the vector:

with

Because Gauss’s law (20) with \(\varrho_{ext}=0\) and \(\epsilon\) constant implies that \(\mathbf{\nabla}\cdot \mathbf{\mathcal{E}}=0\), (24) applied to \(\mathbf{\mathcal{E}}\) yields

Hence, (23) becomes

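By a similar derivation it is found that also \(\mathbf{\mathcal{H}}\) satisfies (28). Hence in a homogeneous dielectric without external sources, every component \({\cal U}(\mathbf{r},t)\) of the electromagnetic field satisfies the scalar wave equation:

\[ \mathbf{\nabla}^2 {\cal U} - \epsilon\mu_0\frac{\partial^2 {\cal U}}{\partial t^2} = 0. \tag{29} \]

The refractive index is the dimensionless quantity defined by

\[ n = \sqrt{\frac{\epsilon}{\epsilon_0}}. \tag{30} \]

The scalar wave equation can then be written as

\[ \mathbf{\nabla}^2 {\cal U} - \left(\frac{n}{c}\right)^2\frac{\partial^2 {\cal U}}{\partial t^2} = 0. \tag{31} \]

The speed of light in matter is

\[ \frac{c}{n} = \frac{1}{\sqrt{\epsilon\mu_0}}. \tag{32} \]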

Time-Harmonic Solutions of the Wave Equation

The fact that, in the frequently occurring circumstance in which light interacts with a homogeneous dielectric, all components of the electromagnetic field satisfy the scalar wave equation, justifies the study of solutions of this equation. Since in most cases in optics monochromatic fields are considered, we will focus our attention on time-harmonic solutions of the wave equation.

Time-Harmonic Plane Waves

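Time-harmonic solutions depend on time through a cosine or a sine function. One can easily verify by substitution that

\[ {\cal U}(x,t) = {\cal A}\cos(kx - \omega t + \varphi), \tag{33} \]

where \({\cal A}>0\) and \(\varphi\) are constants, is a solution of (31), provided that

\[ k = \omega\sqrt{\epsilon\mu_0} = k_0 n, \tag{34} \]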

where \(k_0=\omega \sqrt{\epsilon_0 \mu_0}\) is the wave number in vacuum. The frequency \(\omega>0\) can be chosen arbitrarily. The wave number \(k\) in the material is then determined by (34). We define \(T=2\pi/\omega\) and \(\lambda=2\pi/k\) as the period and the wavelength in the material, respectively. Furthermore, \(\lambda_0=2\pi/k_0\) is the wavelength in vacuum.
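To make these definitions concrete, the sketch below evaluates \(k_0\), \(k\), \(\omega\), \(T\) and \(\lambda\) for a given vacuum wavelength and refractive index, using \(k=n k_0\) and \(k_0=\omega/c\). The function name and the example values (632.8 nm, \(n=1.5\)) are illustrative assumptions.

```python
import math

LIGHT_SPEED = 299_792_458.0   # speed of light in vacuum in m/s


def wave_parameters(vacuum_wavelength_m: float, refractive_index: float) -> dict:
    """Wave numbers, angular frequency, period and wavelength in the material."""
    k0 = 2 * math.pi / vacuum_wavelength_m          # wave number in vacuum
    k = refractive_index * k0                       # wave number in the material
    omega = k0 * LIGHT_SPEED                        # angular frequency
    return {
        "k0": k0,
        "k": k,
        "omega": omega,
        "period": 2 * math.pi / omega,              # T = 2*pi/omega
        "wavelength_material": 2 * math.pi / k,     # lambda = 2*pi/k
    }


params = wave_parameters(632.8e-9, 1.5)
for name, value in params.items():
    print(f"{name}: {value:.4e}")
```

The frequency, and hence the period, is the same in vacuum and in the material; only the wave number and the wavelength change with \(n\).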

Remark. With “the wavelength”, we always mean the wavelength in vacuum.

We can write (33) in the form

where \(c/n=1/\sqrt{\epsilon\mu_0}\) is the speed of light in the material. \({\cal A}\) is the amplitude and the argument under the cosine: \(k\left(x-\frac{c}{n} t\right)+\varphi\) is called the phase at position \(x\) and at time \(t\). A wave front is a set of space-time points where the phase is constant:

At any fixed time \(t\) the wave fronts are planes (in this case perpendicular to the \(x\)-axis), and therefore the wave is called a plane wave. As time proceeds, the wave fronts move with velocity \(c/n\) in the positive \(x\)-direction.
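That the plane wave \({\cal A}\cos(kx-\omega t+\varphi)\) with \(k=n\omega/c\) solves the one-dimensional wave equation can also be checked numerically. The following sketch approximates both second derivatives by central differences; the units (micrometres and femtoseconds), the step size and the sample point are arbitrary choices made for this illustration.

```python
import math

LIGHT_SPEED = 0.299792458   # speed of light in vacuum in micrometre per femtosecond


def wave_equation_residual(x, t, n, vacuum_wavelength, amplitude=1.0, phase=0.3, h=1e-4):
    """Residual of d2U/dx2 - (n/c)^2 d2U/dt2 for a plane wave, relative to k^2 * amplitude."""
    k = 2 * math.pi * n / vacuum_wavelength              # wave number in the material
    omega = 2 * math.pi * LIGHT_SPEED / vacuum_wavelength

    def u(xx, tt):
        return amplitude * math.cos(k * xx - omega * tt + phase)

    # Central finite differences for the two second derivatives.
    d2_dx2 = (u(x + h, t) - 2 * u(x, t) + u(x - h, t)) / h**2
    d2_dt2 = (u(x, t + h) - 2 * u(x, t) + u(x, t - h)) / h**2
    residual = d2_dx2 - (n / LIGHT_SPEED) ** 2 * d2_dt2
    return residual / (k**2 * amplitude)


relative_residual = wave_equation_residual(x=0.7, t=1.3, n=1.5, vacuum_wavelength=0.6328)
print(f"relative residual: {relative_residual:.1e}")
```

The residual is not exactly zero because of the finite-difference approximation, but it is many orders of magnitude smaller than either term of the equation.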

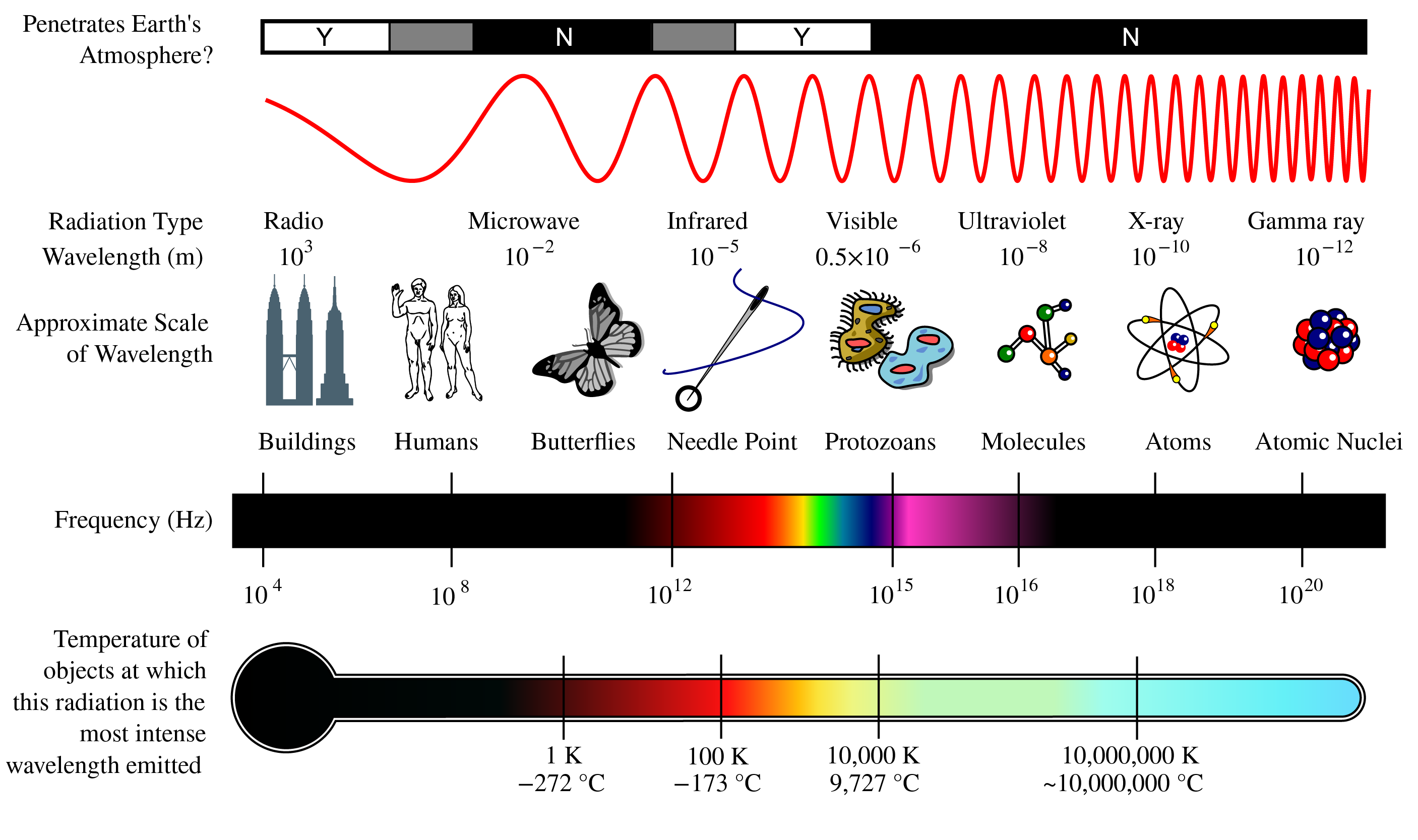

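A time-harmonic plane wave propagating in an arbitrary direction is given by

\[ {\cal U}(\mathbf{r},t) = {\cal A}\cos(\mathbf{k}\cdot\mathbf{r} - \omega t + \varphi), \tag{36} \]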

where \({\cal A}\) and \(\varphi\) are again constants and \(\mathbf{k}=k_x\hat{\mathbf{x}}+k_y \hat{\mathbf{y}}+k_z \hat{\mathbf{z}}\) is the wave vector. The wave fronts are given by the set of all space-time points \((\mathbf{r}, t)\) for which the phase \(\mathbf{k}\cdot \mathbf{r} -\omega t + \varphi\) is constant, i.e. for which

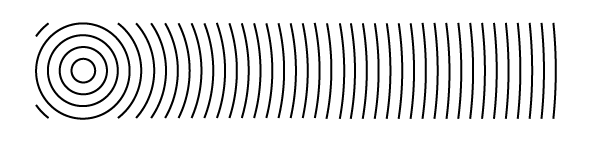

At fixed times the wave fronts are planes perpendicular to the direction of \(\mathbf{k}\) as shown in Fig. 2. Eq. (36) is a solution of (31) provided that

\[ |\mathbf{k}|^2 = k_x^2+k_y^2+k_z^2 = \omega^2\epsilon\mu_0 = k_0^2 n^2. \tag{38} \]

The direction of the wave vector can be chosen arbitrarily, but its length is determined by the frequency \(\omega\).

Fig. 2 Planes of constant phase.

We consider a general time-harmonic solution of the wave equation (29):

where the amplitude \({\cal A}(\mathbf{r})>0\) and the phase \(\varphi(\mathbf{r})\) are functions of position \(\mathbf{r}\). The wave fronts consist of sets of space-time points \((\mathbf{r},t)\) where the phase is equal to some constant:

At fixed time \(t\), the sets of constant phase: \(\varphi(\mathbf{r})=\omega t + \text{constant}\) are surfaces which in general are not planes, hence the solution in general is not a plane wave. Eq. (39) could for example be a wave with spherical wave fronts, as discussed below.

Remark. A plane wave is infinitely extended and transports an infinite amount of electromagnetic energy. A plane wave can therefore not exist in reality, but it is nevertheless a useful idealisation. As will be demonstrated in Section 7.1, every time-harmonic solution of the wave equation can always be expanded in terms of plane waves of the form (36).

For time-harmonic solutions it is often convenient to use complex notation. Define the complex amplitude by:

\[ U(\mathbf{r}) = {\cal A}(\mathbf{r})\, e^{i\varphi(\mathbf{r})}, \tag{41} \]

i.e. the modulus of the complex number \(U(\mathbf{r})\) is the amplitude \({\cal A}(\mathbf{r})\) and the argument of \(U(\mathbf{r})\) is the phase \(\varphi(\mathbf{r})\) at \(t=0\). The time-dependent part of the phase: \(-\omega t\) is thus separated from the space-dependent part of the phase. Then (39) can be written as

\[ {\cal U}(\mathbf{r},t) = \text{Re}\left[ U(\mathbf{r})\, e^{-i\omega t} \right]. \tag{42} \]

Hence \({\cal U}(\mathbf{r},t)\) is the real part of the complex time-harmonic function

Remark. The complex amplitude \(U(\mathbf{r})\) is also called the complex field. In the case of vector fields such as \(\mathbf{E}\) and \(\mathbf{H}\) we speak of complex vector fields, or simply complex fields. Complex amplitudes and complex (vector) fields are only functions of position \(\mathbf{r}\); the time-dependent factor \(\exp(-i\omega t)\) is omitted. To get the physically meaningful real quantity, the complex amplitude or complex field first has to be multiplied by \(\exp(-i\omega t)\) and then the real part must be taken.

The following convention is used throughout this book:

Real-valued physical quantities (whether they are time-harmonic or have more general time dependence) are denoted by a calligraphic letter, e.g. \(\mathcal{U}\), \(\mathcal{E}_x\), or \(\mathcal{H}_x\). The symbols are bold when we are dealing with a vector, e.g. \(\mathbf{\mathcal{E}}\) or \(\mathbf{\mathcal{H}}\). The complex amplitude of a time-harmonic function is linked to the real physical quantity by (42) and is written as an ordinary letter such as \(U\) and \(\mathbf{E}\).

It is easier to calculate with complex amplitudes (complex fields) than with trigonometric functions (cosine and sine). As long as all the operations carried out on the functions are linear, the operations can be carried out on the complex quantities. To get the real-valued physical quantity of the result (i.e. the physically meaningful result), multiply the finally obtained complex amplitude by \(\exp(-i\omega t)\) and take the real part. The reason that this works is that taking the real part commutes with all linear operations with real coefficients, such as addition, differentiation and integration, i.e. taking first the real part to get the real-valued physical quantity and then operating on this real physical quantity gives the same result as operating on the complex scalar and taking the real part at the end.
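A small numerical illustration of this point: adding two time-harmonic signals can be done on the complex amplitudes, whereas multiplying them cannot. The amplitudes, frequency and time below are arbitrary example values and `real_field` is a hypothetical helper.

```python
import cmath
import math


def real_field(complex_amplitude: complex, omega: float, t: float) -> float:
    """Real physical quantity Re[U exp(-i omega t)] belonging to a complex amplitude U."""
    return (complex_amplitude * cmath.exp(-1j * omega * t)).real


omega, t = 2.0, 0.37
U1 = 1.5 * cmath.exp(1j * 0.4)     # amplitude 1.5, phase 0.4
U2 = 0.8 * cmath.exp(-1j * 1.1)    # amplitude 0.8, phase -1.1

# Linear operation (sum): both routes agree.
sum_direct = 1.5 * math.cos(0.4 - omega * t) + 0.8 * math.cos(-1.1 - omega * t)
sum_via_complex = real_field(U1 + U2, omega, t)

# Nonlinear operation (product): the two routes give different numbers.
product_of_real_fields = real_field(U1, omega, t) * real_field(U2, omega, t)
real_part_of_complex_product = (U1 * U2 * cmath.exp(-2j * omega * t)).real

print(sum_direct, sum_via_complex)
print(product_of_real_fields, real_part_of_complex_product)
```

The first pair of numbers coincides up to rounding, while the second pair does not, which is why quadratic quantities such as energy must be computed from the real fields.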

By substituting (42) into the wave equation (31) we get

Since this must vanish for all times \(t\), it follows that the complex expression between the brackets \(\{.\}\) must vanish. To see this, consider for example the two instances \(t=0\) and \(t=\pi/(2\omega)\). We conclude that the complex amplitude satisfies

\[ \mathbf{\nabla}^2 U + k_0^2 n^2 U = 0, \tag{45} \]

where \(k_0=\omega \sqrt{\epsilon_0 \mu_0}\) is the wave number in vacuum.

Remark. The complex quantity of which the real part has to be taken is: \(U\exp(-i\omega t)\). As explained above, it is not necessary to drag the time-dependent factor \(\exp(-i \omega t )\) along in the computations: it suffices to calculate only with the complex amplitude \(U\), then multiply by \(\exp(-i\omega t)\) and then take the real part. However, when a derivative with respect to time has to be taken: \(\partial /\partial t\), the complex field must be multiplied by \(-i\omega\). This is also done in the time-harmonic Maxwell equations in the section Time-Harmonic Maxwell Equations in Matter below.
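The rule that \(\partial/\partial t\) corresponds to multiplication of the complex amplitude by \(-i\omega\) can be checked against a numerical derivative. The sketch below uses arbitrary example values; `real_field` is a hypothetical helper.

```python
import cmath


def real_field(complex_amplitude: complex, omega: float, t: float) -> float:
    """Real physical quantity Re[U exp(-i omega t)]."""
    return (complex_amplitude * cmath.exp(-1j * omega * t)).real


omega, t = 3.0, 0.25
U = 2.0 * cmath.exp(1j * 0.6)

# Time derivative obtained by multiplying the complex amplitude by -i*omega.
derivative_via_complex = real_field(-1j * omega * U, omega, t)

# Time derivative of the real field by a central finite difference.
h = 1e-6
derivative_numeric = (real_field(U, omega, t + h) - real_field(U, omega, t - h)) / (2 * h)

print(derivative_via_complex, derivative_numeric)
```

Both numbers agree to within the accuracy of the finite difference.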

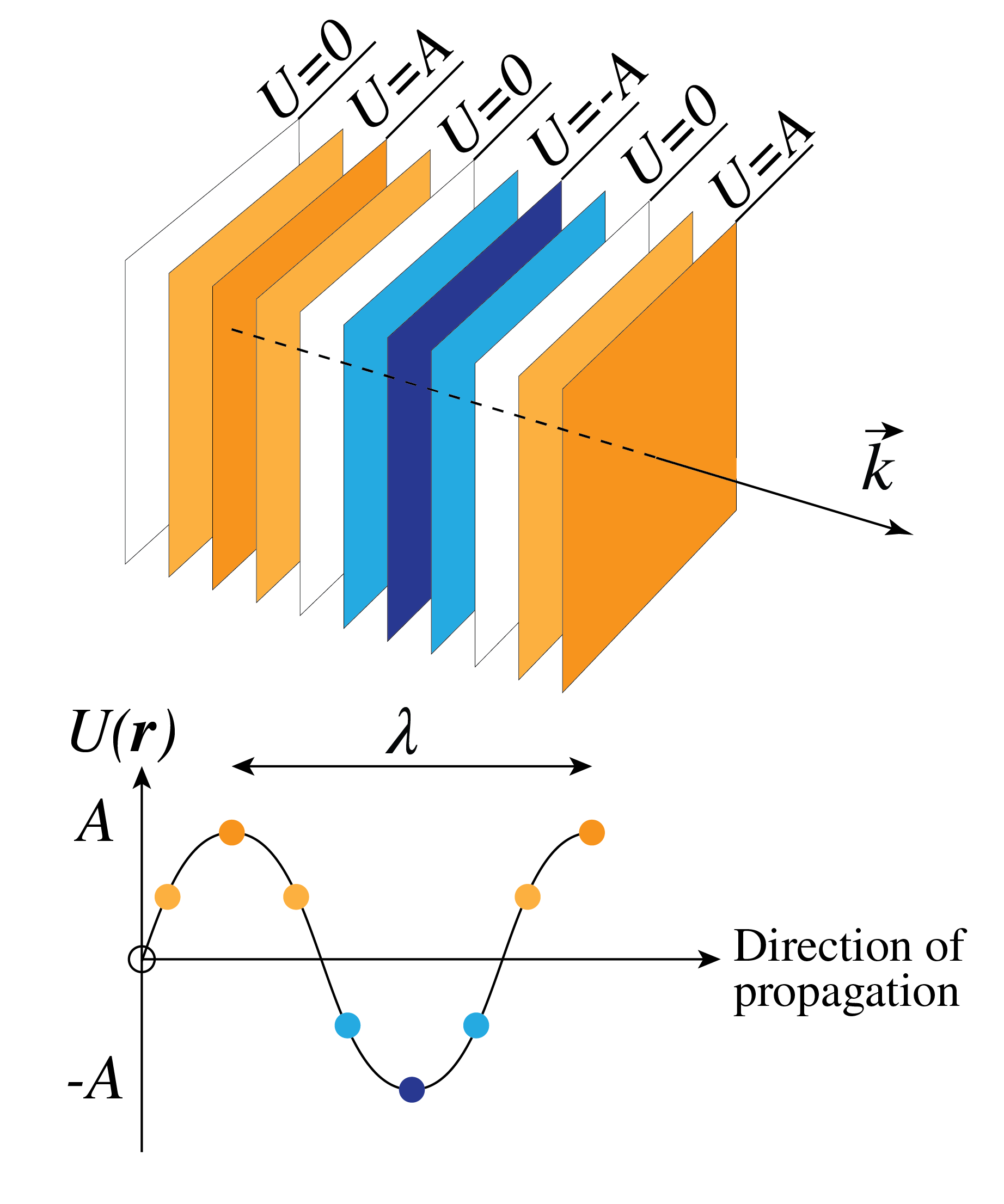

Time-Harmonic Spherical Waves

A spherical wave depends on position only through the distance to a fixed point. For simplicity we choose the origin of our coordinate system at this point. We thus seek a solution of the form \({\cal U}(r,t)\) with \(r=\sqrt{x^2+y^2+z^2}\). For spherically symmetric functions we have

It is easy to see that outside of the origin

satisfies (46) for any choice for the function \(f\), where as before \(c=1/\sqrt{\epsilon_0\mu_0}\) is the speed of light and \(n=\sqrt{\epsilon/\epsilon_0}\). Of particular interest are time-harmonic spherical waves:

where \({\cal A}\) is a constant

and \(\pm kr - \omega t +\varphi\) is the phase at \(\mathbf{r}\) and at time \(t\). A wave front is a set of space-time points \((\mathbf{r},t)\) where the phase is equal to a constant:

Wave fronts are thus spheres which move with the speed of light in the radial direction. When the \(+\) sign is chosen, the wave propagates outwards, i.e. away from the origin. The wave is then radiated by a source at the origin. Indeed, if the \(+\) sign holds in (48), then if time \(t\) increases, (50) implies that a surface of constant phase moves outwards. Similarly, if the \(-\) sign holds, the wave propagates towards the origin which then acts as a sink.

Fig. 3 Spherical wave fronts with amplitude decreasing with distance.

The amplitude of the wave \({\cal A}/r\) is proportional to the inverse distance to the source or sink. Since the time average of the local flux of energy is proportional to the square \({\cal A}^2/r^2\), the time-averaged total flux through the surface of any sphere centred at the origin is independent of the radius of the sphere.
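The argument is simply that the \(1/r^2\) decay of the local flux is compensated by the \(4\pi r^2\) growth of the sphere's area. A minimal sketch, in which the constant of proportionality between flux and squared amplitude is omitted (set to one) and the amplitude and radii are arbitrary:

```python
import math


def total_flux_through_sphere(amplitude: float, radius: float) -> float:
    """Local flux (taken as amplitude^2 / r^2) times the area of the sphere."""
    local_flux = (amplitude / radius) ** 2       # proportional to the time-averaged local flux
    sphere_area = 4 * math.pi * radius**2
    return local_flux * sphere_area


fluxes = [total_flux_through_sphere(2.0, r) for r in (0.1, 1.0, 50.0)]
print(fluxes)
```

All three values equal \(4\pi{\cal A}^2\) up to rounding, independent of the radius.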

Fig. 4 Planes of constant phase in cross-section. For an observer at large distance to the source the spherical wave looks similar to a plane wave.

Since there is a source or a sink at the origin, (48) satisfies (46) only outside of the origin. There is a \(\delta\)-function as source density on the right-hand side:

where the right-hand side corresponds to either a source or sink at the origin, depending on the sign chosen in the phase.

Using complex notation we have for the outwards propagating wave:

with \( U(\mathbf{r})=A \exp( ikr)/r\) and \(A={\cal A}\exp(i\varphi)\), where \(\varphi\) is the argument and \({\cal A}\) is the modulus of the complex number \(A\).

In Fig. 3 and Fig. 4 spherical wave fronts are shown. For an observer who is at a large distance from the source, the spherical wave looks like a plane wave which propagates from the source towards the observer (or in the opposite direction, if there is a sink).

Time-Harmonic Maxwell Equations in Matter

We now return to the Maxwell equations and consider time-harmonic electromagnetic fields, because these are by far the most important fields in optics. Using complex notation we have

with

where \(\varphi_x(\mathbf{r})\) is the argument of the complex number \(E_x(\mathbf{r})\) etc. With similar notations for the magnetic field, we obtain by substitution into Maxwell’s equations (18), (19), (20) and (21), the time-harmonic Maxwell equations for the complex fields:

where the time derivative has been replaced by multiplication of the complex fields by \(-i\omega\).

In the time-harmonic Maxwell equations, the conductivity is sometimes included in the imaginary part of the permittivity:

Although it is convenient to do this in Maxwell’s Law (56), one should remember that in Gauss’s Law (57), the original permittivity: \(\epsilon=\epsilon_0(1+\chi_e)\) should still be used. When there are no external sources: \(\varrho_{ext}=0\) and the material is homogeneous (i.e. \(\chi_e\) and \(\sigma\) are independent of position), then (57) is equivalent to

Hence in this (important) special case, (59) for the permittivity can safely be used without the risk of confusion.

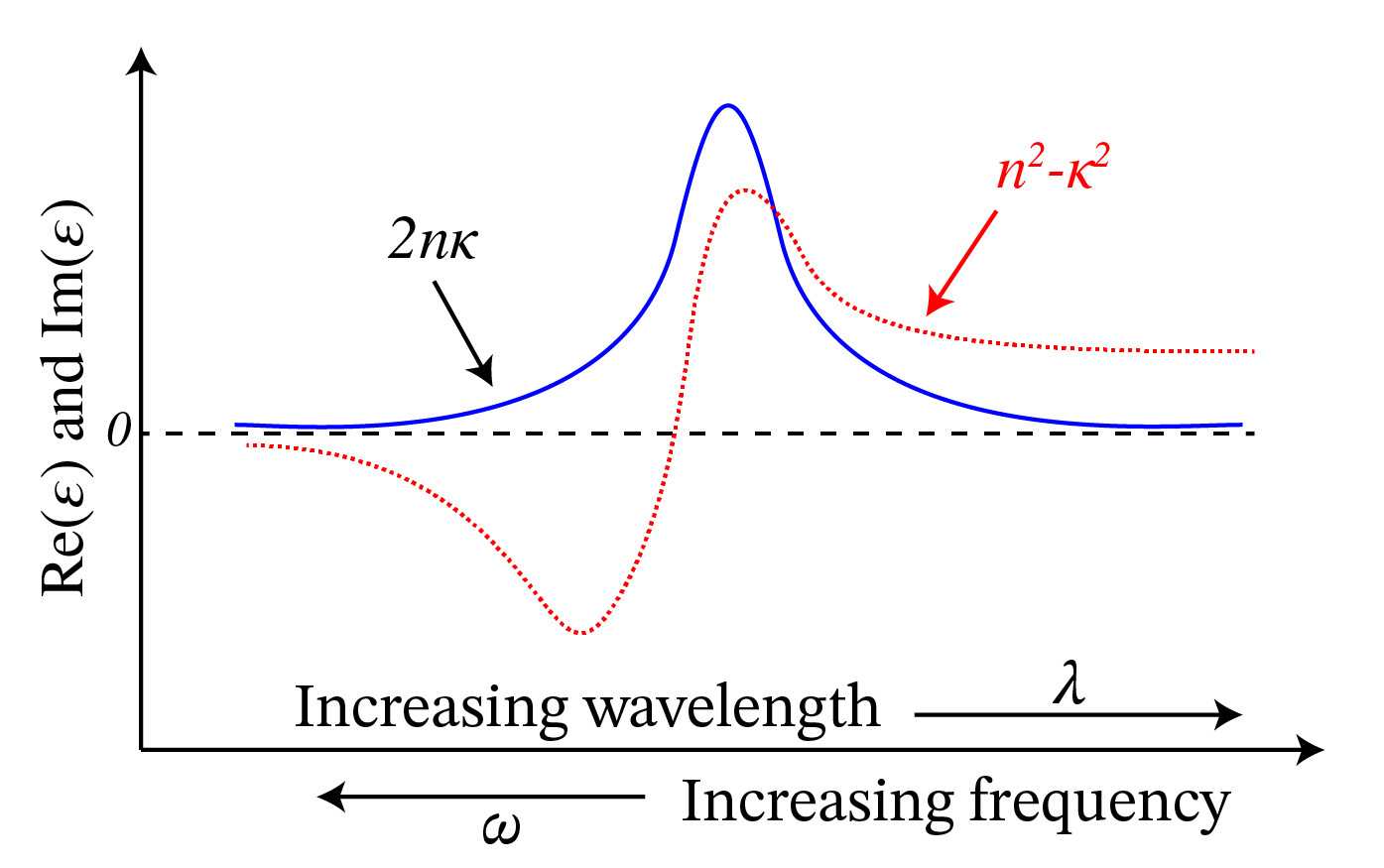

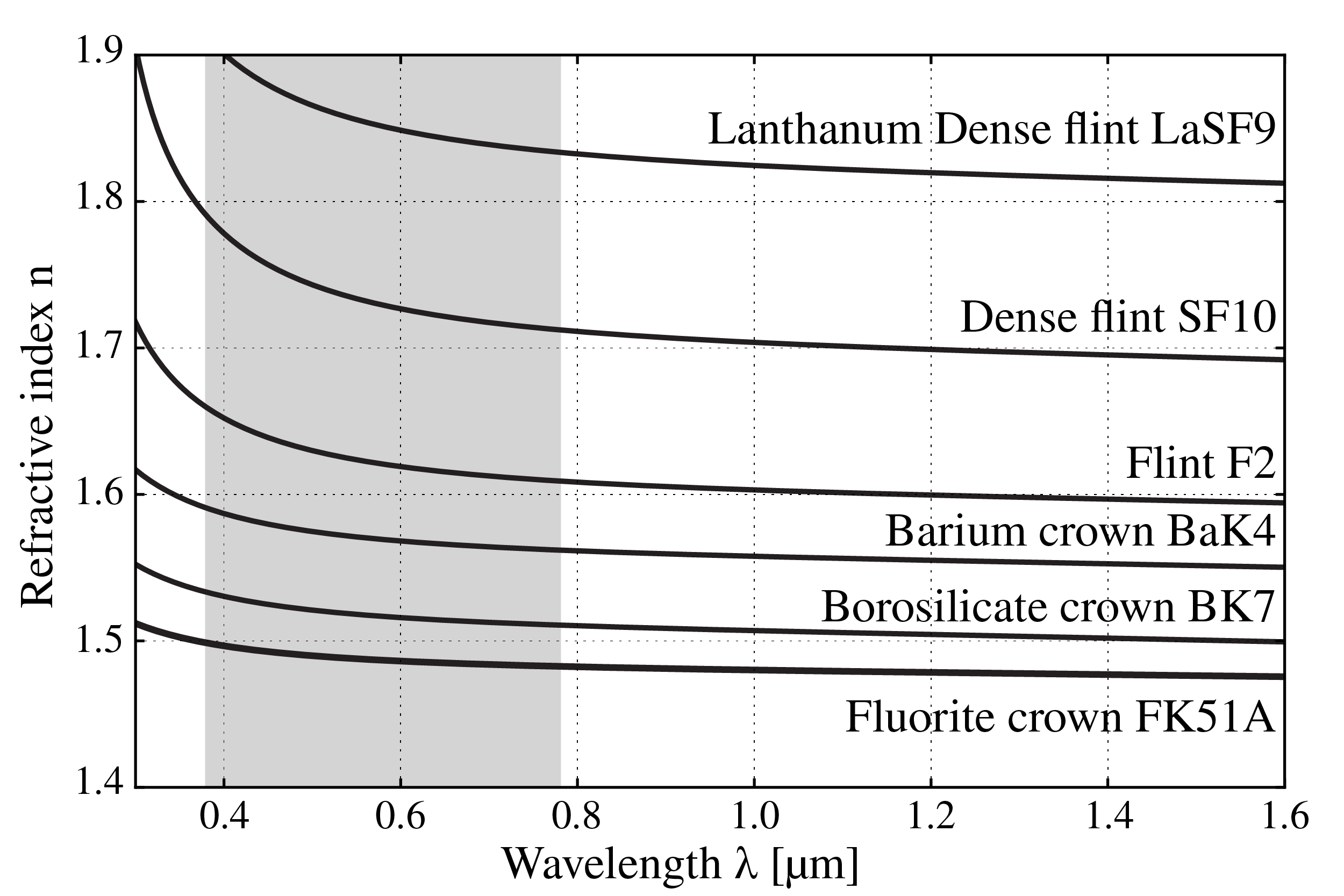

We see that when we use (59), the conductivity makes the permittivity complex and dependent on frequency. But actually, also for insulators (\(\sigma=0\)), the permittivity \(\epsilon\) depends in general on frequency and is complex with a positive imaginary part. The positive imaginary part of \(\epsilon\) is a measure of the absorption of the light by the material. The property that the permittivity depends on the frequency is called dispersion. Except close to a resonance frequency, the imaginary part of \(\epsilon(\omega)\) is small and the real part is a slowly increasing function of frequency. This is called normal dispersion. It is illustrated by the refractive index of different types of glass shown in Fig. 6.

Fig. 5 Real part \(n^2-\kappa^2\) and imaginary part \(2n\kappa\) of the permittivity \(\epsilon=(n+i\kappa)^2\), as function of wavelength and of frequency near a resonance.

Near a resonance, the real part is rapidly changing and decreases with \(\omega\) (this behaviour is called anomalous dispersion), while the imaginary part has a maximum at the resonance frequency of the material, corresponding to maximum absorption at a resonance as seen in Fig. 5. At optical frequencies, mostly normal dispersion occurs and for narrow frequency bands such as in laser light, it is often sufficiently accurate to use the value of the permittivity and the conductivity at the centre frequency of the band.

Fig. 6 Refractive index as function of wavelength for several types of glass (from Wikimedia Commons by Geek3 / CC BY-SA).

In many books the following notation is used: \(\epsilon=(n+i \kappa)^2\), where \(n\) and \(\kappa\) (“kappa”, not to be confused with the wave number \(k\)) are both real and positive, with \(n\) the refractive index and \(\kappa\) a measure of the absorption. We then have \(\text{Re}(\epsilon)=n^2-\kappa^2\) and \(\text{Im}(\epsilon)=2 n \kappa\) (see Fig. 5). Note that although \(n\) and \(\kappa\) are both positive, \(\text{Re}(\epsilon)\) can be negative for some frequencies. This happens for metals in the visible part of the spectrum.
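The relation \(\epsilon=(n+i\kappa)^2\) and its inverse are conveniently evaluated with complex arithmetic. In the sketch below the permittivity is treated as relative to \(\epsilon_0\); the function names are hypothetical and the values \(n=0.2\), \(\kappa=3.4\) are merely illustrative of a metal-like material, not measured data.

```python
import cmath


def permittivity_from_index(n: float, kappa: float) -> complex:
    """Relative permittivity (n + i*kappa)^2."""
    return complex(n, kappa) ** 2


def index_from_permittivity(relative_permittivity: complex) -> tuple:
    """Return (n, kappa) as the principal square root of the permittivity."""
    root = cmath.sqrt(relative_permittivity)
    return root.real, root.imag


eps_glass = permittivity_from_index(1.5, 0.0)     # lossless dielectric
eps_metal = permittivity_from_index(0.2, 3.4)     # illustrative metal-like values
n_back, kappa_back = index_from_permittivity(eps_metal)

print(eps_glass, eps_metal)
print(n_back, kappa_back)
```

For the metal-like example the real part \(n^2-\kappa^2\) is negative although \(n\) and \(\kappa\) are both positive, as noted in the text.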

Remark. When \(\epsilon\) depends on frequency, Maxwell’s equations in the form (19) and (20) for fields that are not time-harmonic can strictly speaking not be valid, because it is not clear which value of \(\epsilon\) corresponding to which frequency should be chosen. In fact, in the case of strong dispersion, the products \(\epsilon \mathbf{\mathcal{E}}\) should be replaced by convolutions in the time domain. Since we will almost always consider fields with a narrow frequency band, we shall not elaborate on this issue further.

Time-Harmonic Electromagnetic Plane Waves

In this section we assume that the material in which the wave propagates has conductivity which vanishes: \(\sigma=0\), does not absorb the light and is homogeneous, i.e. that the permittivity \(\epsilon\) is a real constant. Furthermore, we assume that in the region of space of interest there are no sources. These assumptions imply in particular that (60) holds. The electric field of a time-harmonic plane wave is given by

with

where \(\mathbf{A}\) is a constant complex vector (i.e. it is independent of position and time):

with \(A_x=|A_x| e^{i \varphi_x}\) etc. The wave vector \( \mathbf{k}\) satisfies (38). Substitution of (62) into (60) implies that

for all \(\mathbf{r}\) and hence (61) implies that also the physical real electric field is in every point \(\mathbf{r}\) perpendicular to the wave vector: \(\mathbf{\mathcal{E}}(\mathbf{r},t)\cdot \mathbf{k}=0\). For simplicity we now choose the wave vector in the direction of the \(z\)-axis and we assume that the electric field vector is parallel to the \(x\)-axis. This case is called an \(x\)-polarised electromagnetic wave. The complex field is then written as

where \(k=\omega \sqrt{\epsilon \mu_0}\) and \(A=|A| \exp(i \varphi)\). It follows from Faraday’s Law (55) that

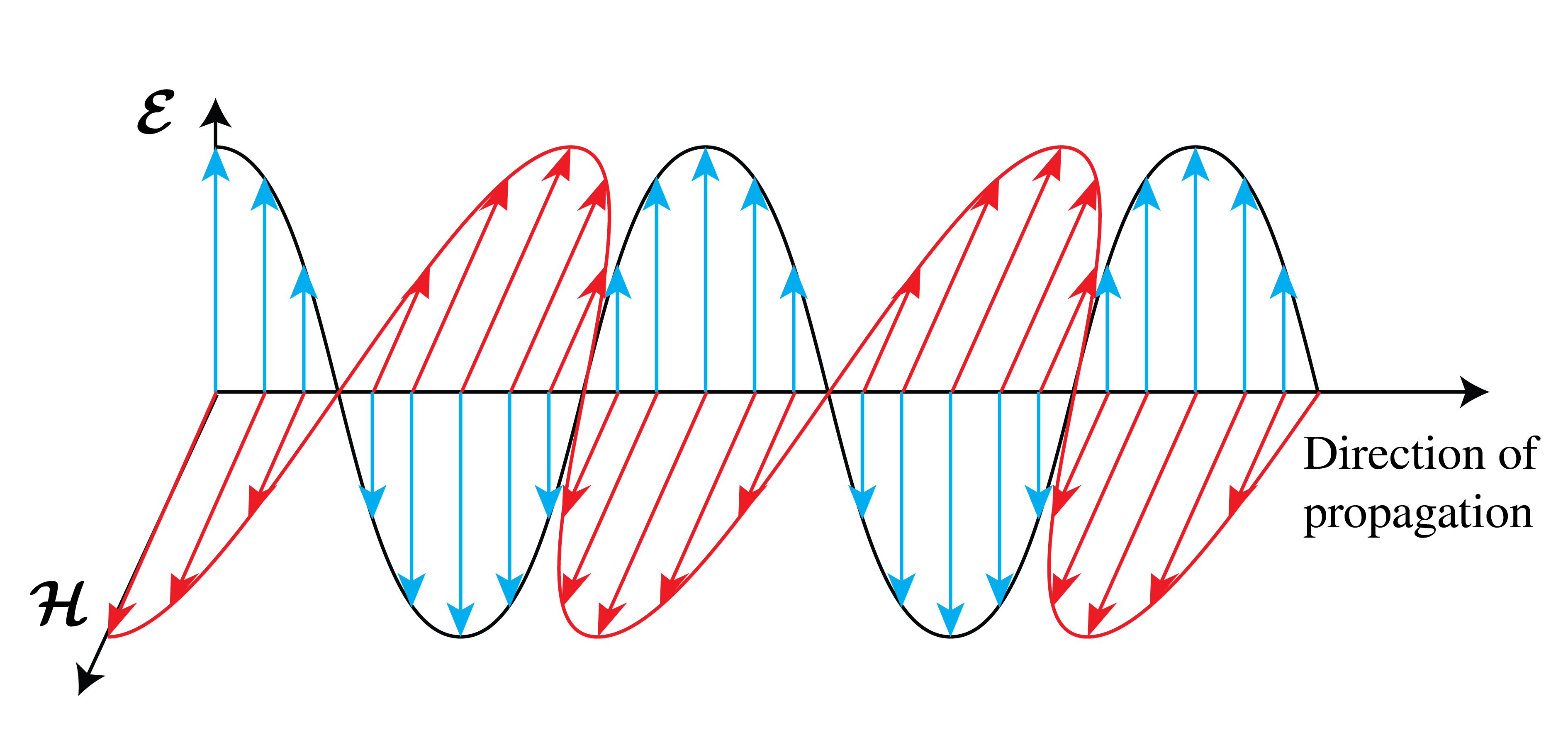

The real electromagnetic field is thus:

We conclude that in a lossless medium, the electric and magnetic field of a plane wave are in phase and at every point and at every instant perpendicular to the wave vector and to each other. As illustrated in Fig. 7, at any given time the electric and the magnetic field achieve their maximum and minimum values at the same points.

Fig. 7 The time-harmonic vectors \(\mathbf{\mathcal{E}}\) and \(\mathbf{\mathcal{H}}\) of a plane polarised wave are perpendicular to each other and to the direction of the wave vector which is also the direction of \(\mathbf{\mathcal{E}}\times \mathbf{\mathcal{H}}\).

Field of an Electric Dipole

Another important solution of Maxwell’s equations is the field radiated by a time-harmonic electric dipole, i.e. two opposite charges with equal strength that move time-harmonically around their centre of mass. In this section the medium is homogeneous, but it may absorb part of the light, i.e. the permittivity may have a nonzero imaginary part. An electric dipole is the classical electromagnetic model for an atom or molecule. Because the optical wavelength is much larger than an atom or molecule, these charges may both be considered to be concentrated at the same point \(\mathbf{r}_0\). The charge and current densities of such an elementary dipole are

with \(\mathbf{p}\) the dipole vector, defined by

where \(q>0\) is the positive charge and \(\mathbf{a}\) is the position vector of the positive with respect to the negative charge.

The field radiated by an electric dipole is very important. It is the fundamental solution of Maxwell’s equations, in the sense that the field radiated by an arbitrary distribution of sources can always be written as a superposition of the fields of elementary electric dipoles. This follows from the fact that Maxwell’s equations are linear and any current distribution can be written as a superposition of elementary dipole currents.

The field radiated by an elementary dipole in \(\mathbf{r}_0\) in homogeneous matter can be computed analytically and is given by[4]

where \(k=k_0 n \), with \(k_0\) the wave number in vacuum and \(n=\sqrt{\epsilon/\epsilon_0}\), and with \(\mathbf{R}=\mathbf{r}-\mathbf{r}_0\), \(\hat{\mathbf{R}}=\mathbf{R}/R\). It is seen that the complex electric and magnetic fields are proportional to the complex spherical wave:

discussed in Time-Harmonic Spherical Waves, but that these fields contain additional position dependent factors. In particular, at large distance to the dipole:

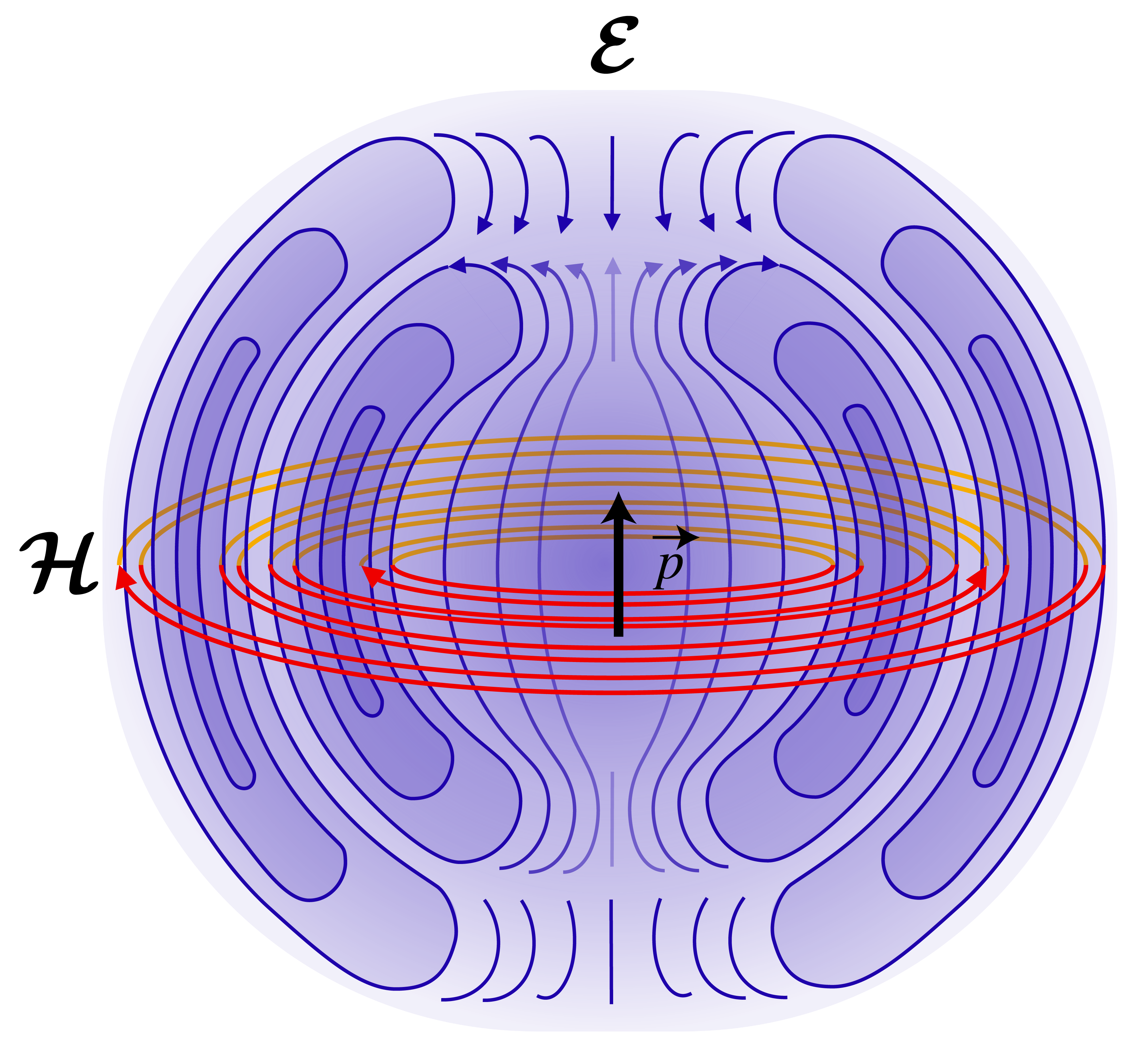

Fig. 8 Electric and magnetic field lines created by a radiating dipole (from Wikimedia Commons, original JPG due to Averse, SVG by Maschen / CC0).

In Fig. 8 are drawn the electric and magnetic field lines of a radiating dipole. For an observer at large distance from the dipole, the electric and magnetic fields are perpendicular to each other and perpendicular to the direction of the line of sight \(\hat{\mathbf{R}}\) from the dipole to the observer. Furthermore, the electric field is in the plane through the dipole vector \(\mathbf{p}\) and the vector \(\hat{\mathbf{R}}\), while the magnetic field is perpendicular to this plane. So, for a distant observer the dipole field is similar to that of a plane wave which propagates from the dipole towards the observer and has an electric field parallel to the plane through the dipole and the line of sight \(\hat{\mathbf{R}}\) and perpendicular to \(\hat{\mathbf{R}}\). Furthermore, the amplitudes of the electric and magnetic fields depend on the direction of the line of sight, with the field vanishing when the line of sight \(\hat{\mathbf{R}}\) is parallel to the dipole vector \(\mathbf{p}\) and with maximum amplitude when \(\hat{\mathbf{R}}\) is in the plane perpendicular to the dipole vector. This result agrees with the well-known radiation pattern of an antenna when the current of the dipole is in the same direction as that of the antenna.
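The angular dependence described here can be illustrated numerically. The sketch assumes the standard far-field form in which the electric field is proportional to \(\hat{\mathbf{R}}\times(\hat{\mathbf{R}}\times\mathbf{p})\,e^{ikR}/R\); only the angular factor \(|\hat{\mathbf{R}}\times(\hat{\mathbf{R}}\times\mathbf{p})|\) is evaluated, and the helper names are hypothetical.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def norm(a):
    """Euclidean length of a 3-vector."""
    return math.sqrt(sum(c * c for c in a))


def far_field_angular_factor(line_of_sight, dipole):
    """|R_hat x (R_hat x p)|: angular factor of the far-field electric amplitude."""
    length = norm(line_of_sight)
    r_hat = tuple(c / length for c in line_of_sight)
    return norm(cross(r_hat, cross(r_hat, dipole)))


DIPOLE = (0.0, 0.0, 1.0)   # unit dipole vector along the z-axis
pattern = {}
for theta_deg in (0, 30, 60, 90):
    theta = math.radians(theta_deg)
    direction = (math.sin(theta), 0.0, math.cos(theta))
    pattern[theta_deg] = far_field_angular_factor(direction, DIPOLE)
    print(theta_deg, round(pattern[theta_deg], 4))
```

The factor equals \(|\mathbf{p}|\sin\theta\), with \(\theta\) the angle between the line of sight and the dipole vector: it vanishes along the dipole axis and is largest in the plane perpendicular to it.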

Electromagnetic Energy

The total energy stored in the electromagnetic field per unit of volume at a point \(\mathbf{r}\) is equal to the sum of the electric and the magnetic energy densities. We postulate that the results for the energy densities derived in electrostatics and magnetostatics are also valid for the fast-oscillating fields in optics; hence we assume that the total electromagnetic energy density is given by:

It is to be noticed that we assume in this section that the permittivity is real, i.e. there is no absorption and the permittivity does not include the conductivity.

Time dependent electromagnetic fields propagate energy. The flow of electromagnetic energy at a certain position \(\mathbf{r}\) and time \(t\) is given by the Poynting vector, which is defined by

More precisely, the flow of electromagnetic energy through a small surface \(\mathrm{d}S \) with normal \(\hat{\mathbf{n}}\) at point \(\mathbf{r}\) is given by

If this scalar product is positive, the energy flow is in the direction of \(\hat{\mathbf{n}}\), otherwise it is in the direction of \(-\hat{\mathbf{n}}\). Hence the direction of the vector \(\mathbf{\mathcal{S}}(\mathbf{r},t)\) is the direction of the flow of energy at point \(\mathbf{r}\) and the length \(\| \mathbf{\mathcal{S}}(\mathbf{r},t)\|\) is the amount of the flow of energy, per unit of time and per unit of area perpendicular to the direction of \(\mathbf{\mathcal{S}}\). This quantity has unit J/(s.\(\text{m}^2\)).

That the Poynting vector gives the flow of energy can be seen in a dielectric for which dispersion may be neglected by the following derivation. We consider the change with time of the total electromagnetic energy in a volume \(V\):

By substituting (18), (19) and using

which holds for any two vector fields, we find

where \(S\) is the surface bounding volume \(V\) and \(\hat{\mathbf{n}}\) is the unit normal on \(S\) pointing out of \(V\). Hence,

This equation says that the rate of change with time of the electromagnetic energy in a volume \(V\) plus the work done by the field on the conduction and external currents inside \(V\) is equal to the influx of electromagnetic energy through the boundary of \(V\).

Remark. The energy flux \(\mathbf{\mathcal{S}}\) and the energy density \(U_{em}\) depend quadratically on the field. For \(U_{em}\) the quadratic dependence on the electric and magnetic fields is clear. To see that the Poynting vector is also quadratic in the electromagnetic field, one should realise that the electric and magnetic fields are inseparable: they together form the electromagnetic field. Stated differently: if the amplitude of the electric field is doubled, then also that of the magnetic field is doubled and hence the Poynting vector is increased by the factor 4. Therefore, when computing the Poynting vector or the electromagnetic energy density of a time-harmonic electromagnetic field, the real-valued vector fields should be used, i.e. the complex fields should NOT be used. An exception is the calculation of the long-time average of the Poynting vector or the energy density. As we will show in the next section, the time averages of the energy flux and energy density of time-harmonic fields can actually be expressed quite conveniently in terms of the complex field amplitudes.

If we substitute the real fields (65), (66) of the plane wave in the Poynting vector and the electromagnetic energy density we get:

We see that the energy flow of a plane wave is in the direction of the wave vector, which is also the direction of the phase velocity. Furthermore, it changes with time at frequency \(2\omega\).

Time-Averaged Energy of Time-Harmonic Fields

Optical frequencies are in the range of \(5 \times 10^{14}\) Hz and the fastest detectors working at optical frequencies have integration times larger than \(10^{-10}\) s. Hence there is no detector which can measure the time fluctuations of the electromagnetic fields at optical frequencies and any detector always measures an average value, taken over an interval of time that is very large compared to the period \(2\pi/\omega\) of the light wave, typically at least a factor \(10^5\) longer. We therefore compute averages over such time intervals of the Poynting vector and of the electromagnetic energy. Because the Poynting vector and energy density depend nonlinearly (quadratically) on the field amplitudes, we can not perform the computations using the complex amplitudes and take the real part afterwards, but have instead to start from the real quantities. Nevertheless, it turns out that the final result can be conveniently expressed in terms of the complex field amplitudes.

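Consider two time-harmonic functions:

\[ \mathcal{A}(t) = \text{Re}\left[ A e^{-i\omega t}\right], \qquad \mathcal{B}(t) = \text{Re}\left[ B e^{-i\omega t}\right], \]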

with \(A=|A| \exp(i\varphi_A)\) and \(B=|B| \exp(i\varphi_B)\) the complex amplitudes. For a general function of time \(f(t)\) we define the time average over an interval \(T\) at a certain time \(t\) by

where \(T\) is much larger (say a factor of \(10^5\)) than the period of the light. For time-harmonic fields the average does not depend on the precise time \(t\) at which it is computed, and we therefore take \(t=0\) and write

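With

\[ \mathcal{A}(t) = \text{Re}\left[ A e^{-i\omega t}\right] = \frac{1}{2}\left[ A e^{-i\omega t} + A^* e^{i\omega t}\right], \]

where \(A^*\) is the complex conjugate of \(A\), and with a similar expression for \({\cal B}(t)\), it follows that

\[ \langle \mathcal{A}(t)\mathcal{B}(t)\rangle = \frac{1}{4}\left\langle A B^* + A^* B + A B e^{-2i\omega t} + A^* B^* e^{2i\omega t}\right\rangle = \frac{1}{2}\text{Re}\left[ A B^*\right], \]

because the two terms that oscillate at frequency \(2\omega\) average to zero over a time interval that is long compared with the period.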

This important result will be used over and over again. In words:

Note

The average of the product of two time-harmonic quantities over a long time interval compared with the period, is half the real part of the product of the complex amplitude of one quantity and the complex conjugate of the other.
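The rule can be checked numerically. The following is a minimal sketch, assuming the time dependence \(\exp(-i\omega t)\) used in this chapter; the function name `time_average_product` and the sample amplitudes are illustrative choices, not part of the text.

```python
import numpy as np

def time_average_product(A, B, omega, n_periods=1000, samples_per_period=64):
    """Average Re[A e^{-i w t}] * Re[B e^{-i w t}] over n_periods full periods."""
    T = n_periods * 2 * np.pi / omega
    # Uniform samples over an integer number of periods (end point excluded)
    t = np.linspace(0.0, T, n_periods * samples_per_period, endpoint=False)
    a = np.real(A * np.exp(-1j * omega * t))
    b = np.real(B * np.exp(-1j * omega * t))
    return np.mean(a * b)

# Illustrative complex amplitudes and an optical angular frequency
A = 2.0 * np.exp(1j * 0.3)
B = 0.5 * np.exp(-1j * 1.1)
omega = 2 * np.pi * 5e14

numeric = time_average_product(A, B, omega)
closed_form = 0.5 * np.real(A * np.conj(B))   # half the real part of A B*
print(numeric, closed_form)
```

The two printed numbers should agree to within rounding error, while the instantaneous product itself oscillates at frequency \(2\omega\).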

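If we apply this to Poynting’s vector of a general time-harmonic electromagnetic field:

\[ \mathbf{\mathcal{S}}(\mathbf{r},t) = \text{Re}\left[\mathbf{E}(\mathbf{r}) e^{-i\omega t}\right] \times \text{Re}\left[\mathbf{H}(\mathbf{r}) e^{-i\omega t}\right], \]

then we find that the time-averaged energy flow denoted by \(\mathbf{S}(\mathbf{r})\) is given by

\[ \mathbf{S}(\mathbf{r}) = \frac{1}{2}\text{Re}\left[\mathbf{E}(\mathbf{r}) \times \mathbf{H}(\mathbf{r})^*\right]. \]

Similarly, the time-averaged electromagnetic energy density is:

\[ \langle U_{em}(\mathbf{r})\rangle = \frac{\epsilon}{4}|\mathbf{E}(\mathbf{r})|^2 + \frac{\mu_0}{4}|\mathbf{H}(\mathbf{r})|^2. \]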

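For the special case of the plane wave (65), (66) in a medium without absorption, we get:

\[ \mathbf{S} = \frac{1}{2}\sqrt{\frac{\epsilon}{\mu_0}}\, |\mathbf{A}|^2\, \hat{\mathbf{k}}, \tag{92} \]

where \(\mathbf{A}\) denotes the complex amplitude of the electric field and \(\hat{\mathbf{k}}\) the unit vector in the direction of the wave vector. The length of vector (92) is the time-averaged flow of energy per unit of area in the direction of the plane wave and is commonly called the intensity of the wave. For the time-averaged electromagnetic energy density of the plane wave, we get:

\[ \langle U_{em}\rangle = \frac{\epsilon}{2}|\mathbf{A}|^2. \]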

Note

For a plane wave both the time-averaged energy flux and the time-averaged energy density are proportional to the squared modulus of the complex electric field.
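To get a feeling for the orders of magnitude, the sketch below evaluates the intensity and the energy density of a plane wave, assuming the standard lossless expressions \(\frac{1}{2}\sqrt{\epsilon/\mu_0}\,|\mathbf{A}|^2\) and \(\frac{1}{2}\epsilon|\mathbf{A}|^2\). The function names and the amplitude of 1 V/m are illustrative assumptions.

```python
import math

EPS0 = 8.8541878128e-12      # vacuum permittivity [F/m]
MU0 = 4e-7 * math.pi         # vacuum permeability [H/m] (rounded value, adequate here)

def plane_wave_intensity(amplitude, n=1.0):
    """Time-averaged intensity [W/m^2] for field amplitude |A| [V/m], refractive index n."""
    eps = n**2 * EPS0
    return 0.5 * math.sqrt(eps / MU0) * abs(amplitude)**2

def plane_wave_energy_density(amplitude, n=1.0):
    """Time-averaged electromagnetic energy density [J/m^3]."""
    eps = n**2 * EPS0
    return 0.5 * eps * abs(amplitude)**2

intensity = plane_wave_intensity(1.0)
energy_density = plane_wave_energy_density(1.0)
# The ratio of the two is the speed at which the energy is transported
print(intensity, energy_density, intensity / energy_density)
```

Both quantities scale with \(|\mathbf{A}|^2\), and in vacuum their ratio equals the speed of light, as expected for energy that is carried along with the wave.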

Reflection and Transmission at an Interface#

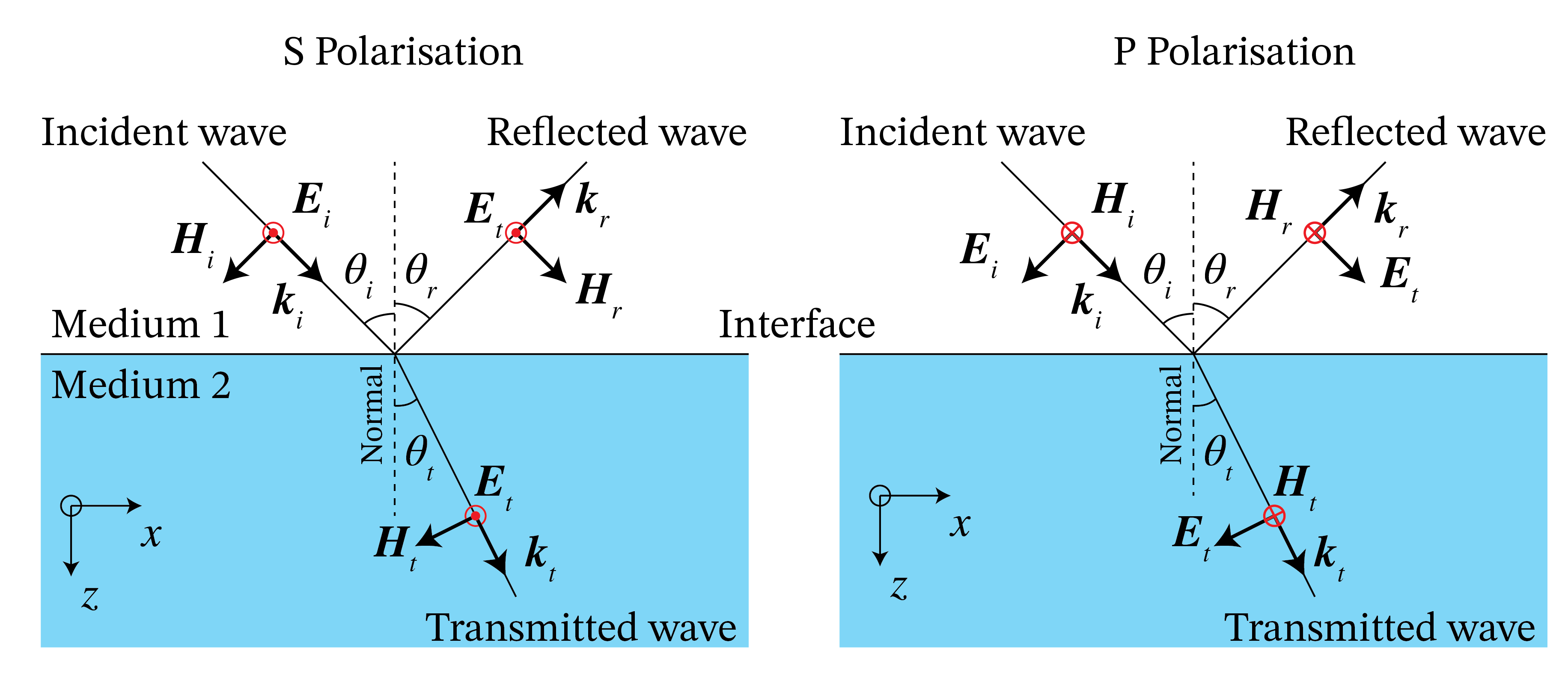

When an electromagnetic field is incident on an interface between different media, the field is partially reflected and partially transmitted. An important special case is that of a monochromatic plane wave which is incident on a planar interface as in Fig. 10.

Let the interface be the plane \(z=0\) between materials in \(z<0\) and \(z>0\) with permittivities \(\epsilon_i\) and \(\epsilon_t\), respectively. We first assume that the materials are lossless, i.e. that the permittivities are real. The plane wave is incident from medium \(z<0\) and the incident electromagnetic field is given by:

where \(\mathbf{k}^i= k_x^i \hat{\mathbf{x}} +k_y^i\hat{\mathbf{y}} + k_z^i \hat{\mathbf{z}}\), with

Because the time dependence is given by \(\exp(-i\omega t)\) with \(\omega>0\) and the incident wave propagates in the positive \(z\)-direction, the positive square root is chosen for \(k_z^i\). Part of the incident field is reflected into \(z<0\) and part is transmitted into \(z>0\). The reflected field is written as

where \(\mathbf{k}^r= k_x^r \hat{\mathbf{x}} +k_y^r\hat{\mathbf{y}} + k_z^r \hat{\mathbf{z}} \), with

where the minus sign is chosen because the reflected wave propagates in the negative \(z\)-direction. The transmitted field is for \(z>0\)

where \(\mathbf{k}^t= k_x^t \hat{\mathbf{x}} +k_y^t \hat{\mathbf{y}} + k_z^t \hat{\mathbf{z}}\), with

Our aim is to determine \(\mathbf{A}^r\) and \(\mathbf{A}^t\) for given \(\mathbf{A}^i\).

There exist conditions for the continuity of the tangential and the normal components of both the electric and magnetic fields at an interface between different media. The boundary conditions for the tangential components follow from the Maxwell equations that contain the curl-operator, i.e. (55) and (56). There holds for the interface \(z=0\) with the incident, reflected and transmitted plane waves introduced above:

where \(\hat{\mathbf{z}}\) is the unit normal on the interface. This means that the tangential components of the total electric and total magnetic field are continuous across the interface, or explicitly:

and similarly for the magnetic field.

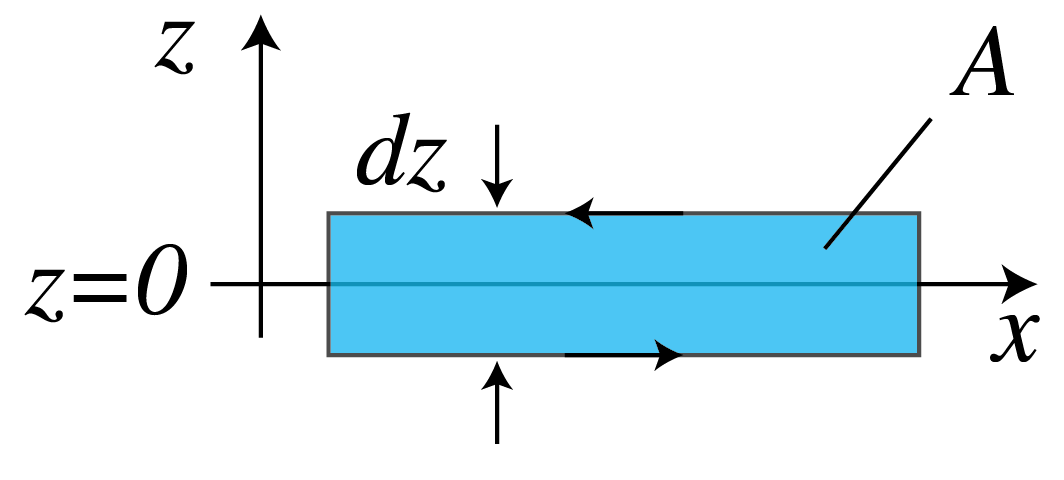

Fig. 9 Closed loop in the \((x,z)\)-plane enclosing the area \(A\) and surrounding part of the interface \(z=0\), as used in Stokes’ theorem to derive the continuity of the electric and magnetic components which are tangential to the interface and parallel to the plane through the loop.#

We will only demonstrate that the tangential components of the electric field are continuous. By choosing a closed loop in the \((x,z)\)-plane which is intersected by the interface \(z=0\) as shown in Fig. 9, and integrating the \(y\)-component of Faraday’s Law (18) for the total electromagnetic field over the area \(A\) bounded by the loop \({\cal L}\), we obtain:

where in the last step we used Stokes’ theorem with the direction of integration over the loop given by that of the direction of rotation of a screw driver when it moves in the direction of the normal \(\hat{\mathbf{y}}\). In words: the rate of change of the magnetic flux through the surface \(A\) is equal to the integral of the tangential electric field over the bounding closed loop \({\cal L}\).

By taking the limit \(\mathrm{d}z\rightarrow 0\), the surface integral and the integrals over the vertical parts of the loop vanish and there remain only the integrals of the tangential electric field over the horizontal parts parallel to the \(x\)-axis of the loop on both sides of the interface \(z=0\). Since these integrals are traversed in opposite directions and the lengths of these parts are the same and arbitrary, we conclude for the loop as shown in Fig. 9 that

where \(\mathbf{\mathcal{E}}\) is the total electric field, i.e. it is equal to the sum of the incident and the reflected field for \(z<0\), and equal to the transmitted field in \(z>0\). By choosing the closed loop in the \((y,z)\)-plane instead of the \((x,z)\)-plane one finds similarly that the \(y\)-component of the electric field is continuous. The continuity of the tangential components of the magnetic field is derived in a similar manner.

Our derivation holds for electromagnetic fields of arbitrary time dependence. Furthermore, the derivation used above for the planar interface \(z=0\) can easily be generalized for curved surfaces. Therefore we conclude:

Note

The tangential electric and magnetic field components are continuous across any interface.

By integrating Maxwell’s equations that contain the div-operator (20), (21) over a pill box with height \(\mathrm{d}z\) and top and bottom surfaces on either side and parallel to the interface, and considering the limit \(\mathrm{d}z\rightarrow 0\), we find continuity relations for the normal components of the fields:

Note

The normal components of \(\epsilon \mathbf{\mathcal{E}}\) and \(\mathbf{\mathcal{H}}\) are continuous across an interface.

Remarks.

Since the derived boundary conditions hold for all times \(t\), it follows that for time-harmonic fields they also hold for the complex fields. Hence (103) and (104) hold and similarly we find that the normal components of \(\epsilon \mathbf{E}\) and \(\mathbf{H}\) are continuous.

When the magnetic permeability is discontinuous, we have that the normal component of \(\mu \mathbf{\mathcal{H}}\) is continuous across the interface. But as has been remarked before, at optical frequencies the magnetic permeability is often that of vacuum and we assume this to be the case throughout this book.

Snell’s Law#

By substituting the complex electric fields derived from (94), (97) and (100) into equation (103), we get

Since this equation must be satisfied for all points \((x,y)\), it follows that

\[ k_x^i = k_x^r = k_x^t, \tag{112} \]

\[ k_y^i = k_y^r = k_y^t. \tag{113} \]

Hence, the tangential components of the wave vectors of the incident, reflected and transmitted waves are identical. In fact, if (112) did not hold, then by keeping \(y\) fixed, the exponential functions in (111) would not all have the same periodicity as functions of \(x\) and then (111) could never be satisfied for all \(x\). The same argument with \(x\) kept fixed leads to the conclusion (113).

Without loss of generality, we will from now on assume that the coordinate system is chosen such that

\[ k_y^i = k_y^r = k_y^t = 0. \tag{114} \]

The plane through the incident wave vector and the normal to the interface is called the plane of incidence. Hence in the case of (114) the plane of incidence is the \((x,z)\)-plane.

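Since the length of the wave vectors \(\mathbf{k}^i\) and \(\mathbf{k}^r\) is \(k_0 n_i\), with \(k_0\) the wave number in vacuum and \(n_i=\sqrt{\epsilon_i/\epsilon_0}\) the refractive index, and since the length of \(\mathbf{k}^t\) is \(k_0n_t\), with \(n_t=\sqrt{\epsilon_t/\epsilon_0}\), it follows from (112)

\[ \sin\theta_i = \frac{k_x^i}{k_0 n_i} = \frac{k_x^r}{k_0 n_i} = \sin\theta_r, \]

and

\[ n_i \sin\theta_i = \frac{k_x^i}{k_0} = \frac{k_x^t}{k_0} = n_t \sin\theta_t, \]

where the angles are as in Fig. 10. Hence,

\[ \theta_i = \theta_r \quad \text{(angle of reflection equals angle of incidence)}, \qquad n_i \sin\theta_i = n_t \sin\theta_t \quad \text{(Snell's Law)}. \tag{118} \]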

Fig. 10 The incident, reflected, and transmitted wave vectors with the electric and magnetic vectors for s- and p-polarisation. For s-polarisation the electric field points out of the plane at the instant shown while for p-polarisation the magnetic field points out of the plane at the instant shown.#

Snell’s Law[5] implies that when the angle of incidence \(\theta_i\) increases, the angle of transmission increases as well. If the medium in \(z<0\) is air with refractive index \(n_i=1\) and the other medium is glass with refractive index \(n_t=1.5\), then the maximum angle of transmission occurs when \(\theta_i=90^o\) with

\[ \theta_{t,max} = \arcsin\left(\frac{n_i}{n_t}\right) = 41.8^o. \]

In case the light is incident from glass, i.e. \(n_i=1.5\) and \(n_t=1.0\), the angle of incidence \(\theta_i\) cannot be larger than \(41.8^o\) because otherwise there is no real solution for \(\theta_t\). It turns out that when \(\theta_i> 41.8^o\), the wave is totally reflected and there is no propagating transmitted wave in air. As explained in Total Internal Reflection and Evanescent Waves, this does however not mean that there is no field in \(z>0\). In fact there is a non-propagating so-called evanescent wave in \(z>0\). The angle \(\theta_{i,crit}=41.8^o\) is called the critical angle of total internal reflection. It exists only if a wave is incident from a medium with larger refractive index on a medium with lower refractive index (\(n_t<n_i\)). The critical angle is independent of the polarisation of the incident wave.
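The numbers quoted above follow directly from Snell’s Law. The sketch below assumes real refractive indices; the function names `transmission_angle` and `critical_angle` are illustrative and not part of the text.

```python
import math

def transmission_angle(n_i, n_t, theta_i_deg):
    """Angle of transmission in degrees, or None when no real solution exists."""
    s = n_i * math.sin(math.radians(theta_i_deg)) / n_t
    if s > 1.0:
        return None                      # above the critical angle
    return math.degrees(math.asin(s))

def critical_angle(n_i, n_t):
    """Critical angle in degrees; it exists only when n_t < n_i."""
    if n_t >= n_i:
        return None
    return math.degrees(math.asin(n_t / n_i))

print(transmission_angle(1.0, 1.5, 90.0))   # largest transmission angle, air to glass
print(critical_angle(1.5, 1.0))             # glass to air
print(transmission_angle(1.5, 1.0, 45.0))   # None: totally reflected
```

The largest transmission angle from air into glass and the critical angle from glass into air are the same number, because both are \(\arcsin(1/1.5)\).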

Fresnel Coefficients#

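Because of (112) and (114), we write \(k_x=k_x^i=k_x^r=k_x^t\) and therefore \(k_z^i = \sqrt{k_0^2 n_i^2 - k_x^2} = -k_z^r\) and \(k_z^t=\sqrt{k_0^2 n_t^2-k_x^2}\). Hence,

\[ \mathbf{k}^i = k_x \hat{\mathbf{x}} + k_z^i \hat{\mathbf{z}}, \qquad \mathbf{k}^r = k_x \hat{\mathbf{x}} - k_z^i \hat{\mathbf{z}}, \]

and

\[ \mathbf{k}^t = k_x \hat{\mathbf{x}} + k_z^t \hat{\mathbf{z}}. \]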

According to (64), for the incident, reflected and transmitted plane waves there must hold:

We choose an orthonormal basis perpendicular to \(\mathbf{k}^i\) with unit vectors:

where

and where in writing the complex conjugate we anticipate the case that \(k_z^i\) is complex, which may happen for example when \(\epsilon_i\) is complex (a case that has been excluded so far but which later will be considered) or in the case of evanescent waves discussed in Total Internal Reflection and Evanescent Waves. Note that when \(k_z^i\) is real, \(|\mathbf{k}^i|=\sqrt{k_x^2 + (k_z^i)^2}=k_0n_i\). It is easy to see that the basis (123) is orthonormal in the space of two-dimensional complex vectors and that \(\hat{\mathbf{s}}\cdot\mathbf{k}^i=\hat{\mathbf{p}}^i\cdot \mathbf{k}^i=0\). The vector \(\hat{\mathbf{s}}\) is perpendicular to the plane of incidence, therefore the electric field component in this direction is polarised perpendicular to the plane of incidence and is called s-polarised (“Senkrecht” in German). The other basis vector \(\hat{\mathbf{p}}^i\) is (for real \(\mathbf{k}^i\)) parallel to the plane of incidence and when the electric field points in this direction the wave is called p-polarised. The complex vector \(\mathbf{A}^i\) can be expanded on this basis:

Since

it follows that the electric and magnetic field of the incident plane wave can be written as

The reflected field is expanded on the basis \(\hat{\mathbf{y}}\) and \(\hat{\mathbf{p}}^r\) with

The sign in front of the unit vector \(\hat{\mathbf{p}}^r\) is chosen such that its \(x\)-component is the same as that of \(\hat{\mathbf{p}}^i\). Since

it follows that

where we used that \(\mathbf{k}^r\cdot \mathbf{k}^r=k_0^2 n_i^2 \) and \(|\mathbf{k}^r|=\sqrt{k_x^2 + |k_z^r|^2}=\sqrt{k_x^2+|k_z^i|^2}=|\mathbf{k}^i|\). For the transmitted plane wave we use the basis \(\hat{\mathbf{y}}\) and \(\hat{\mathbf{p}}^t\) with

where \(\hat{\mathbf{p}}^t\) is chosen such that the \(x\)-component of \(\hat{\mathbf{p}}^t\) has the same sign as the \(x\)-component of \(\hat{\mathbf{p}}^i\). Since

we get

We now consider an s-polarised incident plane wave, i.e. \(A^i_p=0\). We will show that all boundary conditions can be satisfied by \(A^r_p=A^t_p=0\) and by appropriately expressing \(A^r_s\) and \(A^t_s\) in terms of \(A^i_s\). This implies that if the incident plane wave is s-polarised, the reflected and transmitted waves are s-polarised as well. For s-polarisation, the electric field has only a \(y\)-component and this component is tangential to the interface \(z=0\). This leads to the condition

The only tangential component of the magnetic field is the \(x\)-component and requiring it to be continuous for \(z=0\) leads to

Solving (137), (138) for \(A_s^r\) and \(A^t_s\) gives the following formula for the reflection and transmission coefficients:

Only the magnetic field has a \(z\)-component and it is easy to verify that \(H^i_z + H^r_z = H^t_z\) for \(z=0\).

By looking at the case of a p-polarised incident wave: \(A^i_s=0\), we see that the expressions for the magnetic field in the p-polarised case become similar (except for the chosen signs) to those of the electric field for s-polarisation and conversely. Enforcing the continuity of the tangential components at \(z=0\) gives for p-polarisation:

It is easy to verify that \(E_z\) is the only normal component and that \(\epsilon_i (E^i_z+E^r_z)=\epsilon_t E^t_z\) for \(z=0\).

The reflection and transmission coefficients \(r_s\), \(r_p\), \(t_s\) and \(t_p\) are called Fresnel coefficients. As follows from the derivation, there is no cross talk between s- and p-polarised plane waves incident on a planar interface. A generally polarised incident plane wave can always be written as a linear combination of s- and a p-polarised incident plane waves. Because in general \(r_s\neq r_p\) and \(t_s\neq t_p\), it follows that the reflected and transmitted fields are also linear combinations of s- and p-polarised fields, but with different coefficients (weights) of these two fundamental polarisation states than for the incident wave.

Remarks.

In the derivation of the Fresnel coefficients the continuity of the normal field components was not used and was automatically satisfied. The reason is that the electromagnetic fields of the plane waves were chosen to be perpendicular to the wave vectors. This implies that the divergence of \(\epsilon \mathbf{\mathcal{E}}\) and of \(\mathbf{\mathcal{H}}\) vanishes, which in turn implies that the normal components are automatically continuous across the interface.

When \(k_z^i\) and \(k_z^t\) are both real, we have \(|\mathbf{k}^i|=k_0n_i\) and \(|\mathbf{k}^t|=k_0n_t\) and the Fresnel coefficients can be expressed in the angles \(\theta_i\), \(\theta_r\) and \(\theta_t\) and the refractive indices \(n_i=\sqrt{\epsilon_i/\epsilon_0}\) and \(n_t=\sqrt{\epsilon_t/\epsilon_0}\). Because \(k^i_z=k_0n_i \cos\theta_i\) and \(k^t_z=k_0 n_t \cos \theta_t\), we find

and

To obtain the expressions at the far right in (143), (144), (145) and (146) Snell’s Law has been used.

The advantage of the expressions (139), (140), (141), (142) in terms of the wave vector components \(k_z^i\) and \(k_z^t\) is that these also apply when \(k_z^i\) and/or \(k_z^t\) are complex. The components \(k_z^i\) and/or \(k_z^t\) are complex when there is absorption in \(z<0\) and/or in \(z>0\). When \(\epsilon_i>\epsilon_t\) and the incident angle is above the critical angle, \(k_z^t\) is imaginary (see Total Internal Reflection and Evanescent Waves).
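As an illustration, the Fresnel coefficients can be evaluated from the wave vector components. The sketch below is written under stated assumptions: the wave vector components are divided by \(k_0\), the sign convention is the one of this section (so that \(r_p=r_s\) at normal incidence), and the formula used for \(t_p\) is only meant for the lossless case in which \(k_z^t\) is real. The function name `fresnel` is illustrative.

```python
import numpy as np

def fresnel(n_i, n_t, theta_i_deg):
    """Return (r_s, r_p, t_s, t_p) for a wave incident from index n_i onto index n_t."""
    theta = np.radians(theta_i_deg)
    kx = n_i * np.sin(theta)                   # k_x / k_0, equal on both sides
    kzi = n_i * np.cos(theta)                  # k_z^i / k_0
    kzt = np.sqrt(complex(n_t**2 - kx**2))     # k_z^t / k_0 (positive imaginary above critical angle)
    r_s = (kzi - kzt) / (kzi + kzt)
    t_s = 2 * kzi / (kzi + kzt)
    # p-polarisation: ratio of tangential electric field components
    r_p = (n_i**2 * kzt - n_t**2 * kzi) / (n_i**2 * kzt + n_t**2 * kzi)
    # Assumes |k^t| / |k^i| = n_t / n_i, i.e. a propagating transmitted wave
    t_p = 2 * n_i * n_t * kzi / (n_i**2 * kzt + n_t**2 * kzi)
    return r_s, r_p, t_s, t_p

for angle in (0.0, 30.0, 56.31, 80.0):
    print(angle, [np.round(c, 4) for c in fresnel(1.0, 1.5, angle)])
```

For air to glass, the values at \(0^o\) should reproduce the normal-incidence coefficients, and \(r_p\) should change sign near \(56.3^o\).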

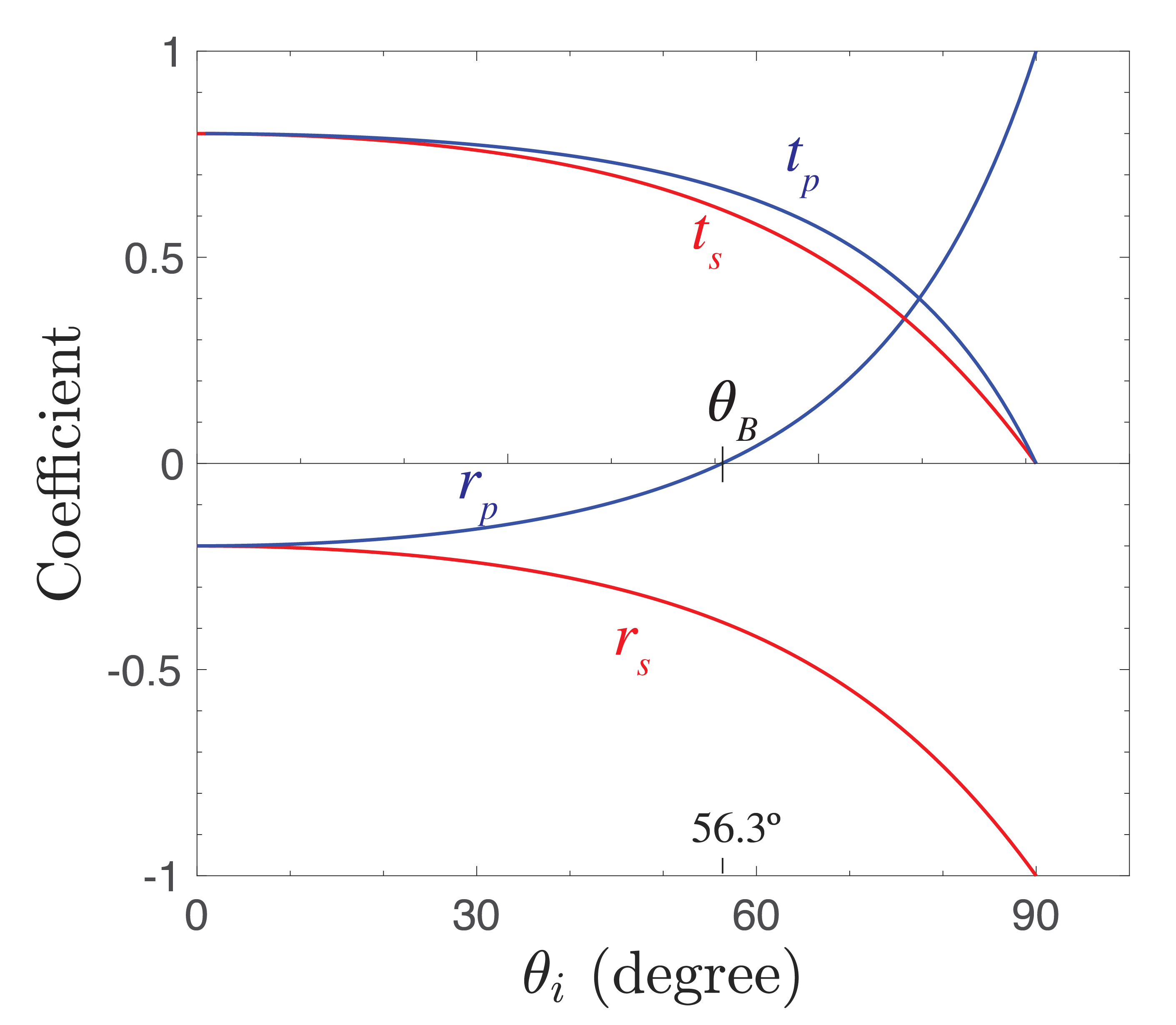

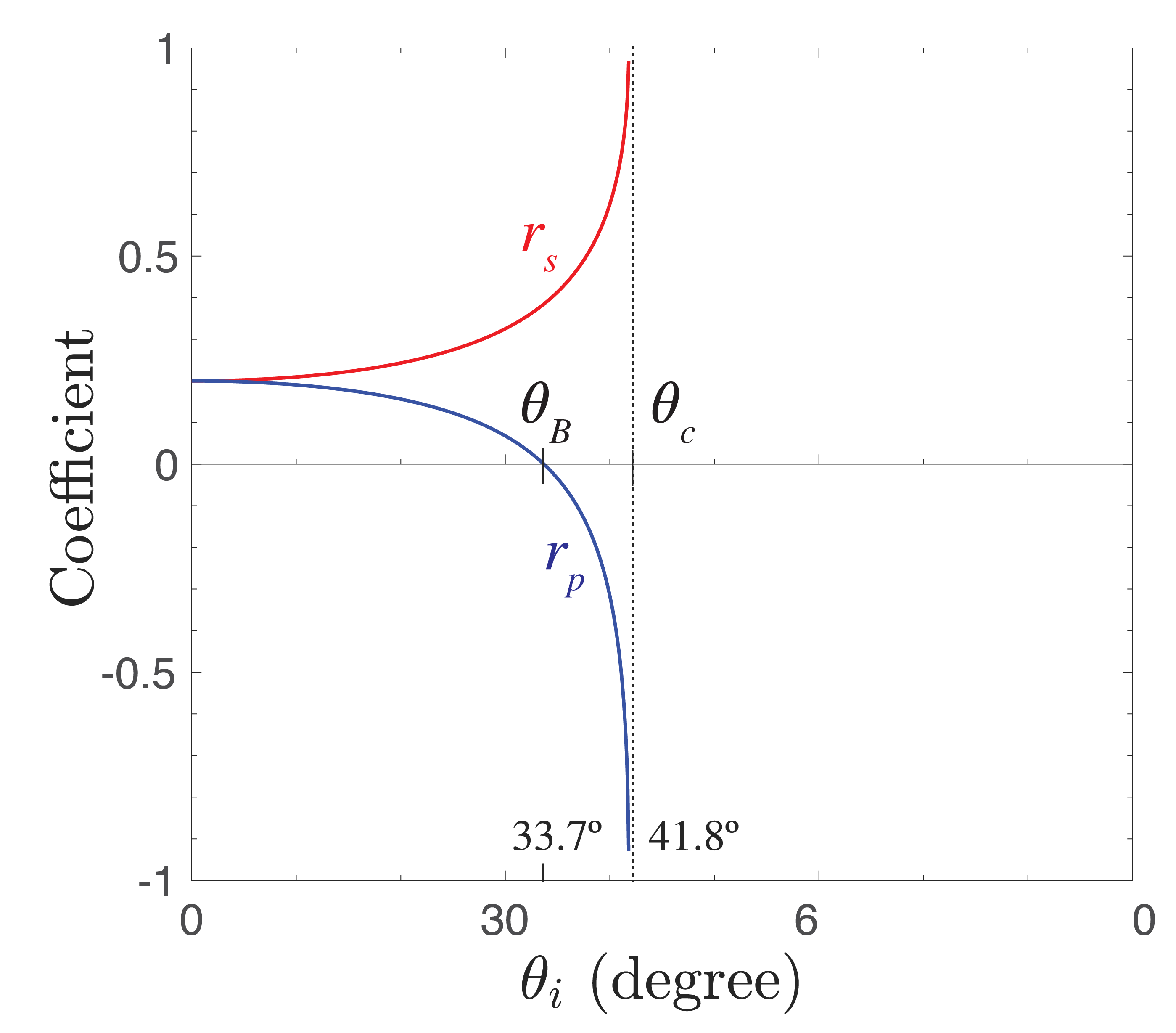

Fig. 11 Reflection and transmission coefficients as function of the angle of incidence of s- and p-polarised waves incident from air to glass. The Brewster angle \(\theta_B\) is indicated.#

Properties of the Fresnel Coefficients#

For normal incidence: \(\theta_i=0\), Snell’s Law implies: \(\theta_t=0\). Hence, (143), (145) give:

\[ r_s(\theta_i=0) = r_p(\theta_i=0) = \frac{n_i - n_t}{n_i + n_t}. \]

So for normal incidence: \(r_p=r_s\), as expected. Note however that if we had not defined \(\hat{\mathbf{p}}^r\) such that its tangential components are the same as those of \(\hat{\mathbf{p}}^i\), the two reflection coefficients for normal incidence would have had opposite signs (as is the case in some books). If the incident medium is air and the other medium is glass (\(n_i=1.0\), \(n_t=1.5\)), we get

\[ r_s(\theta_i=0) = r_p(\theta_i=0) = \frac{1.0 - 1.5}{1.0 + 1.5} = -0.2, \]

and since the flow of energy is proportional to the square of the field, it follows that 4% of the normally incident light is reflected at the interface between air and glass. Hence a lens of glass without anti-reflection coating reflects approximately 4% of the light at normal incidence. The transmission coefficient for normal incidence is:

\[ t_s(\theta_i=0) = t_p(\theta_i=0) = \frac{2 n_i}{n_i + n_t}, \]

which for air-glass becomes \(0.8\).

Remark. Energy conservation requires that the normal component \(\langle S_z \rangle\) of the time-averaged energy flux through the interface is continuous. By using the formula for the time-averaged Poynting vector of a plane wave (92), it can be verified that the Fresnel coefficients are such that the energy flux is indeed continuous.
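This verification can also be done numerically. The sketch below assumes lossless media, an angle of incidence below any critical angle, and the sign convention of this section; the normal component of the time-averaged flux of each plane wave is taken proportional to \(n\cos\theta\) times the squared modulus of its amplitude. The function name `power_coefficients` is illustrative.

```python
import numpy as np

def power_coefficients(n_i, n_t, theta_i_deg):
    """Return {'s': (R, T), 'p': (R, T)}: reflected and transmitted flux fractions."""
    ti = np.radians(theta_i_deg)
    tt = np.arcsin(n_i * np.sin(ti) / n_t)     # Snell's Law (real angle assumed)
    ci, ct = np.cos(ti), np.cos(tt)
    r_s = (n_i * ci - n_t * ct) / (n_i * ci + n_t * ct)
    t_s = 2 * n_i * ci / (n_i * ci + n_t * ct)
    r_p = (n_i * ct - n_t * ci) / (n_i * ct + n_t * ci)
    t_p = 2 * n_i * ci / (n_i * ct + n_t * ci)
    flux_ratio = (n_t * ct) / (n_i * ci)       # ratio of the normal flux per unit |amplitude|^2
    return {"s": (r_s**2, flux_ratio * t_s**2),
            "p": (r_p**2, flux_ratio * t_p**2)}

for angle in (0.0, 30.0, 60.0, 85.0):
    coeffs = power_coefficients(1.0, 1.5, angle)
    print(angle, sum(coeffs["s"]), sum(coeffs["p"]))
```

For every angle both sums should equal 1. Note that \(|r|^2+|t|^2\) alone does not add up to 1, because the transmitted beam travels in a different medium and in a different direction.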

It follows from Snell’s Law (118) that when both refractive indices \(n_i\) and \(n_t\) are real, \(\sin \theta_t = (n_i/n_t) \sin \theta_i\). Hence \(\theta_t\) monotonically increases with \(\theta_i\) and therefore there exists some \(\theta_i\) such that

\[ \theta_i + \theta_t = 90^o. \]

For this particular angle of incidence, the denominator of (145) is infinite and hence \(r_p=0\), i.e. the p-polarised wave is not reflected at all. This angle of incidence is called the Brewster angle \(\theta_{B}\)[6]. It is easy to see from (143) that the reflection is never zero for s-polarisation.

Note

If unpolarised light is incident at the Brewster angle, the reflected light will be purely s-polarised.

Since at the Brewster angle s-polarised light is only partially reflected and the rest is transmitted, the transmitted light at the Brewster angle is a mixture of s- and p-polarisation. We have \(\theta_t=90^o-\theta_i\), hence \(\sin\theta_t=\cos\theta_i\) and by Snell’s Law (writing \(\theta_i=\theta_{B}\)):

\[ \tan\theta_B = \frac{n_t}{n_i}. \]

We see that there is always a Brewster angle when both refractive indices are real, independent of whether the wave is incident from the material with the smallest or largest refractive index. For the air-glass interface we have \(\theta_{B}=56.3^o\) and \(\theta_t=33.7^o\). By (143):

\[ r_s = -\frac{\sin(\theta_i-\theta_t)}{\sin(\theta_i+\theta_t)} = -\sin(22.6^o) = -0.38, \]

so that, since half of the power of unpolarised light is s-polarised, \((0.38)^2/2=0.07\), or 7% of the unpolarised light is reflected as purely s-polarised light at the air-glass interface at the Brewster angle. For a wave incident from glass, \(\theta_{B}=33.7^o\).
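The numbers for the air-glass interface can be reproduced in a few lines. This sketch assumes the relation \(\tan\theta_B=n_t/n_i\) and the angle form of \(r_s\) with the sign convention of this section; the function name `brewster_angle` is illustrative.

```python
import math

def brewster_angle(n_i, n_t):
    """Brewster angle in degrees for incidence from index n_i onto index n_t."""
    return math.degrees(math.atan(n_t / n_i))

n_i, n_t = 1.0, 1.5
theta_B = brewster_angle(n_i, n_t)
theta_t = 90.0 - theta_B                     # at the Brewster angle theta_i + theta_t = 90 degrees
r_s = (-math.sin(math.radians(theta_B - theta_t))
       / math.sin(math.radians(theta_B + theta_t)))
# Unpolarised light: half of the power is s-polarised, and only that half is reflected
reflected_fraction = 0.5 * r_s**2

print(theta_B, theta_t, r_s, reflected_fraction)
print(brewster_angle(1.5, 1.0))              # incidence from glass
```

The Brewster angles for incidence from air and from glass add up to \(90^o\), since one is the angle of transmission of the other.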

Fig. 12 Reflection and transmission coefficients as function of the angle of incidence of s- and p-polarised waves incident from glass to air.#

In Fig. 11 the Fresnel reflection and transmission coefficients of s- and p-polarised waves are shown as functions of the angle of incidence for the case of incidence from air to glass. There is no critical angle of total reflection in this case. The Brewster angle is indicated. It is seen that the reflection coefficients decrease from the values \(-0.2\) for \(\theta_i=0^o\) to \(-1\) for \(\theta_i=90^o\). The transmission coefficients monotonically decrease to \(0\) at \(\theta_i=90^o\).

Fig. 12 shows the Fresnel coefficients when the wave is incident from glass to air. The critical angle is \(\theta_{i,crit}=41.8^o\) as derived earlier. At the angle of total internal reflection the absolute values of the reflection coefficients are equal to 1. There is again an angle where the reflection of p-polarised light is zero, namely \(\theta_{B}=33.7^o\).

Depending on the refractive indices and the angle of incidence, the Fresnel reflection coefficients can be negative. The reflected electric field then has an additional \(\pi\) phase shift compared to the incident wave. In contrast, provided that the materials are lossless, the transmitted field is always in phase with the incident field, i.e. the transmission coefficients are always positive.

Total Internal Reflection and Evanescent Waves#

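We return to the case of a wave incident from glass to air, i.e. \(n_i=1.5\) and \(n_t=1\). As has been explained, there is then a critical angle, given by

\[ \sin\theta_{i,crit} = \frac{n_t}{n_i}. \]

This is equivalent to

\[ k_x^t = k_0 n_i \sin\theta_{i,crit} = k_0 n_t. \]

The wave vector \(\mathbf{k}^t=k_x^t \hat{\mathbf{x}} + k_z^t\hat{\mathbf{z}}\) in \(z>0\) always satisfies:

\[ (k_x^t)^2 + (k_z^t)^2 = k_0^2 n_t^2, \tag{154} \]

and hence at the critical angle there holds

\[ k_z^t = 0. \]

For angles of incidence above the critical angle we have: \(k_x^t>k_0 n_t \) and it follows from (154) that \((k_z^t)^2=k_0^2n_t^2 -(k_x^t)^2<0\), hence \(k_z^t\) is imaginary:

\[ k_z^t = \pm\sqrt{k_0^2 n_t^2 - (k_x^t)^2} = \pm i \sqrt{(k_x^t)^2 - k_0^2 n_t^2}, \tag{156} \]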

where the last square root is a positive real number. It can be shown that above the critical angle the reflection coefficients are complex numbers with modulus 1: \(|r_s|=|r_p|=1\). This implies that the reflected intensity is identical to the incident intensity, while at the same time the transmission coefficients are not zero! For example, for s-polarisation we have according to (139), (140):

\[ t_s = 1 + r_s \neq 0, \]

because \(r_s \neq -1\) (although \(|r_s|=1\)). Therefore there is an electric field in \(z>0\), given by

where we have chosen the \(+\) sign in (156) to prevent the field from blowing up for \(z \rightarrow \infty\). Since \(k_x^t\) is real, the wave propagates in the \(x\)-direction. In the \(z\)-direction, however, the wave is not propagating. Its amplitude decreases exponentially as a function of distance \(z\) to the interface and therefore the wave is confined to a thin layer adjacent to the interface. Such a wave is called an evanescent wave. The Poynting vector of the evanescent wave can be computed and is found to be parallel to the interface. Hence,

Note

The flow of energy of an evanescent wave propagates parallel to the interface, namely in the direction in which \(k_x^t\) is positive.

Hence no energy is transported away from the interface into the air region. We shall return to evanescent waves in the chapter on diffraction theory.
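To make the thin layer quantitative, the sketch below evaluates \(k_z^t\), the s-polarised coefficients and the \(1/e\) decay length of the field amplitude. It assumes the expressions \(r_s=(k_z^i-k_z^t)/(k_z^i+k_z^t)\) and \(t_s=1+r_s\) with the positive imaginary root for \(k_z^t\); the wavelength of 633 nm (He-Ne laser) and the angle of \(45^o\) are illustrative choices, as is the function name `evanescent_s`.

```python
import numpy as np

def evanescent_s(n_i, n_t, theta_i_deg, wavelength):
    """Return (r_s, t_s, decay_length) for s-polarised light; lengths in metres."""
    k0 = 2 * np.pi / wavelength
    theta = np.radians(theta_i_deg)
    kx = k0 * n_i * np.sin(theta)
    kzi = k0 * n_i * np.cos(theta)
    # Principal square root: positive imaginary above the critical angle,
    # so that exp(i k_z^t z) decays for z -> infinity
    kzt = np.sqrt(complex((k0 * n_t)**2 - kx**2))
    r_s = (kzi - kzt) / (kzi + kzt)
    t_s = 2 * kzi / (kzi + kzt)
    decay_length = 1.0 / kzt.imag if kzt.imag > 0 else float("inf")
    return r_s, t_s, decay_length

r_s, t_s, decay_length = evanescent_s(1.5, 1.0, 45.0, 633e-9)
print(abs(r_s), abs(t_s), decay_length)
```

For these values the amplitude in air drops by a factor \(e\) over a distance of less than half a wavelength, while \(|r_s|=1\) and \(t_s\) is nonzero.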

External sources in recommended order

Youtube video - 8.03 - Lect 18 - Index of Refraction, Reflection, Fresnel Equations, Brewster Angle - Lecture by Walter Lewin

MIT OCW - Reflection at The Air-glass Boundary: demonstration of reflection of polarised light and the Brewster angle.

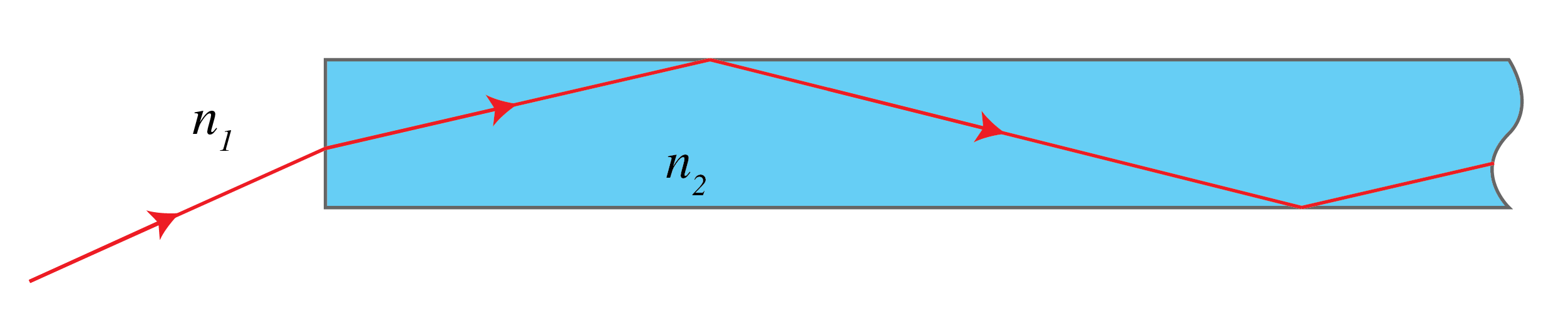

Fiber Optics#

We will show in the chapter Scalar Diffraction Optics that a light beam ultimately always becomes broader with increasing propagation distance. The divergence means that the energy density in the beam decreases with propagation distance. This divergence can be prevented by letting the light propagate inside a fiber. The guiding of light inside a fiber is based on the phenomenon of total internal reflection. The principle has been known for a long time, but the topic was greatly boosted by the invention of the laser.

Consider a straight glass cylinder of refractive index \(n_2\), surrounded by air with refractive index \(n_1=1<n_2\). The core of the cylinder has a cross section approximately the size of a human hair and hence, although quite small, it is still many optical wavelengths thick. This implies that when light strikes the cylindrical surface, we can locally consider the cylinder as a flat surface. By focusing a laser beam at the entrance plane of the fiber, light can be coupled into the fiber. The part of the light inside the fiber that strikes the cylinder surface at an angle with the normal that is larger than the critical angle of total reflection will be totally reflected. As it hits the opposite side of the cylinder surface, it will again be totally reflected and so on (Fig. 13 top).
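The guiding condition can be checked with a simple ray picture. The sketch below is a simplified model under stated assumptions: the ray lies in a plane through the fiber axis, the entrance face is flat and perpendicular to the axis, and the cylinder wall is treated locally as a flat interface. The function name `is_guided` and the second test index 1.3 are illustrative.

```python
import math

def is_guided(n_core, entrance_angle_deg, n_outside=1.0):
    """True if a ray entering the end face at the given angle with the fiber axis
    is totally reflected at the cylinder wall."""
    # Refraction at the entrance face (Snell's Law)
    sin_inside = n_outside * math.sin(math.radians(entrance_angle_deg)) / n_core
    angle_with_axis = math.asin(sin_inside)
    # The wall normal is perpendicular to the axis
    angle_with_wall_normal = math.pi / 2 - angle_with_axis
    critical = math.asin(n_outside / n_core)
    return angle_with_wall_normal > critical

print(all(is_guided(1.5, a) for a in range(0, 90)))   # glass with n_2 = 1.5 in air
print(is_guided(1.3, 89.0))                           # lower index: steep rays escape
```

For \(n_2=1.5\) in air every ray that enters through the end face is guided, because the refracted ray makes an angle of at most \(41.8^o\) with the axis and therefore at least \(48.2^o\) with the wall normal.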

Fig. 13 Top: schematic of a light ray entering a glass fiber; inside, the light is totally reflected and is guided by the fiber. Middle: light guided within a piece of glass (from Wikimedia Commons by Keerthi - CC BY). Bottom: a glass fiber optic image inverter twists an image 180 degrees from its input surface to its output surface (Image: © SCHOTT).#

Since visible light has such high frequencies (order \(10^{15}\) Hz), roughly a hundred thousand times more information can be carried through a fiber than at microwave frequencies. Today fibers with very low losses are fabricated so that signals can be sent around the earth with hardly any attenuation. Abraham van Heel, professor of optics at Delft University of Technology, showed for the first time in a paper published in Nature in 1954[7] that by packing thousands of fibers into a cable, images can be transferred, even if the bundle is bent (Fig. 13 bottom).

External sources in recommended order

MIT OCW - Single Mode Fiber: Demonstration of a single-mode fiber.

MIT OCW - Multi-mode Fiber: Demonstration of a multimode fiber.

Khan Academy - Magnetic field created by a current carrying wire (Ampere’s Law)