11. Basic probability distributions

Recommended reference: Wasserman [Was04], in particular Sections 2.3 and 2.4.

In this section we cover some of the most important examples of probability distributions.

11.1. Discrete probability distributions

11.1.1. Discrete uniform distribution

The idea of the uniform distribution is that there is a finite collection of possible outcomes, each of which is equally likely.

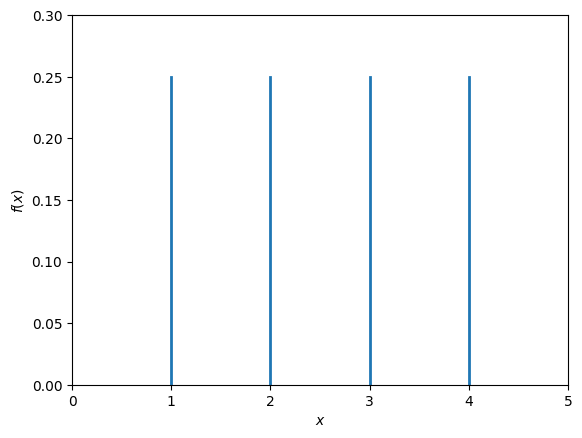

The discrete uniform distribution on a set \(S\) of size \(n\) (for example the set \(\{1,2,\ldots,n\}\)) assigns probability \(1/n\) to each element of \(S\). For a random variable \(X\) with this distribution,

\[
P(X = x) = \frac{1}{n} \quad\text{for all } x\in S.
\]

Fig. 11.1 Probability mass function of a uniform discrete random variable with values \(1,2,3,4\).
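As a small illustration of the definition above, the following sketch builds the probability mass function of a discrete uniform distribution as a dictionary. The function name `discrete_uniform_pmf` is hypothetical and chosen only for this example; exact fractions are used so that the total probability can be checked without rounding.

```python
from fractions import Fraction


def discrete_uniform_pmf(outcomes):
    """Map each element of the finite set S to the probability 1/n.

    `discrete_uniform_pmf` is an illustrative name, not a library function.
    """
    support = set(outcomes)  # S is a set, so repeated outcomes are ignored
    n = len(support)
    if n == 0:
        raise ValueError("the set of outcomes must be non-empty")
    return {x: Fraction(1, n) for x in support}


pmf = discrete_uniform_pmf([1, 2, 3, 4])
print(pmf[3])             # 1/4
print(sum(pmf.values()))  # 1
```

With the set \(\{1,2,3,4\}\) this corresponds to the situation shown in Fig. 11.1: four bars of equal height \(1/4\).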

11.1.2. Bernoulli distribution

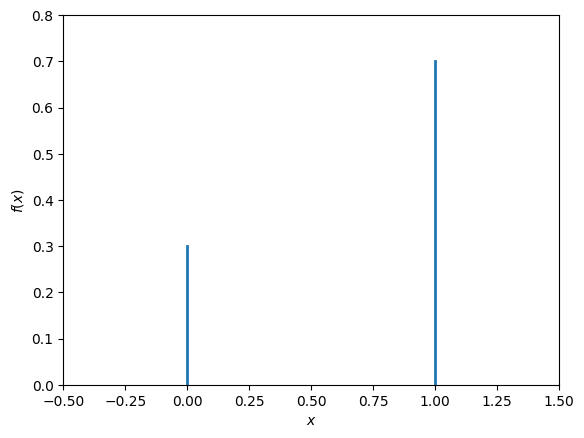

The Bernoulli distribution models an experiment that can have two outcomes, for example a (possibly biased) coin flip. We will denote the two possible outcomes by \(0\) and \(1\). The distribution depends on a parameter \(p\in[0,1]\), which is by definition the probability that the outcome equals \(1\). We have

\[
P(X = 1) = p, \qquad P(X = 0) = 1 - p.
\]

Fig. 11.2 Probability mass function of a Bernoulli random variable with \(p=0.7\).

11.1.3. Binomial distribution

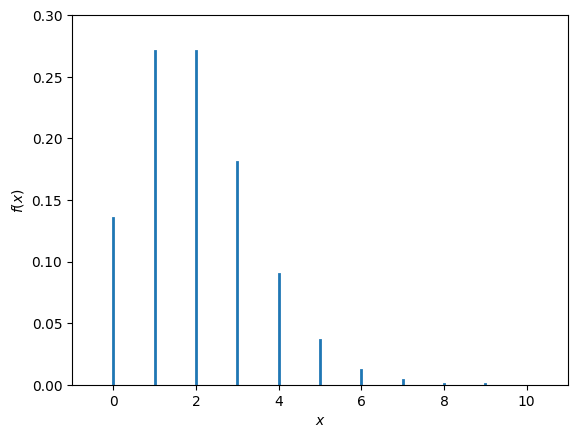

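The binomial distribution with parameters \(n\) and \(p\) is defined by the probability mass function

\[
P(X = k) = \binom{n}{k} p^k (1-p)^{n-k} \quad\text{for } k = 0, 1, \ldots, n.
\]

Here \(\binom{n}{k}=\frac{n!}{k!(n-k)!}\) is the usual binomial coefficient.

A random variable with this distribution can be viewed as the sum of \(n\) independent Bernoulli random variables with parameter \(p\); it counts how many of the \(n\) outcomes equal \(1\).

Fig. 11.3 Probability mass function of a binomial random variable with parameters \(n=10\) and \(p=0.7\).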
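The link between the binomial and Bernoulli distributions can be checked numerically. The sketch below assumes the standard binomial probability mass function \(\binom{n}{k}p^k(1-p)^{n-k}\) and compares it with the distribution obtained by adding \(n\) independent Bernoulli variables one at a time. All function names are hypothetical and serve only this illustration.

```python
from math import comb


def binomial_pmf(n, p):
    """Return [P(X=0), ..., P(X=n)] from the closed formula."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def add_independent(pmf_a, pmf_b):
    """PMF of the sum of two independent variables with values 0, 1, 2, ...

    The entry at index i + j collects P(A = i) * P(B = j) (a convolution).
    """
    out = [0.0] * (len(pmf_a) + len(pmf_b) - 1)
    for i, a in enumerate(pmf_a):
        for j, b in enumerate(pmf_b):
            out[i + j] += a * b
    return out


def sum_of_bernoullis_pmf(n, p):
    """PMF of the sum of n independent Bernoulli(p) variables."""
    bernoulli = [1 - p, p]  # P(X=0), P(X=1)
    pmf = [1.0]             # the empty sum equals 0 with probability 1
    for _ in range(n):
        pmf = add_independent(pmf, bernoulli)
    return pmf


n, p = 10, 0.7
closed = binomial_pmf(n, p)
summed = sum_of_bernoullis_pmf(n, p)

# The largest discrepancy should be at the level of floating-point rounding.
print(max(abs(a - b) for a, b in zip(closed, summed)))
```

The parameters \(n=10\) and \(p=0.7\) match Fig. 11.3, so the list `closed` contains the bar heights of that plot.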

11.1.4. Poisson distribution

The Poisson distribution with rate \(\lambda>0\) is defined by the probability mass function

\[
P(X = k) = \frac{\lambda^k e^{-\lambda}}{k!} \quad\text{for } k = 0, 1, 2, \ldots
\]

Note

The total probability equals 1 because of the Taylor series expansion of the exponential function, which tells us that

\[
e^{\lambda} = \sum_{k=0}^{\infty} \frac{\lambda^k}{k!},
\]

so that \(\sum_{k=0}^{\infty} P(X=k) = e^{-\lambda}e^{\lambda} = 1\).

The Poisson distribution is used to model the number of events in a given time interval when the events occur independently of each other and at a fixed average rate \(\lambda\).

(See also the section on the exponential distribution below.)

Fig. 11.4 Probability mass function of a Poisson random variable with parameter \(\lambda=2\).
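The statement in the note can be illustrated by computing partial sums of the Poisson probabilities, assuming the standard probability mass function \(\lambda^k e^{-\lambda}/k!\). This is a minimal sketch with hypothetical helper names; it shows the partial sums approaching 1 as more terms of the Taylor series are included.

```python
from math import exp, factorial


def poisson_pmf(k, lam):
    """P(X = k) for a Poisson distribution with rate lam."""
    return lam**k * exp(-lam) / factorial(k)


def partial_sum(lam, terms):
    """Sum of P(X = k) for k = 0, ..., terms - 1."""
    return sum(poisson_pmf(k, lam) for k in range(terms))


lam = 2.0  # same rate as in Fig. 11.4
for terms in (1, 3, 5, 10, 20):
    # The printed values increase towards 1 as `terms` grows.
    print(terms, partial_sum(lam, terms))
```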

11.2. Continuous probability distributions

11.2.1. Continuous uniform distribution

This is a continuous analogue of the discrete uniform distribution described above. In this case, the intuition that “all outcomes are equally likely” is, strictly speaking, meaningless, since every individual outcome has probability 0. What remains true is that the probability of landing in a subinterval is proportional to the length of that subinterval.

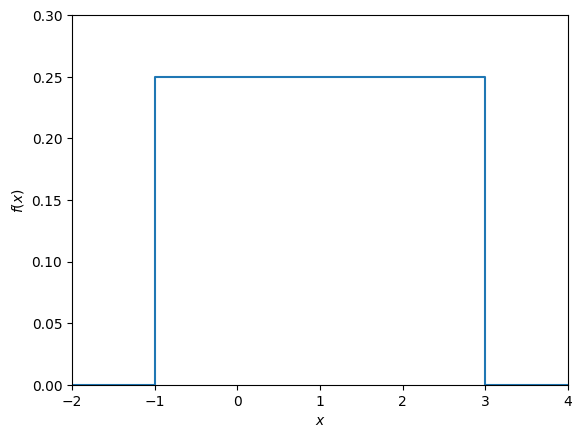

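The continuous uniform distribution on a bounded interval \([a,b]\) has constant probability density function \(1/(b-a)\) on the interval:

\[
f(x) = \begin{cases} \dfrac{1}{b-a} & \text{if } a \le x \le b,\\[1ex] 0 & \text{otherwise.} \end{cases}
\]

Fig. 11.5 Probability density function of a uniform continuous random variable.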
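For a constant density \(1/(b-a)\) on \([a,b]\), the probability of an interval is the length of its overlap with \([a,b]\) divided by \(b-a\). The sketch below (function name hypothetical) makes this concrete and shows that a single point has probability 0 while intervals of positive length do not.

```python
def uniform_interval_probability(c, d, a, b):
    """P(c <= X <= d) for X uniform on [a, b].

    Computed as (length of the overlap of [c, d] with [a, b]) / (b - a).
    """
    if not a < b:
        raise ValueError("need a < b")
    overlap = max(0.0, min(d, b) - max(c, a))
    return overlap / (b - a)


a, b = 0.0, 4.0
print(uniform_interval_probability(1.0, 2.0, a, b))    # 0.25
print(uniform_interval_probability(1.0, 1.0, a, b))    # 0.0 (a single point)
print(uniform_interval_probability(-1.0, 10.0, a, b))  # 1.0 (covers [a, b])
```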

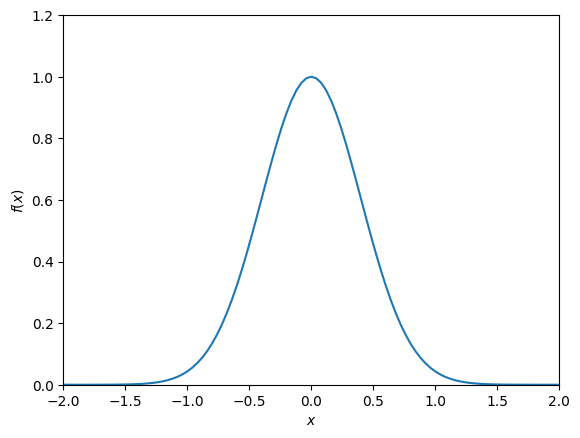

11.2.2. Normal or Gaussian distribution

The normal or Gaussian distribution with mean \(\mu\) and variance \(\sigma^2\) is defined by the probability density function

\[
f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right).
\]

The normal distribution with mean \(0\) and variance \(1\) is also called the standard normal distribution.

The normal distribution is one of the most common probability distributions. One of the reasons for this is the role that it plays in the central limit theorem.

Fig. 11.6 Probability density function of a normal (Gaussian) random variable with mean \(0\) and variance \(\frac{1}{2\pi}\).
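The choice of variance \(\frac{1}{2\pi}\) in Fig. 11.6 is convenient: assuming the standard form of the normal density, \(\frac{1}{\sqrt{2\pi\sigma^2}}\exp\bigl(-\frac{(x-\mu)^2}{2\sigma^2}\bigr)\), the prefactor becomes 1 and the density reduces to \(e^{-\pi x^2}\). A short sketch (the function name is hypothetical) confirms this numerically.

```python
from math import exp, pi, sqrt


def normal_pdf(x, mu=0.0, sigma2=1.0):
    """Density of the normal distribution with mean mu and variance sigma2."""
    return exp(-((x - mu) ** 2) / (2 * sigma2)) / sqrt(2 * pi * sigma2)


sigma2 = 1 / (2 * pi)  # the variance used in Fig. 11.6

# Peak height: approximately 1.0, up to floating-point rounding.
print(normal_pdf(0.0, 0.0, sigma2))

# For this variance the density agrees with exp(-pi * x^2).
for x in (0.5, 1.0):
    print(normal_pdf(x, 0.0, sigma2), exp(-pi * x**2))

# For comparison, the standard normal density at 0 is 1 / sqrt(2 * pi).
print(normal_pdf(0.0))
```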

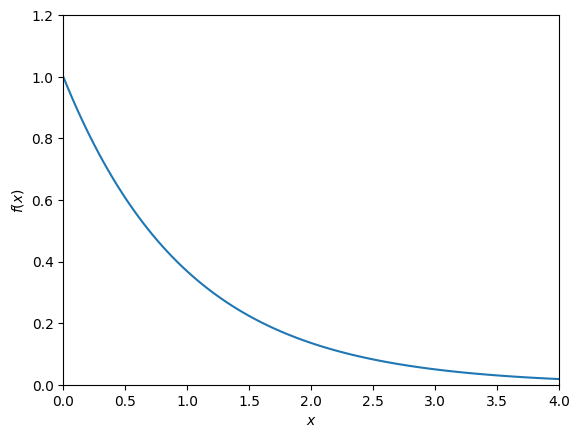

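11.2.3. Exponential distribution

The exponential distribution with rate \(\lambda>0\) is defined by the probability density function

\[
f(x) = \begin{cases} \lambda e^{-\lambda x} & \text{if } x \ge 0,\\ 0 & \text{if } x < 0. \end{cases}
\]

Fig. 11.7 Probability density function of an exponential random variable.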
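As a sanity check on the exponential density, assumed here to be \(\lambda e^{-\lambda x}\) for \(x\ge 0\), the following sketch integrates it numerically with a simple midpoint rule. It uses the standard fact, not stated in the text above, that \(P(X > t) = e^{-\lambda t}\). The helper names, the rate \(\lambda = 1.5\) and the cut-off at \(x = 60\) are arbitrary choices for this illustration.

```python
from math import exp


def exponential_pdf(x, lam):
    """Density of the exponential distribution with rate lam."""
    return lam * exp(-lam * x) if x >= 0 else 0.0


def midpoint_integral(f, lo, hi, steps=100_000):
    """Approximate the integral of f over [lo, hi] with the midpoint rule."""
    h = (hi - lo) / steps
    return h * sum(f(lo + (i + 0.5) * h) for i in range(steps))


lam = 1.5     # illustrative rate (an assumption of this example)
upper = 60.0  # cut-off; the mass beyond it is negligible for this rate


def density(x):
    return exponential_pdf(x, lam)


# Total probability: should be close to 1.
print(midpoint_integral(density, 0.0, upper))

# Tail probability P(X > 2) compared with the closed form exp(-lam * 2).
print(midpoint_integral(density, 2.0, upper), exp(-lam * 2.0))
```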

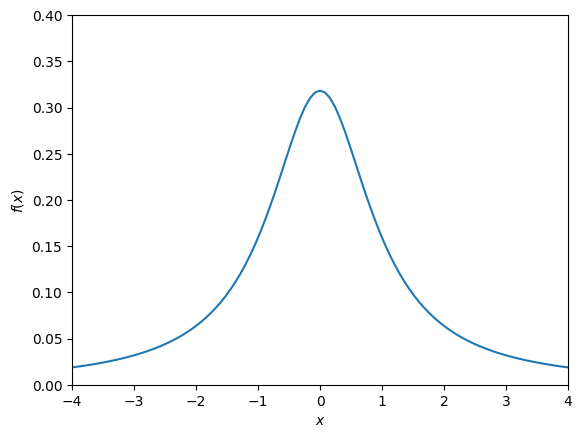

11.2.4. Cauchy or Lorentz distribution

We include this example mostly to illustrate that seemingly nice probability distributions can display “pathological” behaviour.

Definition 11.1 (Cauchy distribution)

The Cauchy (or Lorentz) distribution is defined by the probability density function

\[
f(x) = \frac{1}{\pi(1+x^2)}.
\]

The graph of this function (see Fig. 11.8 below) looks a bit like that of a normal distribution, but the “tails” decrease much less rapidly.

Let us see what happens if we try to calculate the expectation of this distribution:

\[
E(X) = \int_{-\infty}^{\infty} x f(x)\,dx = \frac{1}{\pi}\int_{-\infty}^{\infty} \frac{x}{1+x^2}\,dx = \frac{1}{2\pi}\Bigl[\log(1+x^2)\Bigr]_{-\infty}^{\infty} = \infty - \infty.
\]

This shows that the expectation is undefined. The same holds for the variance.

Fig. 11.8 Probability density function of a Cauchy (Lorentz) random variable.
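The failure of the expectation can be made concrete with truncated integrals. The sketch below assumes the standard Cauchy density \(f(x) = \frac{1}{\pi(1+x^2)}\) and uses the antiderivative \(\frac{1}{2\pi}\log(1+x^2)\) of \(x f(x)\). The function name is hypothetical. It illustrates that the value obtained depends on how the integration bounds are sent to infinity, which is what “undefined” means here.

```python
from math import log, pi


def truncated_expectation(lower, upper):
    """Integral of x * f(x) over [lower, upper] for the standard Cauchy density.

    Uses the exact antiderivative log(1 + x^2) / (2 * pi).
    """
    return (log(1.0 + upper * upper) - log(1.0 + lower * lower)) / (2.0 * pi)


# The positive half alone grows without bound (like log(R) / pi).
for R in (10.0, 1e3, 1e6, 1e12):
    print(R, truncated_expectation(0.0, R))

# Symmetric bounds [-R, R] always give 0 ...
print(truncated_expectation(-1e6, 1e6))

# ... but lopsided bounds [-R, 2R] tend to log(2) / pi instead.
print(truncated_expectation(-1e6, 2e6), log(2.0) / pi)
```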

11.3. Exercises

Exercise 11.1

Calculate the expectation and variance of a Bernoulli distribution with parameter \(p\).

Exercise 11.2

Check that the total probability of a binomial distribution with parameters \(n\) and \(p\) equals 1 (as it should).

Exercise 11.3

Calculate the expectation and variance of the continuous uniform distribution on the interval \([-1,3]\).

Exercise 11.4

Check that the total probability of a normal distribution with mean \(\mu\) and variance \(\sigma^2\) equals 1 (as it should).

Hint: use the Gaussian integral (3.3).

Exercise 11.5

Compute the expectation of \(X\) and of \(X^2\) for a Gaussian random variable \(X\) with mean \(\mu\) and variance \(\sigma^2\).

Hint: use integration by parts.

Exercise 11.6

Suppose \(X_1\) and \(X_2\) are independent continuous random variables, where \(X_1\) follows the uniform distribution on \([0,1]\) and \(X_2\) follows the uniform distribution on \([0,2]\). Find the probability density function for the random variable \(Z = X_1 + X_2\).