8.2. Quadratic forms

8.2.1. Introduction and terminology

The simplest functions from \(\R^n\) to \(\R\) are linear functions

\[f(x_1,\ldots,x_n) = a_1x_1 + a_2x_2 + \cdots + a_nx_n + b.\]

In short \(f(\mathbf{x}) = \mathbf{a}^T\mathbf{x} + b\), for some vector \(\mathbf{a}\) in \(\R^n\) and some number \(b\) in \(\R\).

This is the common notion of linearity in calculus. To be linear in the linear algebra sense the constant term \(b\) must be zero.

After that come the quadratic functions

where all parameters \(a_{ij}\), \(b_i\) and \(c\) are real numbers.

A quadratic function in the two variables \(x_1\), \(x_2\) thus becomes

Note that this can be written as

In general, a shorthand representation of Equation (8.2.1) becomes

\[f(\vect{x}) = \vect{x}^TA\vect{x} + \vect{b}^T\vect{x} + c\]

for an \(n\times n\)-matrix \(A\), a vector \(\vect{b}\) in \(\R^n\), and a number \(c\) in \(\R\).

The part \(\mathbf{x}^TA\mathbf{x}\) is called a quadratic form.

Example 8.2.1

For the matrix \(A = \begin{pmatrix} 1 & 2 \\ 4 & 3 \end{pmatrix}\) the corresponding quadratic form is

\[\vect{x}^TA\vect{x} = x_1^2 + 2x_1x_2 + 4x_2x_1 + 3x_2^2 = x_1^2 + 6x_1x_2 + 3x_2^2.\]

Note that the last expression does not uniquely determine the matrix. We can split the coefficient \(6\) of the term \(x_1x_2\) in a different way and will end up with a different matrix. If we distribute it evenly over \(x_1x_2\) and \(x_2x_1\) we get a symmetric matrix.

The above example leads to the first proposition about quadratic forms.

Proposition 8.2.1

Every quadratic form \(q(\mathbf{x})\) can be written uniquely as

\[q(\mathbf{x}) = \mathbf{x}^TA\mathbf{x}\]

for a symmetric matrix \(A\).

This symmetric matrix \(A\) is then called the matrix of the quadratic form.
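As a small numerical illustration of Proposition 8.2.1, the following Python sketch checks that the non-symmetric matrix of Example 8.2.1 and its symmetrised version \(\tfrac12(A + A^T)\) produce the same values. The helper names `quad_form` and `symmetrize` are illustrative choices, not notation from the text, and matrices are stored as plain lists of rows.

```python
def quad_form(A, x):
    """Return x^T A x for a square matrix A (list of rows) and a vector x."""
    n = len(x)
    return sum(A[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def symmetrize(A):
    """Return (A + A^T) / 2, a symmetric matrix with the same quadratic form."""
    n = len(A)
    return [[(A[i][j] + A[j][i]) / 2 for j in range(n)] for i in range(n)]


A = [[1, 2], [4, 3]]   # matrix of Example 8.2.1 (not symmetric)
S = symmetrize(A)      # the cross-term coefficient 6 is split evenly: 3 and 3

for x in [(1, 0), (0, 1), (1, 1), (2, -1)]:
    # Both matrices evaluate x1^2 + 6*x1*x2 + 3*x2^2.
    print(x, quad_form(A, x), quad_form(S, x))
```

The two printed values agree for every test vector, because only the sum \(a_{ij} + a_{ji}\) enters the quadratic form.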

Example 8.2.2

We will find the symmetric matrix \(A\) for the quadratic form

So we need a symmetric matrix \(A = \begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{12} & a_{22} & a_{23} \\ a_{13} & a_{23} & a_{33} \end{pmatrix}\).

From

we read off

So \(A = \begin{pmatrix} 1 & -2 & 0 \\ -2 & 2 & 3 \\ 0 & 3 & 5 \end{pmatrix}\).
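The read-off rule used in this example (squares go on the diagonal, each cross-term coefficient is halved and placed symmetrically) can be sketched in Python. The function name `symmetric_matrix` and the dictionary format for the coefficients are assumptions made for this illustration; the coefficients below are the ones that correspond to the matrix \(A\) stated above.

```python
from fractions import Fraction


def symmetric_matrix(n, coeffs):
    """Build the symmetric matrix of a quadratic form in n variables.

    coeffs maps a pair (i, j) with i <= j (1-based) to the coefficient of
    x_i * x_j. Squares go on the diagonal; each cross-term coefficient is
    split evenly over the entries (i, j) and (j, i).
    """
    A = [[Fraction(0)] * n for _ in range(n)]
    for (i, j), value in coeffs.items():
        if i == j:
            A[i - 1][i - 1] = Fraction(value)
        else:
            A[i - 1][j - 1] = A[j - 1][i - 1] = Fraction(value) / 2
    return A


# Coefficients of x1^2 + 2 x2^2 + 5 x3^2 - 4 x1 x2 + 6 x2 x3
coeffs = {(1, 1): 1, (2, 2): 2, (3, 3): 5, (1, 2): -4, (2, 3): 6}
A = symmetric_matrix(3, coeffs)
print([[int(entry) for entry in row] for row in A])
```

Exact fractions are used so that odd cross-term coefficients are halved without rounding.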

If we restrict ourselves to two variables, we see that the graph of a linear function \(z = a_1x_1 + a_2x_2 + b\) is a plane.

Fig. 8.2.1 The plane \(z = \frac13x_1 - x_2 +2\).

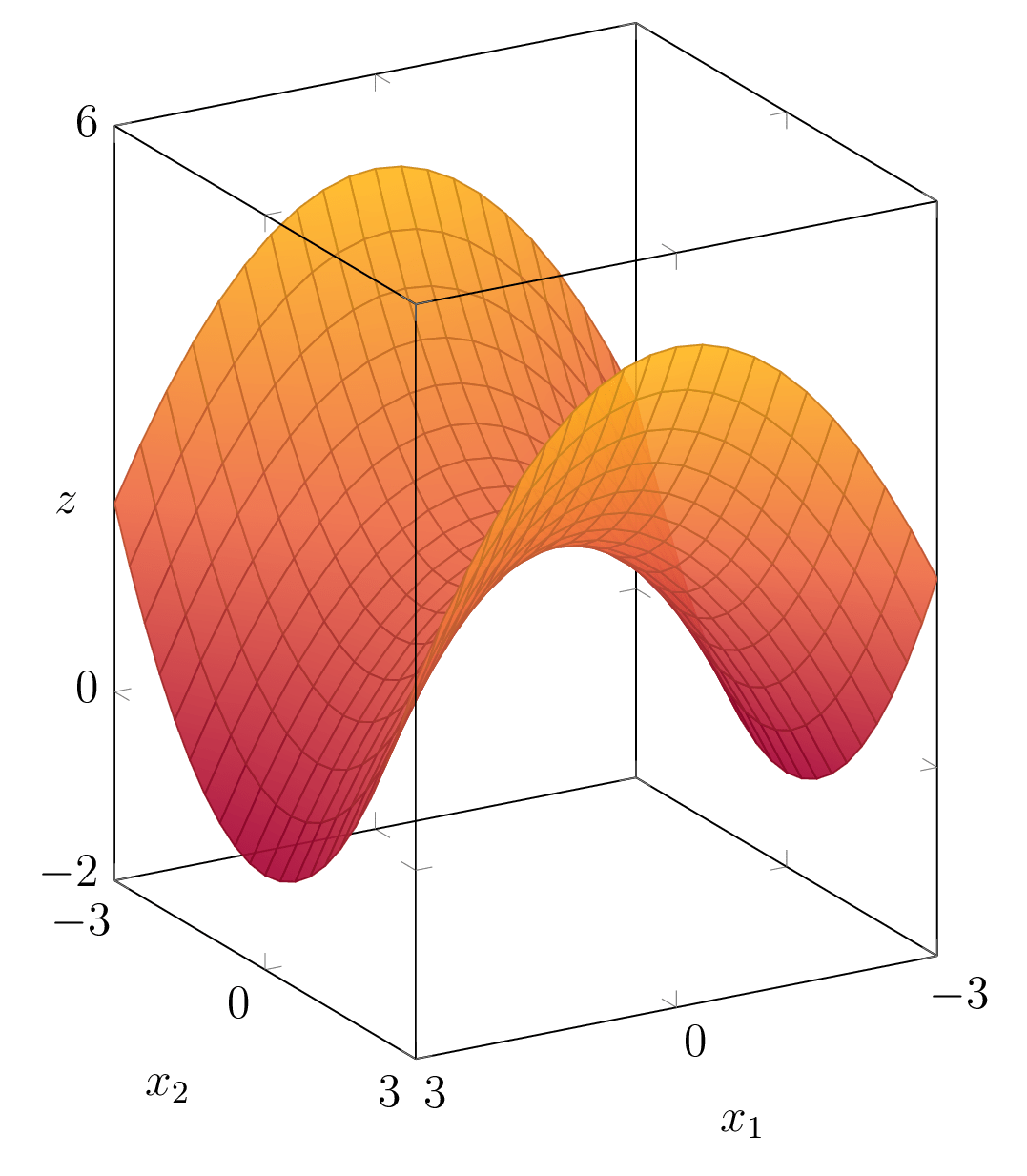

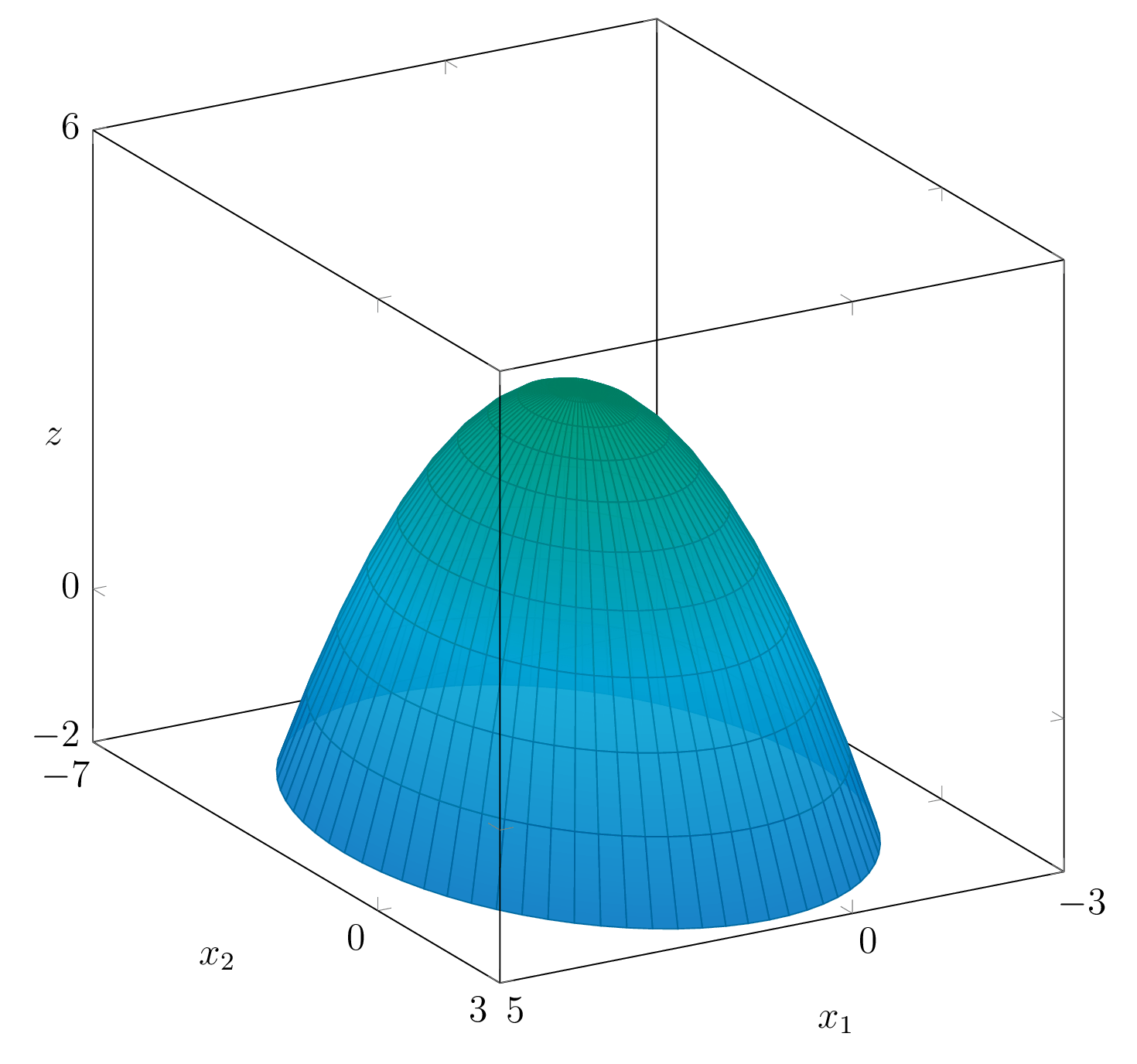

The graph of a quadratic function is a curved surface. Figure 8.2.2 and Figure 8.2.3 show two of these quadratic surfaces.

Fig. 8.2.2 The surface \(z = -\frac13x_1^2 + \frac13x_2^2 + 2 \).

Fig. 8.2.3 The surface \(z = -\frac12x_1^2 - \frac14x_2^2 + x_1 - x_2 + 2\).

The shape of the surfaces is in most cases determined by the quadratic part \(\vect{x}^TA\vect{x}\). The linear part is then only relevant for the position.

Example 8.2.3

Consider the quadratic surface described by

We will apply the shift

so

In the new variables \((\tilde{x}_1,\tilde{x}_2)\) we get

Note that the quadratic parts are the same,

Example 8.2.4

The surfaces defined by

are shifted versions of the same surface. Namely,

Thus \(\mathcal{S}_2\) is also described by

This means that if \(\mathcal{S}_1\) is translated over the vector \(\left(\begin{array}{c} -1 \\ 2 \\ -6 \end{array}\right)\) it becomes the surface \(\mathcal{S}_2\).

For the rest of the section we will therefore only look at the quadratic part \(\vect{x}^TA\vect{x}\).

One of the simplest quadratic forms results when we take \(A = I = I_n\), the identity matrix. Then we have

\[\vect{x}^TI\vect{x} = \vect{x}^T\vect{x} = x_1^2 + x_2^2 + \cdots + x_n^2.\]

For this quadratic form, it is clear that it will only take on non-negative values. And that

Such a quadratic form is called positive definite. In the next subsection we will learn how to find out whether an arbitrary quadratic form has this property.

8.2.2. Diagonalisation of quadratic forms

Let us first consider an example, to get some feeling for what is going on.

Example 8.2.5

Consider the quadratic form

\[q(x_1,x_2) = x_1^2 + 4x_1x_2 + 3x_2^2.\]

At first sight you might think that this quadratic form only takes on non-negative values. One way to show that this is not actually true is by completing squares.

\[q(x_1,x_2) = (x_1 + 2x_2)^2 - 4x_2^2 + 3x_2^2 = (x_1 + 2x_2)^2 - x_2^2.\]

For the last expression, that does not contain a cross term, we can see how we can get a negative outcome. We can make the first term equal to \(0\) by taking \(x_2 = 1\) and \(x_1 = -2\), and then have

\[q(-2,1) = 0^2 - 1^2 = -1.\]

One way to describe in a more abstract/general way what we did in Example 8.2.5 is the following. We can introduce new variables \(y_1, y_2\) via the substitution

For short,

Then in terms of the new variables the quadratic form becomes

Actually, it proves slightly advantageous to express the substitution as \(\vect{x} = P\vect{y}\) for an invertible matrix \(P\). We then have the following proposition.

Proposition 8.2.2 (Quadratic form under a substitution)

The substitution \(\vect{x} = P\vect{y}\) brings the quadratic form

over to

Proof of Proposition 8.2.2

If we put \(\vect{x} = P\vect{y}\) we get

So in terms of \(\vect{y}\) we have the quadratic form

where

Example 8.2.6

In Example 8.2.5 we considered the substitution

or, equivalently

to the quadratic form

We then have

According to Proposition 8.2.2 we then get the quadratic form

This agrees with what we derived in Example 8.2.5.
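Proposition 8.2.2 can also be checked numerically for this example. The sketch below assumes the substitution \(y_1 = x_1 + 2x_2\), \(y_2 = x_2\) suggested by completing the square, that is \(\vect{x} = P\vect{y}\) with \(P = \begin{pmatrix} 1 & -2 \\ 0 & 1 \end{pmatrix}\); the helper functions `transpose` and `matmul` are written out by hand for illustration.

```python
def transpose(M):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]


def matmul(X, Y):
    """Return the matrix product X Y for matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)]
            for row in X]


A = [[1, 2], [2, 3]]      # symmetric matrix of x1^2 + 4 x1 x2 + 3 x2^2
P = [[1, -2], [0, 1]]     # assumed substitution x = P y (x1 = y1 - 2 y2, x2 = y2)

B = matmul(matmul(transpose(P), A), P)   # matrix of the form in the y-variables
print(B)
```

The result is the diagonal matrix with entries \(1\) and \(-1\), which matches the form \(y_1^2 - y_2^2\) obtained by completing the square.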

The technique of completing the squares is one way to ‘diagonalise’ a quadratic form. It may be turned into an algorithm that also works for quadratic forms in \(n\) variables, but we will not pursue that track. There is a route that is more in line with the properties of symmetric matrices.

Suppose \(A\) is a symmetric matrix. We have seen (cf. Theorem 8.1.1) that it can be written as

\[A = QDQ^{-1}\]

for an orthogonal matrix \(Q\). The diagonal matrix \(D\) has the eigenvalues of \(A\) on its diagonal.

Since \(Q\) is an orthogonal matrix, we have

\[Q^{-1} = Q^T, \quad\text{so}\quad D = Q^{-1}AQ = Q^TAQ.\]

If we compare this to Proposition 8.2.2 the following proposition results.

Proposition 8.2.3

Suppose \(q(\vect{x})\) is a quadratic form with matrix \(A\), i.e.,

Let \(Q\) be an orthogonal matrix diagonalising \(A\). That is, \(A = QDQ^{-1}\).

Applying the substitution \(\vect{x} = Q\vect{y}\) then yields the quadratic form

where \(\lambda_1, \ldots, \lambda_n\) are the eigenvalues of the matrix \(A\).

Proof of Proposition 8.2.3

If we make the substitution \(\vect{x} = Q\vect{y}\) we find that

The last expression is indeed of the form

where \(\lambda_1,\lambda_2, \ldots, \lambda_n\) are the eigenvalues of \(A\).

Let us see how the construction of Proposition 8.2.3 works out in an earlier example.

Example 8.2.7

Consider again the matrix \(A = \left(\begin{array}{cc} 1 & 2 \\ 2 & 3 \end{array}\right)\) of Example 8.2.6.

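Its characteristic polynomial is given by

\[\det(A - \lambda I) = (1-\lambda)(3-\lambda) - 4 = \lambda^2 - 4\lambda - 1.\]

The eigenvalues are

\[\lambda_{1,2} = 2 \pm \sqrt{5}.\]

So if we take \(Q = \begin{pmatrix} \vect{q}_1 & \vect{q}_2 \end{pmatrix}\), where \(\vect{q}_1\) and \(\vect{q}_2\) are corresponding eigenvectors of unit length, we find that the substitution \(\vect{x} = Q\vect{y}\) leads to

\[\tilde{q}(\vect{y}) = (2+\sqrt{5})\,y_1^2 + (2-\sqrt{5})\,y_2^2.\]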

Since \((2 + \sqrt{5})> 0\) and \((2 - \sqrt{5})<2-2=0\) we may again conclude that the quadratic form takes on both positive and negative values.
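The computation in this example can be reproduced with a few lines of Python. The sketch below uses the closed-form eigenvalues of a symmetric \(2\times2\)-matrix \(\begin{pmatrix} a & b \\ b & c \end{pmatrix}\) and the eigenvector \((b, \lambda - a)\), which is valid when \(b \neq 0\); the function name `sym2_eigen` and the test point \((y_1, y_2)\) are arbitrary choices for this illustration.

```python
import math


def sym2_eigen(a, b, c):
    """Eigenvalues (largest first) and unit eigenvectors of [[a, b], [b, c]].

    Assumes b != 0, so that (b, lam - a) is a non-zero eigenvector.
    """
    mean = (a + c) / 2
    radius = math.hypot((a - c) / 2, b)
    pairs = []
    for lam in (mean + radius, mean - radius):
        vx, vy = b, lam - a                      # solves (a - lam) vx + b vy = 0
        length = math.hypot(vx, vy)
        pairs.append((lam, (vx / length, vy / length)))
    return pairs


def q(x1, x2):
    """The quadratic form with matrix [[1, 2], [2, 3]]."""
    return x1**2 + 4 * x1 * x2 + 3 * x2**2


(lam1, q1), (lam2, q2) = sym2_eigen(1, 2, 3)
print(lam1, lam2)                                # 2 + sqrt(5) and 2 - sqrt(5)

# Substitute x = Q y = y1 * q1 + y2 * q2 and compare both sides.
y1, y2 = 0.7, -1.3
x1 = y1 * q1[0] + y2 * q2[0]
x2 = y1 * q1[1] + y2 * q2[1]
print(q(x1, x2), lam1 * y1**2 + lam2 * y2**2)    # equal up to rounding
```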

Remark 8.2.1

In Example 8.2.6 and Example 8.2.7 we applied two different substitutions to the same quadratic form with the matrix \(A = \left(\begin{array}{cc} 1 & 2 \\ 2 & 3 \end{array}\right)\).

They led to the two different quadratic forms

The diagonal matrices do not seem to have much in common. However, they do.

It can be shown that if for a symmetric \(n\times n\)-matrix \(A\) it holds that

for two invertible matrices \(P_1\), \(P_2\), then the signs of the values on the diagonals of \(D_1\) and \(D_2\) match in the following sense:

if \(p_1\), \(p_2\) denote the numbers of positive diagonal elements of \(D_1, D_2\), and \(n_i\) are the numbers of negative diagonal elements, then

It follows that the numbers of zeros on the diagonals, \(n - p_i - n_i\), \(i = 1,2\), must also be equal for the two matrices.

In the two examples we see that \(p_1 = p_2 = 1\) and also \(n_1 = n_2 = 1\), in accordance with the statement.

The property is known as Sylvester’s Law of Inertia.
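The counting behind Sylvester's Law of Inertia is easy to express in code. The following Python sketch counts positive, negative and zero diagonal entries for the two diagonalisations of this remark; the diagonal \((1, -1)\) is the one assumed to result from completing the square, and the function name `inertia` and the tolerance `tol` are illustrative choices.

```python
import math


def inertia(diagonal, tol=1e-12):
    """Return (number of positive, negative, zero) entries of a diagonal."""
    p = sum(1 for d in diagonal if d > tol)
    n = sum(1 for d in diagonal if d < -tol)
    return p, n, len(diagonal) - p - n


D1 = [1, -1]                                   # completing the square (assumed)
D2 = [2 + math.sqrt(5), 2 - math.sqrt(5)]      # orthogonal diagonalisation

print(inertia(D1), inertia(D2))
```

Both calls return the same triple, although the diagonal entries themselves differ.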

Grasple exercise 8.2.1

To garner some evidence for Sylvester’s Law of Inertia.

The following proposition is a direct consequence of the diagonalisation (Proposition 8.2.3).

Proposition 8.2.4

Let \(q(\vect{x}) = \vect{x}^TA\vect{x}\) be the quadratic form with matrix \(A\) and suppose that \(A\) has the (ordered) eigenvalues \(\lambda_1 \geq \lambda_2 \geq \cdots \geq \lambda_n\). Then the maximum and the minimum value attained by \(q(\vect{x})\) under the constraint \(\norm{\vect{x}} = 1\) are \(\lambda_1\) and \(\lambda_n\).

The proof contains the same type of reasoning as the proof of Proposition 8.1.4.

Proof of Proposition 8.2.4

Suppose that \(\vect{u}_1, \vect{u}_2,\ldots,\vect{u}_n\) is an orthonormal basis of eigenvectors for \(A\) for the eigenvalues \(\lambda_1 \geq \cdots \geq \lambda_n\).

First of all

Likewise \(q(\vect{u}_n) = \lambda_n\), so \(q(\vect{x})\) does take on the values \(\lambda_1\) and \(\lambda_n\).

Next, for an arbitrary unit vector \(\vect{x}\), which can always be written as

(cf. proof of Proposition 8.1.4), we deduce that

All cross terms \(\vect{u}_i^T\vect{u}_j\) with \(i\neq j\) drop out since \(\vect{u}_i\ip\vect{u}_j=0\), for \(i\neq j\), and \(\vect{u}_i^T\vect{u}_i = 1\) by the assumption that the vectors \(\mathbf{u}_i\) are unit vectors.

Now, invoking \(\lambda_1 \geq \lambda_2 \geq \cdots \geq \lambda_n\) and \(c_1^2 + c_2^2 + \cdots +c_n^2 = 1\), we see that

and also

so we may conclude that indeed

where the expression in the middle is equal to our \(\vect{x}^T A \vect{x} = q(\vect{x})\).
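A brute-force check can make Proposition 8.2.4 tangible. The sketch below, a rough illustration rather than a proof, evaluates the quadratic form with matrix \(\begin{pmatrix} 1 & 2 \\ 2 & 3 \end{pmatrix}\) on 3600 unit vectors \((\cos t, \sin t)\) and compares the extreme values with the eigenvalues \(2 \pm \sqrt{5}\); the grid size is an arbitrary choice.

```python
import math


def q(x1, x2):
    """The quadratic form with matrix [[1, 2], [2, 3]]."""
    return x1**2 + 4 * x1 * x2 + 3 * x2**2


lam_max = 2 + math.sqrt(5)
lam_min = 2 - math.sqrt(5)

# Sample unit vectors (cos t, sin t) on a regular grid of angles.
angles = [2 * math.pi * k / 3600 for k in range(3600)]
values = [q(math.cos(t), math.sin(t)) for t in angles]

print(min(values), lam_min)   # sampled minimum is close to lambda_n
print(max(values), lam_max)   # sampled maximum is close to lambda_1
```

No sampled value falls outside the interval \([\lambda_n, \lambda_1]\), and the sampled extremes approach its endpoints as the grid is refined.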

8.2.3. Positive definite matrices

Let’s start with a list of definitions.

Definition 8.2.1 (Classification of Quadratic Forms)

Let \(A\) be a symmetric matrix and \(q_A(\vect{x}) = \vect{x}^TA\vect{x}\) the corresponding quadratic form.

-

\(q_A\) is called a positive definite quadratic form if \(q_A(\vect{x}) > 0\) for all \(\vect{x} \neq \vect{0}\).

-

\(q_A\) is called positive semi-definite if \(q_A(\vect{x}) \geq 0\) for all \(\vect{x} \).

-

\(q_A\) is called negative definite if \(q_A(\vect{x}) < 0\) for all \(\vect{x} \neq \vect{0}\).

-

\(q_A\) is called negative semi-definite if \(q_A(\vect{x}) \leq 0\) for all \(\vect{x} \).

If none of the above applies, then \(q_A\) is called an indefinite quadratic form.

The same classification is used for symmetric matrices. E.g., \(A\) is a positive definite matrix if the corresponding quadratic form is positive definite.

Note that every quadratic form \(\vect{x}^TA\vect{x}\) gets the value \(0\) when \(\vect{x}\) is the zero vector. That is the reason we exclude the zero vector in the definition of positive/negative definite.

The classification of a quadratic form follows immediately from the eigenvalues of its matrix.

Theorem 8.2.1

Suppose \(q_A(\vect{x}) = \vect{x}^TA\vect{x}\) for the symmetric \(n \times n\)-matrix \(A\). Let \(\lambda_1, \ldots, \lambda_n\) be the complete set of (real) eigenvalues of \(A\).

Then

-

\(q_A\) is positive definite if and only if all eigenvalues are positive.

-

\(q_A\) is positive semi-definite if and only if all eigenvalues are non-negative.

-

\(q_A\) is negative definite if and only if all eigenvalues are negative.

-

\(q_A\) is negative semi-definite if and only if all eigenvalues are non-positive.

And lastly

-

\(q_A\) is indefinite if and only if at least one eigenvalue is positive and at least one eigenvalue is negative.

Proof of Theorem 8.2.1

This immediately follows from Proposition 8.2.3. If we make the substitution \(\vect{x} = Q\vect{y}\) with the matrix \(Q\) of the orthogonal diagonalisation, i.e.,

the quadratic form transforms to

Let us consider the case where all eigenvalues \(\lambda_i\) are positive. Then the expression for \(\tilde{q}(\vect{y})\) is positive for all \(\vect{y} \neq \vect{0}\). It remains to show that then also \(q(\vect{x}) > 0\) for all vectors \(\vect{x}\neq \vect{0}\).

Since \(Q\) is an orthogonal matrix it is also an invertible matrix. So any non-zero vector \(\vect{x}\) can be written as

for a (unique) non-zero vector \(\vect{y}\).

As a consequence, for any non-zero vector \(\vect{x}\) we have

Likewise the other possibilities of the signs of the eigenvalues may be checked.
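The eigenvalue test of Theorem 8.2.1 translates directly into a small classifier. The Python sketch below is restricted to symmetric \(2\times2\)-matrices \(\begin{pmatrix} a & b \\ b & c \end{pmatrix}\), for which the eigenvalues have a closed form; the function name `classify_sym2` and the tolerance `tol` are assumptions of this illustration.

```python
import math


def classify_sym2(a, b, c, tol=1e-12):
    """Classify q(x) = a x1^2 + 2 b x1 x2 + c x2^2 via the eigenvalues
    of the symmetric matrix [[a, b], [b, c]]."""
    mean = (a + c) / 2
    radius = math.hypot((a - c) / 2, b)
    lam_max, lam_min = mean + radius, mean - radius
    if lam_min > tol:
        return "positive definite"
    if lam_max < -tol:
        return "negative definite"
    if lam_min >= -tol:
        # The zero matrix ends up here, although it is also negative semi-definite.
        return "positive semi-definite"
    if lam_max <= tol:
        return "negative semi-definite"
    return "indefinite"


print(classify_sym2(1, 0, 1))     # identity matrix
print(classify_sym2(1, 2, 3))     # eigenvalues 2 + sqrt(5) and 2 - sqrt(5)
print(classify_sym2(1, 1, 1))     # eigenvalues 2 and 0
print(classify_sym2(-2, 0, -1))   # eigenvalues -1 and -2
```

The tolerance guards against rounding when an eigenvalue is zero in exact arithmetic.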

Exercise 8.2.1

Verify the validity of the second statement made in Theorem 8.2.1.

Solution to Exercise 8.2.1

The transformed quadratic form is still

and \(q_A(\vect{x})=\tilde{q}(\vect{y})\) with \(\vect{x} = Q\vect{y}\).

Now we can chain the following equivalent statements:

Example 8.2.8

Consider the quadratic form

The matrix of this quadratic form is

The eigenvalues of \(A\) are computed as

With Theorem 8.2.1 in mind, we can conclude that the quadratic form is positive semi-definite but not positive definite.

Exercise 8.2.2

This exercise nicely recapitulates the ideas of the section. There is a cameo of the concept of completing the square, but that is of minor importance.

-

Show that the quadratic form in Example 8.2.8 can be rewritten as follows

\[q(x_1,x_2,x_3) = 2(x_1 - \tfrac12x_2 - \tfrac12x_3)^2 + \tfrac12(x_2 - x_3)^2. \tag{8.2.2}\]

-

What is the corresponding transformation \(\vect{y} = P\vect{x}\) that brings the quadratic form in diagonal form \(\vect{y}^TD_2\vect{y}\), and what is the diagonal matrix \(D_2\)?

-

By inspection of \(D_2\) find the classification of \(q\).

-

By inspection of Equation (8.2.2), find a non-zero vector \(\vect{x}\) for which \(q(\vect{x}) = 0\).

-

Check that the vector you found in iv. is an eigenvector of the matrix of the quadratic form, i.e., \(A = \left(\begin{array}{ccc} 2 & -1 & -1 \\ -1 & 1 & 0 \\ -1 & 0 & 1 \end{array}\right)\).

Solution to Exercise 8.2.2

-

We perform direct computations:

\[\begin{split} \begin{align*} q(x_1,x_2,x_3) &= 2x_1^2 + x_2^2 +x_3^2 - 2x_1x_2 - 2x_1x_3 \\ &= 2\left(x_1^2 - x_1x_2 - x_1x_3\right)+ x_2^2 +x_3^2 \\ &= 2\left(\left(x_1 - \frac12x_2 - \frac12x_3\right)^2-\left(\frac12x_2+\frac12x_3\right)^2\right)+ x_2^2 +x_3^2 \\ &= 2\left(\left(x_1 - \frac12x_2 - \frac12x_3\right)^2-\frac14x_2^2-\frac12x_2x_3-\frac14x_3^2\right)+ x_2^2 +x_3^2 \\ &= 2\left(x_1 - \frac12x_2 - \frac12x_3\right)^2-\frac12x_2^2-x_2x_3-\frac12x_3^2+ x_2^2 +x_3^2 \\ &= 2\left(x_1 - \frac12x_2 - \frac12x_3\right)^2+\frac12x_2^2-x_2x_3+\frac12x_3^2 \\ &= 2\left(x_1 - \frac12x_2 - \frac12x_3\right)^2+\frac12\left(x_2^2-2x_2x_3+x_3^2\right) \\ &= 2(x_1 - \tfrac12x_2 - \tfrac12x_3)^2 + \tfrac12(x_2 - x_3)^2. \end{align*} \end{split}\]

-

We only present an answer here, obtained from the orthogonal diagonalisation \(A = PD_2P^T\) (so that \(\vect{x} = P\vect{y}\)):

\[\begin{split} P=\begin{pmatrix} -\frac{2}{\sqrt6} & 0 & \frac{1}{\sqrt3} \\ \frac{1}{\sqrt6} & -\frac{1}{\sqrt2} & \frac{1}{\sqrt3} \\ \frac{1}{\sqrt6}& \frac{1}{\sqrt2} & \frac{1}{\sqrt3} \end{pmatrix}\text{ and }D_2=\begin{pmatrix}3&0&0\\0&1&0\\0&0&0\end{pmatrix} \end{split}\]

-

\(q\) is positive semi-definite.

-

The last term of Equation (8.2.2) gives rise to choosing \(x_2=x_3\). The first term then indicates \(x_1=\frac12x_2+\frac12x_3=x_3\). Choosing \(x_3=1\) gives \(x_2=1\) and \(x_1=1\). In vector form:

\[\begin{split} \vect{x}=\begin{pmatrix}1\\1\\1\end{pmatrix}. \end{split}\]

-

The vector \(\vect{x}=\begin{pmatrix}1\\1\\1\end{pmatrix}\) is a non-zero scalar multiple of the third column of \(P\), so it is an eigenvector.
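The claims of this exercise can be verified with exact arithmetic. The Python sketch below, using only the standard library, compares the quadratic form with matrix \(A\) against the completed-square expression of Equation (8.2.2) on a grid of integer points and confirms that \(A\) maps \((1,1,1)\) to the zero vector; the function names and the choice of grid are illustrative.

```python
from fractions import Fraction
from itertools import product

A = [[2, -1, -1], [-1, 1, 0], [-1, 0, 1]]


def q(x):
    """Evaluate x^T A x."""
    return sum(A[i][j] * x[i] * x[j] for i in range(3) for j in range(3))


def completed_square(x):
    """Right-hand side of Equation (8.2.2)."""
    x1, x2, x3 = x
    half = Fraction(1, 2)
    return 2 * (x1 - half * x2 - half * x3) ** 2 + half * (x2 - x3) ** 2


# Both expressions agree on every integer point with entries in -2..2.
agree = all(q(x) == completed_square(x)
            for x in product(range(-2, 3), repeat=3))

# A maps (1, 1, 1) to the zero vector: an eigenvector for the eigenvalue 0.
v = (1, 1, 1)
Av = [sum(A[i][j] * v[j] for j in range(3)) for i in range(3)]

print(agree, Av, q(v))
```

Agreement on a finite grid is evidence rather than a proof; the algebraic derivation in the solution settles the identity.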

8.2.4. Conic sections

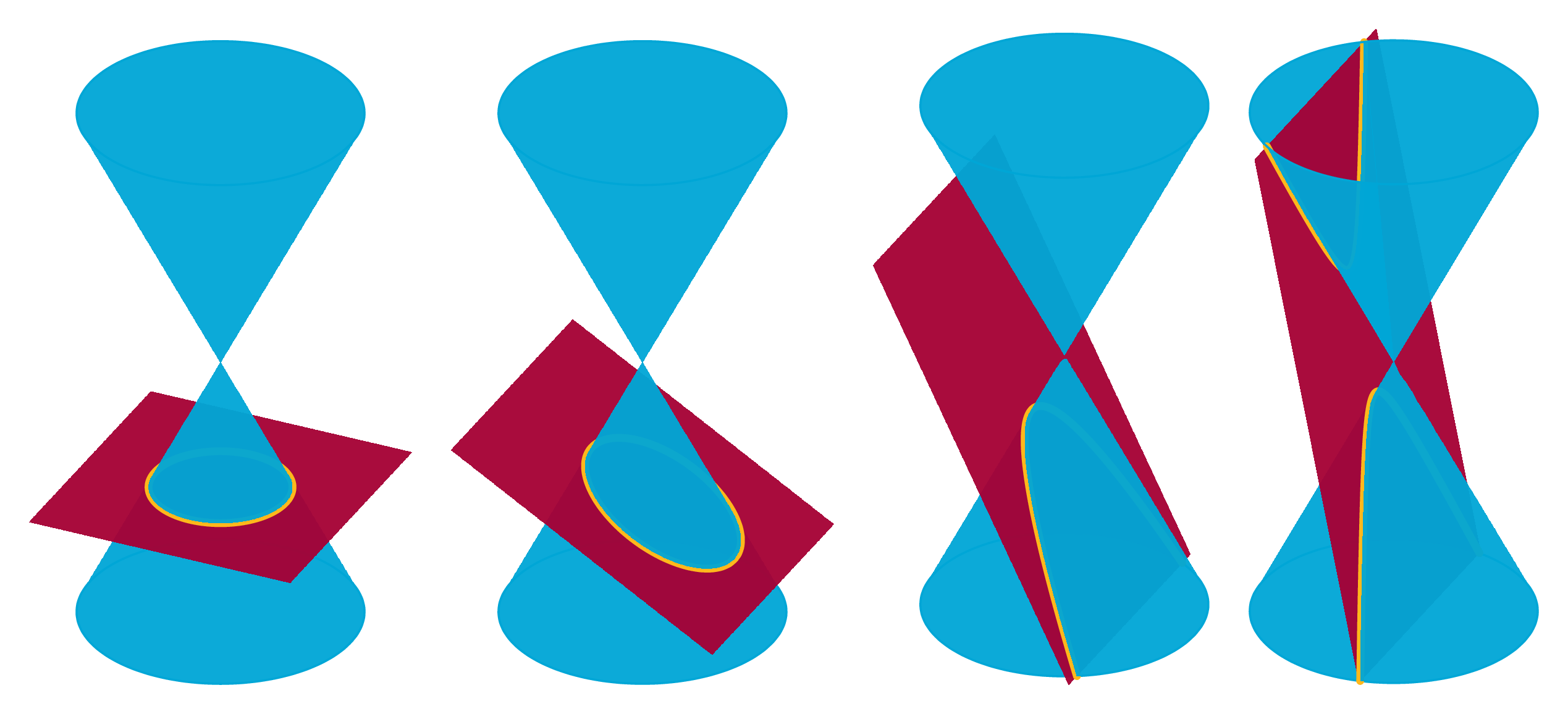

A conic section or conic is a curve that results when a circular cone is intersected with a plane.

Figure 8.2.4 shows the different shapes when the plane is not going through the apex.

Fig. 8.2.4 Intersections of a cone with several planes (not going through the apex).

The resulting curve is then either a hyperbola, a parabola or an ellipse, with the circle as a special case of the ellipse. If the plane does go through the apex of the cone, the conic section is called degenerate.

Exercise 8.2.3

Describe the (three) possible degenerate forms of conic sections. That is, what are the three different forms that result when a cone is intersected with a plane that goes through the apex?

Solution to Exercise 8.2.3

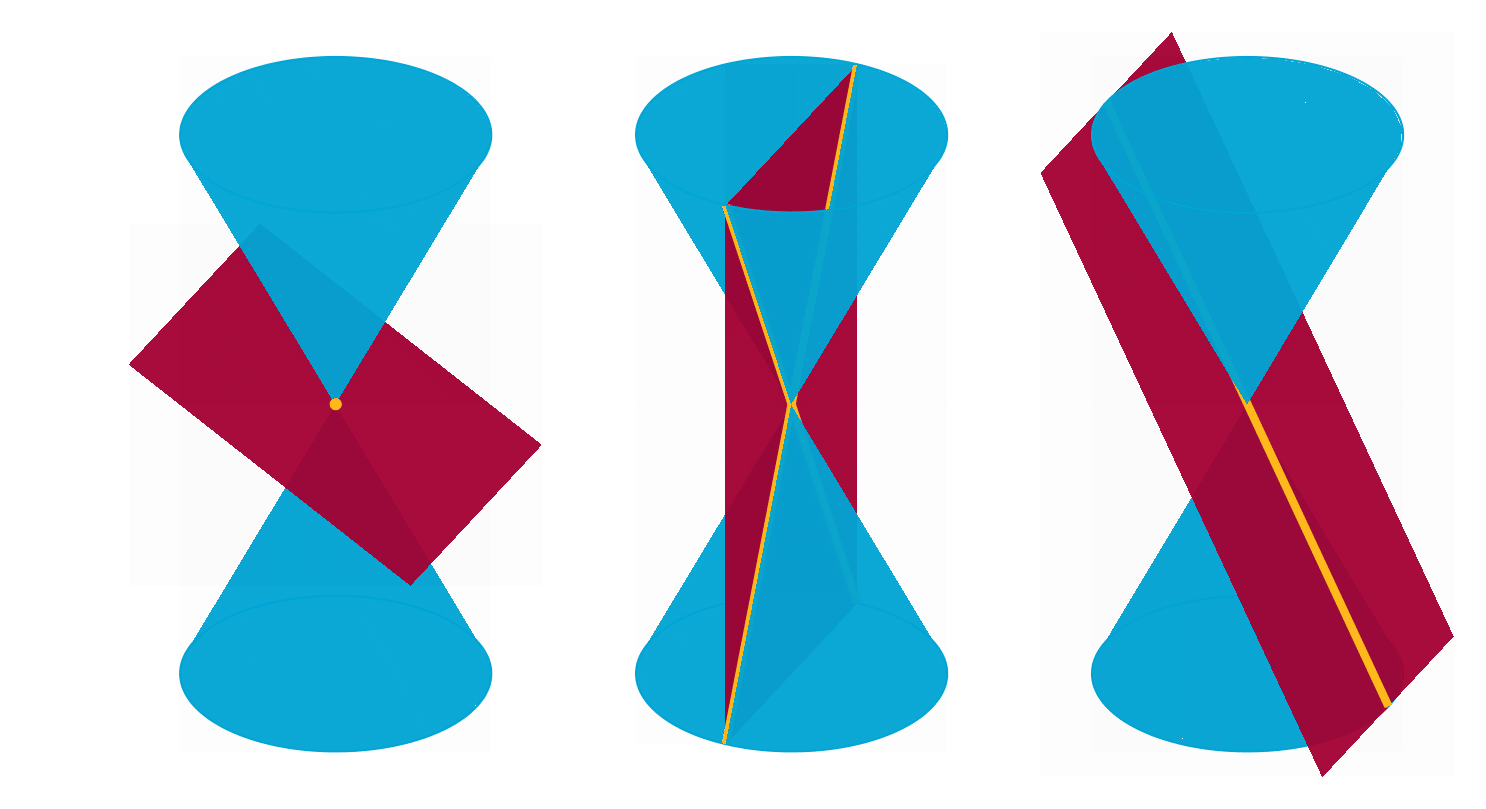

There are three essentially different situations.

A plane through the apex may have the apex as unique common point with the cone. Then the conic (inter)section is just this one point.

If (as in the central image in Figure 8.2.5) the plane is close to vertical, it will intersect the cone in two lines through the apex.

The last form of a degenerate conic section is the transition between these situations, i.e. when the plane is tangent to the cone. This yields the last possible conic section, a line.

Fig. 8.2.5 The three degenerate conic sections.

In the plane all non-degenerate conic sections may be described by a quadratic equation

where both the parameter \(f\) and at least one of the parameters \(a,b,c\) are not equal to zero.

Example 8.2.9

The curve given by the equation \(x_1^2 + x_2^2 - 25 = 0\) is a circle with radius \(5\).

The equation \(x_1^2 - x_2 - 2x_1 + 5 = 0\) gives a parabola with vertex (‘top’) at \((1, 4)\) and the line \(x_1 = 1\) as axis of symmetry.

If the parameters \(d\) and \(e\) in Equation (8.2.3) are zero, the equation

is said to represent a central conic. When \(b = 0\) as well,

defines a central conic in standard position. Such a conic is symmetric with respect to both coordinate axes.

If all parameters \(a,c,f\) in Equation (8.2.5) are non-zero the equation can be rewritten in one of the two standard forms

where we may assume that \( r_1,r_2 > 0\).

In case \((I)\) the equation describes an ellipse if \(r_1 \neq r_2\) and a circle if \(r_1 = r_2\).

In case \((II)\) the resulting curve is a hyperbola, with the lines \(x_2 = \pm\dfrac{r_2}{r_1}x_1\) as asymptotes.

Both curves have the coordinate axes as axes of symmetry. In this context they are also called the principal axes. See Figure 8.2.6.

Fig. 8.2.6 (Standard) Hyperbola and Ellipse.

Example 8.2.10

The equation

can be rewritten as

and a bit further to the standard form

The corresponding curve is an ellipse with the coordinate axes as principal axes and with \(-5/2 \leq x_1 \leq 5/2\) and \(-5/3 \leq x_2 \leq 5/3\).

Likewise

When rewritten in the form

it is seen that the lines \(x_2 = \pm 2x_1\) are asymptotes. Namely, if \(x_1 \to \pm \infty\), then

If in Equation (8.2.4) the parameter \(b\) is not equal to zero, the principal axes can be found by diagonalisation of the quadratic form

The next proposition explains how.

For notational convenience we have denoted the coefficient of the cross term as \(2b\).

Proposition 8.2.5

Suppose the conic \(\mathcal{C}\) is defined by the equation

where \(a,b,c\) are not all equal to zero, and \(k \neq 0\).

Then the principal axes are the lines generated by the eigenvectors of the matrix

We will not give a proof of Proposition 8.2.5, but instead we will give two illustrative examples.
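To make the recipe of Proposition 8.2.5 concrete, here is a minimal Python sketch for a conic \(ax_1^2 + 2bx_1x_2 + cx_2^2 = k\) with \(b \neq 0\). The conic \(5x_1^2 - 4x_1x_2 + 5x_2^2 = 21\) used below is a hypothetical example that does not occur in the text, and the function names `principal_axes` and `conic_type` are likewise assumptions of this illustration.

```python
import math


def principal_axes(a, b, c):
    """Eigenvalues and unit eigenvectors of [[a, b], [b, c]] (assumes b != 0)."""
    mean = (a + c) / 2
    radius = math.hypot((a - c) / 2, b)
    result = []
    for lam in (mean + radius, mean - radius):
        vx, vy = b, lam - a                  # solves (a - lam) vx + b vy = 0
        norm = math.hypot(vx, vy)
        result.append((lam, (vx / norm, vy / norm)))
    return result


def conic_type(a, b, c, k, tol=1e-12):
    """Type of the curve a x1^2 + 2 b x1 x2 + c x2^2 = k, with k != 0."""
    (lam1, _), (lam2, _) = principal_axes(a, b, c)
    # In eigen-coordinates the equation reads lam1 y1^2 + lam2 y2^2 = k.
    if lam1 * lam2 < -tol:
        return "hyperbola"
    if lam1 * lam2 > tol:
        return "ellipse" if lam1 * k > 0 else "empty set"
    return "neither ellipse nor hyperbola"


# Hypothetical conic 5 x1^2 - 4 x1 x2 + 5 x2^2 = 21, so a = 5, b = -2, c = 5.
axes = principal_axes(5, -2, 5)
for lam, direction in axes:
    print(lam, direction)                    # each direction spans a principal axis
print(conic_type(5, -2, 5, 21))
```

For this hypothetical conic the eigenvalues are \(7\) and \(3\), so the curve is an ellipse whose principal axes are the lines spanned by \((-1, 1)\) and \((1, 1)\).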

Example 8.2.11

We consider the quadratic form

Since

Proposition 8.2.5 tells us we have to look for eigenvectors of the matrix

The usual computations yield the following eigenvalues and eigenvectors:

The eigenvectors are orthogonal, as they should be for a symmetric matrix. We see that \(A\) can be orthogonally diagonalised as

The substitution \(\vect{x} = Q\vect{y}\) yields

So in the coordinates \(y_1\) and \(y_2\) the equation becomes

From this we can already conclude that the curve defined by Equation (8.2.7) is a hyperbola. The principal axes in the \(x_1\)-\(x_2\)-plane are the lines given by

The asymptotes in the coordinates \(y_1, y_2\) are the lines

Since

we find the asymptotes in the \(x_1\)-\(x_2\)-plane by rotating the lines \(y_2 = \pm\sqrt{3}y_1\) over an angle \(-\frac14\pi\). This leads to the direction vectors of the asymptotes in the \(x_1\)-\(x_2\)-plane as

They can be simplified to the direction vectors \( \begin{pmatrix} 2 \\ 1\end{pmatrix}\) and \(\begin{pmatrix} 1 \\ 2\end{pmatrix}\).

Example 8.2.12

We consider the quadratic form

Here we have

so now we have to look for eigenvalues and eigenvectors of the matrix

They are found to be

We orthogonally diagonalise \(A\) as

The substitution \(\vect{x} = Q\vect{y}\) yields the quadratic form

or

This is an ellipse in the \(y_1\)-\(y_2\)-plane with long axis \(6\sqrt{2}\), the length of the line segment from \((-3\sqrt{2},0)\) to \((3\sqrt{2},0)\), and short axis \(\dfrac{12}{\sqrt{7}}\).

For the ellipse in the \(x_1\)-\(x_2\)-plane we find the principal axes

See Figure 8.2.7.

Fig. 8.2.7 The ellipse with its principal axes.

Exercise 8.2.4

What happens if in Equation (8.2.4) the coefficient \(f\) is equal to zero? (There are actually three cases to consider!)

Solution to Exercise 8.2.4

We have to look at the solutions \(\begin{pmatrix} x_1 \\ x_2 \end{pmatrix}\) of the equation \(ax_1^2 + bx_1x_2 + cx_2^2 = 0\), where not all three coefficients \(a,b,c\) are zero.

We can rewrite the equation as

As \(A\) is symmetric, it can be orthogonally diagonalised as \(A=QDQ^T\), with \(D\) a diagonal matrix with the eigenvalues \(\lambda_1, \lambda_2\) of \(A\) on the diagonal and \(Q\) an orthogonal matrix with the corresponding eigenvectors as columns.

The substitution \(\vect{x} = Q\vect{y}\) transforms the equation to

or equivalently

We now consider three cases: \(\lambda_1\lambda_2 > 0\), \(\lambda_1\lambda_2 < 0\) and \(\lambda_1\lambda_2 = 0\).

For \(\lambda_1\lambda_2 > 0\), the only solution to (8.2.9) is \(\vect{y} = \vect{0}\), which means that the only solution to the original equation is \(\vect{x} = \vect{0}\), i.e. the conic section is the point \((x_1,x_2)=(0,0)\).

If \(\lambda_1\lambda_2 < 0\), the two solutions to (8.2.9) are

which, with respect to the \((y_1,y_2)\)-coordinates, are two intersecting lines through the origin, with slopes \(\pm \sqrt{-\frac{\lambda_1}{\lambda_2}}\). As the transformation \(\vect{x} = Q\vect{y}\) is invertible, the two intersecting lines in the \((y_1,y_2)\)-plane are transformed to two intersecting lines through the origin in the \((x_1,x_2)\)-plane.

Finally, consider the case \(\lambda_1\lambda_2 = 0\). Then one of the eigenvalues is zero, say \(\lambda_1 = 0\), and the other is non-zero, say \(\lambda_2 \neq 0\). The equation (8.2.9) then becomes

which has the solution \(y_2 = 0\). The other variable \(y_1\) can take any value, so the solution set is the line \(y_2 = 0\) in the \((y_1,y_2)\)-plane. The transformation \(\vect{x} = Q\vect{y}\) is still invertible, so the solution set in the \((x_1,x_2)\)-plane is also a line, namely the line through the origin with direction vector \(\vect{v}_1\), the eigenvector corresponding to the zero eigenvalue.
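The three cases can be told apart without computing the eigenvalues, because the product \(\lambda_1\lambda_2\) equals the determinant of the symmetric matrix \(\begin{pmatrix} a & b/2 \\ b/2 & c \end{pmatrix}\), that is \(ac - \tfrac14b^2\). The Python sketch below uses this fact; the function name `degenerate_conic` and the three test equations are illustrative choices.

```python
from fractions import Fraction


def degenerate_conic(a, b, c):
    """Solution set of a x1^2 + b x1 x2 + c x2^2 = 0 (a, b, c not all zero)."""
    # det [[a, b/2], [b/2, c]] = a c - b^2 / 4 = lambda_1 * lambda_2
    det = Fraction(a) * Fraction(c) - Fraction(b) ** 2 / 4
    if det > 0:
        return "single point (the origin)"
    if det < 0:
        return "two intersecting lines"
    return "one line"


print(degenerate_conic(1, 0, 1))    # x1^2 + x2^2 = 0
print(degenerate_conic(1, 0, -1))   # x1^2 - x2^2 = 0
print(degenerate_conic(1, 2, 1))    # (x1 + x2)^2 = 0
```

Exact fractions avoid rounding in the borderline case where the determinant is zero.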

8.2.5. Grasple exercises

Grasple exercise 8.2.2

To write down the matrix of a quadratic form in three variables.

Grasple exercise 8.2.3

To write down the matrix of a quadratic form in three variables.

Grasple exercise 8.2.4

To perform a change of variables for a quadratic form \(\vect{x}^TA\vect{x}\) in two variables.

Grasple exercise 8.2.5

To classify a \(3\times3\)-matrix of which the characteristic polynomial is given.

Grasple exercise 8.2.6

To classify a quadratic form in two variables.

Grasple exercise 8.2.7

To classify a quadratic form in two variables.

Grasple exercise 8.2.8

To classify two quadratic forms in two variables.

Grasple exercise 8.2.9

For which value of a parameter \(\beta\) is a quadratic form in two variables indefinite?

Grasple exercise 8.2.10

To describe three central conic sections geometrically.

Grasple exercise 8.2.11

Natural sequel to previous exercise.

Grasple exercise 8.2.12

For which parameter \(a\) is a conic section \(\vect{x}^TA\vect{x} =1\) an ellipse/hyperbola/something else?

Grasple exercise 8.2.13

Maximising \(\vect{x}^TA\vect{x}\) under the restriction \(\norm{\vect{x}}=1\), for a \(2\times2\)-matrix \(A\).

The following exercises are more theoretical.

Grasple exercise 8.2.14

True or false? If \(A\) is a positive definite matrix, then the diagonal of \(A\) is positive (v.v.).

Grasple exercise 8.2.15

If \(A\) and \(B\) are symmetric matrices with positive eigenvalues, what about \(A+B\)?

Grasple exercise 8.2.16

Two True/False questions about vectors \(\vect{x}\) for which \(\vect{x}^TA\vect{x} = 0\).